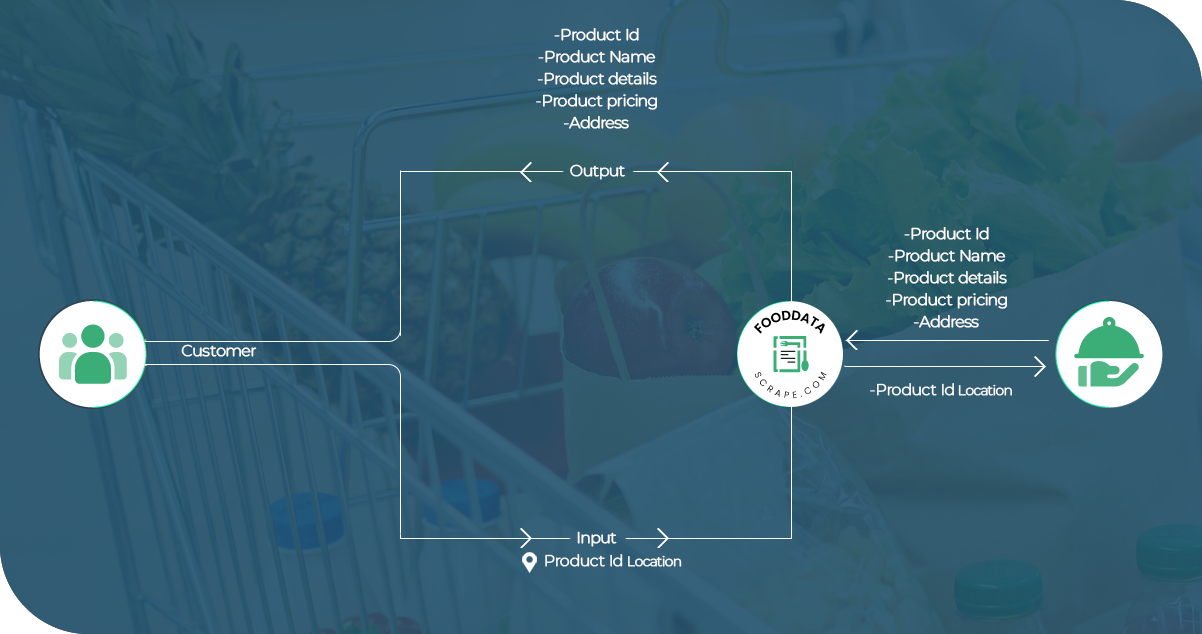

The Client

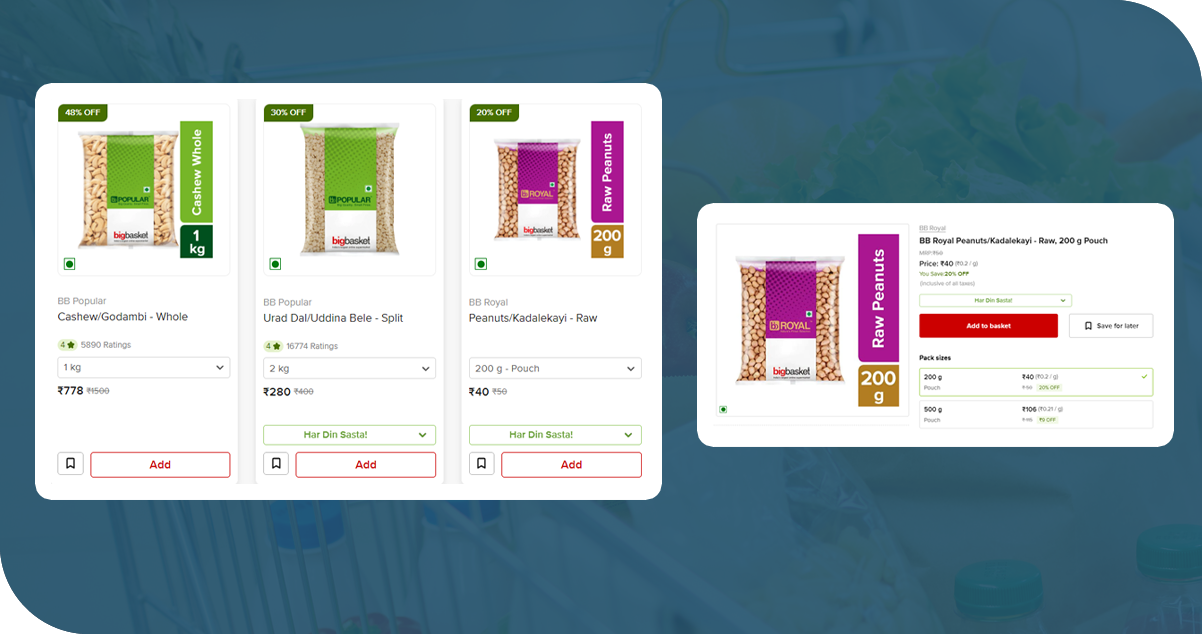

Our client operates in the grocery delivery industry and wanted to improve its online grocery delivery service using data scraped from BigBasket. With this data, the client can gain market insights, optimize its product offerings, and give customers a better, more competitive grocery shopping experience.

Key Challenges

BigBasket uses anti-scraping measures to protect its data, including CAPTCHA challenges, IP blocking, and frequent changes to its website. These made it difficult to collect data consistently.

The large volume of data, combined with frequently changing data structures on BigBasket's website, required ongoing adjustments to the scraping process and added to its complexity.

Ensuring compliance with BigBasket's terms of service and respecting data privacy laws while scraping posed legal and ethical challenges. Balancing data collection needs with adherence to regulations was a constant concern.

Key Solutions

- Proxy Rotation: We employed a proxy rotation system to evade IP blocking. It allowed us to distribute scraping requests across multiple IP addresses, reducing the risk of detection and blocking.

- CAPTCHA-Solving Services: Using CAPTCHA-solving services let us handle CAPTCHA challenges automatically, so data collection continued without interruption.

- Dynamic Scraping Techniques: We developed scraping techniques for BigBasket grocery delivery data that could adapt to changes in the website's structure. This let us keep extracting data even as BigBasket regularly updated its pages.

- Rate Limiting and Throttling: Rate limiting and throttling controlled the pace of our scraping requests, which kept the load on the website's servers low and reduced the risk of being blocked.

- Legal Compliance: Our grocery delivery data scraping services strictly adhered to BigBasket's terms of service and data privacy regulations. This included respecting robots.txt rules, being transparent about our data collection activities, and protecting customer privacy.
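
The rate-limiting and robots.txt practices described above can be illustrated with a small Python sketch. It is a hypothetical helper, not the code used in this project. The class name PoliteScheduler, the user agent ExampleGroceryBot, the 2-second default interval, and the sample robots.txt rules are all assumptions for illustration. The sketch parses robots.txt rules with the standard library's urllib.robotparser. In a live crawler you would call set_url() and read() instead of passing lines in. It honours a Crawl-delay if the site declares one, and it waits so that requests to the same host are spaced at least that far apart.

```python
import time
import urllib.robotparser
from urllib.parse import urlsplit

# Hypothetical robots.txt content for illustration only; a real crawler would
# fetch the target site's file with RobotFileParser.set_url() and read().
SAMPLE_ROBOTS_TXT = [
    "User-agent: *",
    "Disallow: /checkout/",
    "Crawl-delay: 3",
]


class PoliteScheduler:
    """Hypothetical helper: honours robots.txt and spaces out requests per host."""

    def __init__(self, robots_lines, user_agent, min_interval=2.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.parser = urllib.robotparser.RobotFileParser()
        self.parser.parse(robots_lines)  # load rules from already-fetched lines
        self.user_agent = user_agent
        # Prefer the site's declared Crawl-delay; fall back to our own default.
        delay = self.parser.crawl_delay(user_agent)
        self.min_interval = float(delay) if delay is not None else min_interval
        self.clock = clock
        self.sleep = sleep
        self.last_request = {}  # host -> time of the previous request

    def allowed(self, url):
        """True if robots.txt permits this user agent to fetch the URL."""
        return self.parser.can_fetch(self.user_agent, url)

    def wait_turn(self, url):
        """Sleep until min_interval has passed since the last request to this host."""
        host = urlsplit(url).netloc
        previous = self.last_request.get(host)
        if previous is not None:
            remaining = self.min_interval - (self.clock() - previous)
            if remaining > 0:
                self.sleep(remaining)
        self.last_request[host] = self.clock()


if __name__ == "__main__":
    scheduler = PoliteScheduler(SAMPLE_ROBOTS_TXT, "ExampleGroceryBot")
    for url in ("https://www.example.com/products/apples",
                "https://www.example.com/checkout/cart"):
        verdict = "allowed" if scheduler.allowed(url) else "disallowed by robots.txt"
        print(url, verdict)
```

Before each request, a crawler built this way would call allowed(url) and skip disallowed paths, then call wait_turn(url) before fetching. The clock and sleep functions are injectable so the throttling logic can be tested without real delays.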

Methodologies Used

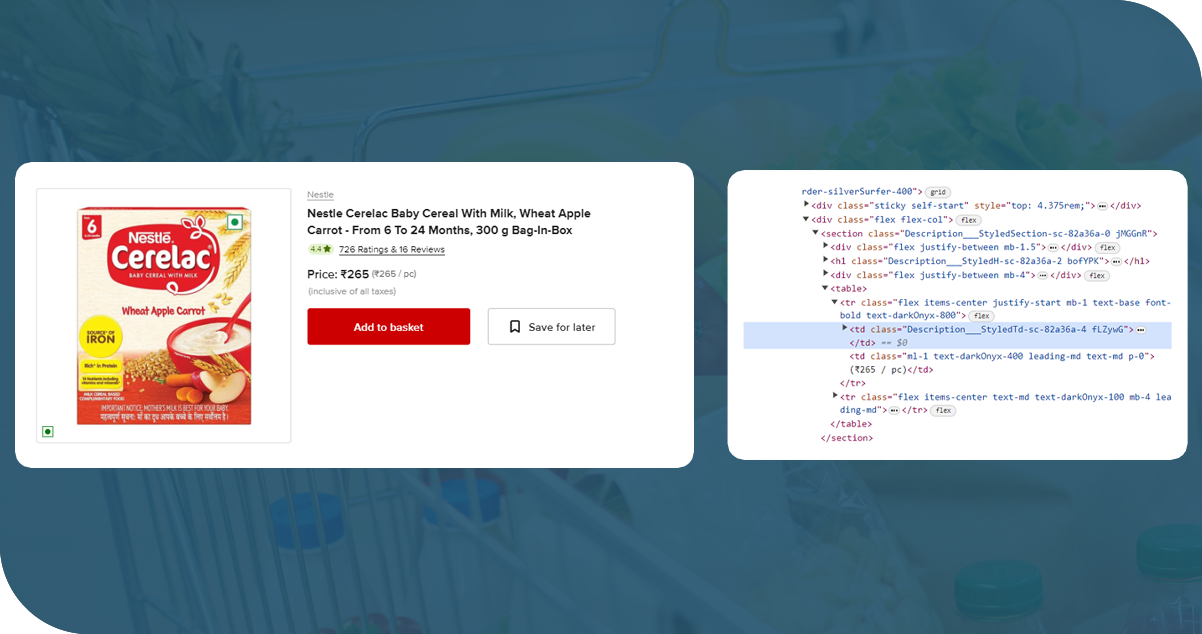

- Web Scraping Frameworks: Python tools such as BeautifulSoup (an HTML parsing library) and Scrapy (a crawling framework) allowed us to systematically parse and extract structured data from BigBasket's web pages.

- Proxy Servers: We used proxy servers and rotating IP addresses to prevent IP blocking and maintain a low profile while scraping grocery data. This kept data collection running without interruption.

- Headless Browsers: Headless browsers driven by tools such as Puppeteer (a Node.js library for controlling Chrome or Chromium) let us render JavaScript-heavy pages and interact with them as a real user would, overcoming anti-scraping measures.

- CAPTCHA-Solving Services: To address CAPTCHA challenges, we integrated third-party CAPTCHA-solving services or built custom solutions to automate CAPTCHA resolution.

- Data Parsing and Transformation: We developed custom scripts to parse and transform the scraped data into structured formats (e.g., JSON or CSV) for easy analysis and storage.

- Scheduled Scraping: We set up scheduled scraping tasks to collect data from BigBasket regularly, so that our dataset stayed up to date and reflected recent changes on the platform.
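
The parsing and transformation steps above can be sketched in Python. This sketch is illustrative only. It uses the standard library's html.parser instead of BeautifulSoup or Scrapy so that it runs without third-party packages. The sample HTML, its class names (product, name, price), and the field name price_inr are hypothetical and do not reflect BigBasket's actual page structure, which changes over time. The sketch extracts product records from listing markup, converts the price text to a number, and serializes the result as JSON and CSV.

```python
import csv
import io
import json
from html.parser import HTMLParser

# Hypothetical listing markup; the class names are assumptions, not
# BigBasket's real page structure.
SAMPLE_HTML = """
<div class="product"><span class="name">Fresh Tomato 1 kg</span>
  <span class="price">₹42.00</span></div>
<div class="product"><span class="name">Basmati Rice 5 kg</span>
  <span class="price">₹1,049.50</span></div>
"""


class ProductParser(HTMLParser):
    """Collects raw {name, price} strings from elements with the assumed classes."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field that incoming text belongs to, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.products.append({"name": "", "price": ""})
        elif self.products and "name" in classes:
            self._field = "name"
        elif self.products and "price" in classes:
            self._field = "price"

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field:
            self.products[-1][self._field] += data.strip()


def normalise(raw_products):
    """Convert price text such as '₹1,049.50' into a float in rupees."""
    return [
        {"name": p["name"],
         "price_inr": float(p["price"].lstrip("₹").replace(",", ""))}
        for p in raw_products
    ]


def parse_products(html):
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return normalise(parser.products)


def to_json(records):
    return json.dumps(records, ensure_ascii=False, indent=2)


def to_csv(records):
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price_inr"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


if __name__ == "__main__":
    records = parse_products(SAMPLE_HTML)
    print(to_json(records))
    print(to_csv(records))
```

With BeautifulSoup, the extraction step would typically use soup.select() with CSS selectors instead of a hand-written HTMLParser subclass. The normalisation and export steps would stay the same.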

Advantages of Collecting Data Using Food Data Scrape

Efficiency: Food Data Scrape can automate the collection of grocery data from various sources, including websites, price comparison platforms, and online marketplaces. This automation saves time and reduces the need for manual data entry.

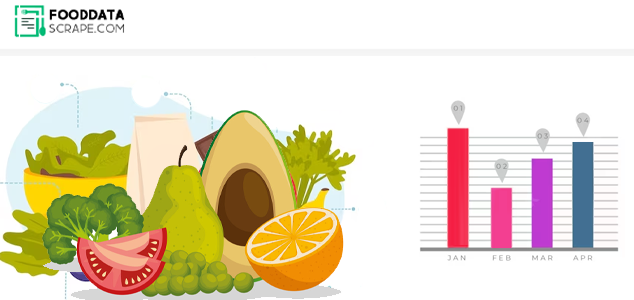

Real-Time Updates: These services can provide real-time updates on product availability, prices, and promotions. This information is crucial for businesses to stay competitive and make informed pricing and inventory decisions.

Expertise: The company specializes in web scraping techniques and has skilled professionals experienced in extracting data efficiently and accurately.

Scalability: They can handle large-scale data extraction tasks, ensuring you can obtain extensive datasets for in-depth analysis.

Data Quality: Professionals ensure that the scraped data is clean, accurate, and formatted correctly, reducing errors in your analysis.

Compliance: They are well-versed in legal and ethical aspects of web scraping, helping you avoid potential legal issues by adhering to terms of use and regulations.

Timeliness: They can provide real-time or scheduled data updates, allowing you to access the latest information for decision-making.

Customization: They can tailor scraping solutions to your requirements, ensuring you get the data you need in the preferred format.

Focus on Core Competencies: Outsourcing scraping tasks allows you to concentrate on your core business activities while experts handle data acquisition.

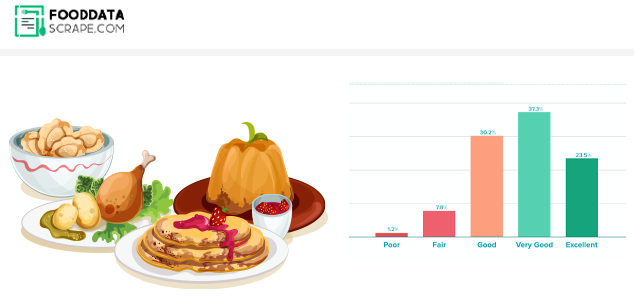

Enhanced Customer Experience: Access to accurate and up-to-date grocery data allows businesses to provide better customer experiences. Customers can easily find the products they want at competitive prices, leading to increased satisfaction and loyalty.

Final Outcome: We successfully scraped grocery data from BigBasket and used it to support our client. By using this data to refine their product selection, optimize pricing strategies, and improve overall business operations, our client gained a competitive edge in the grocery industry.