Client

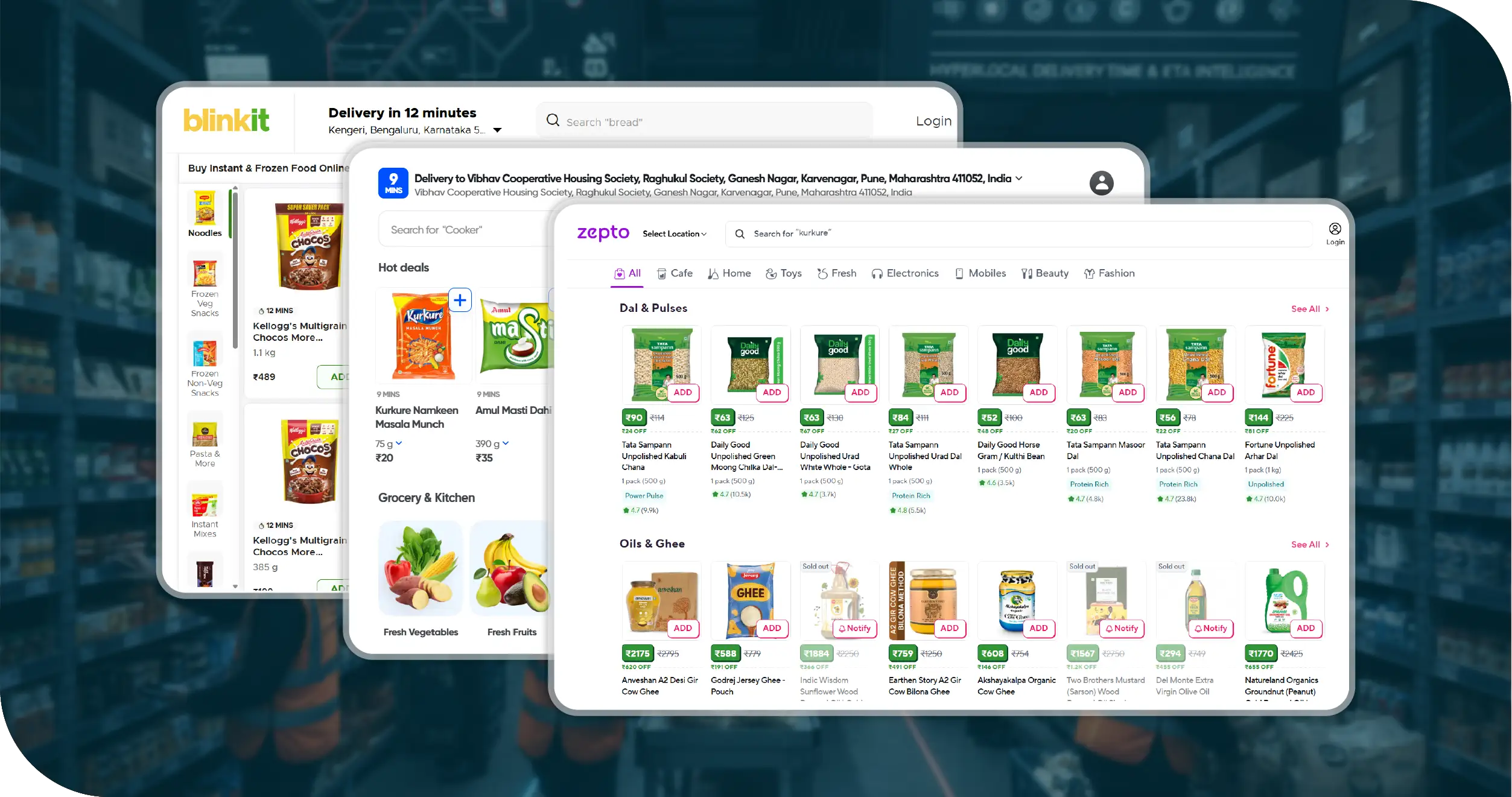

The client, a fast-growing retail intelligence company, needed a scalable system for mapping darkstore products across Swiggy Instamart, Zepto, and BlinkIt to support its competitive analytics workflows. Its internal teams could not scrape darkstore pricing and stock data from Instamart, Zepto, and BlinkIt at the scale required for daily reporting. The client wanted reliable datasets, produced by a darkstore product data scraper for Instamart and the other platforms, that would update automatically, detect catalogue changes, and maintain 100% SKU accuracy. They approached us to build a dependable solution with structured outputs aligned with their analytics models, dashboards, and decision-support tools.

Key Challenges

- Frequent Platform Layout Changes on Zepto: The client needed to scrape darkstore product data from Zepto, but the platform changed its layouts frequently, breaking earlier crawlers and causing data gaps. These gaps disrupted pricing intelligence workflows and reduced the accuracy of comparative SKU analysis across monitored darkstores.

- High-Frequency Inventory Updates on BlinkIt: The client struggled to extract darkstore product data from BlinkIt at scale because inventory changed several times a day. This made it difficult to capture real-time price changes, stock-outs, and product variations across multiple store locations.

- Limited Internal Expertise in App Data Scraping: The team lacked in-house expertise in grocery app data scraping. This led to inconsistent product mapping, missing fields, unstable scripts, and delays in producing the daily datasets that their analytics and supply-chain intelligence team relied on.

Key Solutions

- Robust Real-Time Scraping Pipeline: We built a pipeline on our grocery delivery scraping API services that handles dynamic HTML, API-based extraction, and rotating proxies. This kept real-time data collection running across all targeted darkstores without workflow failures.

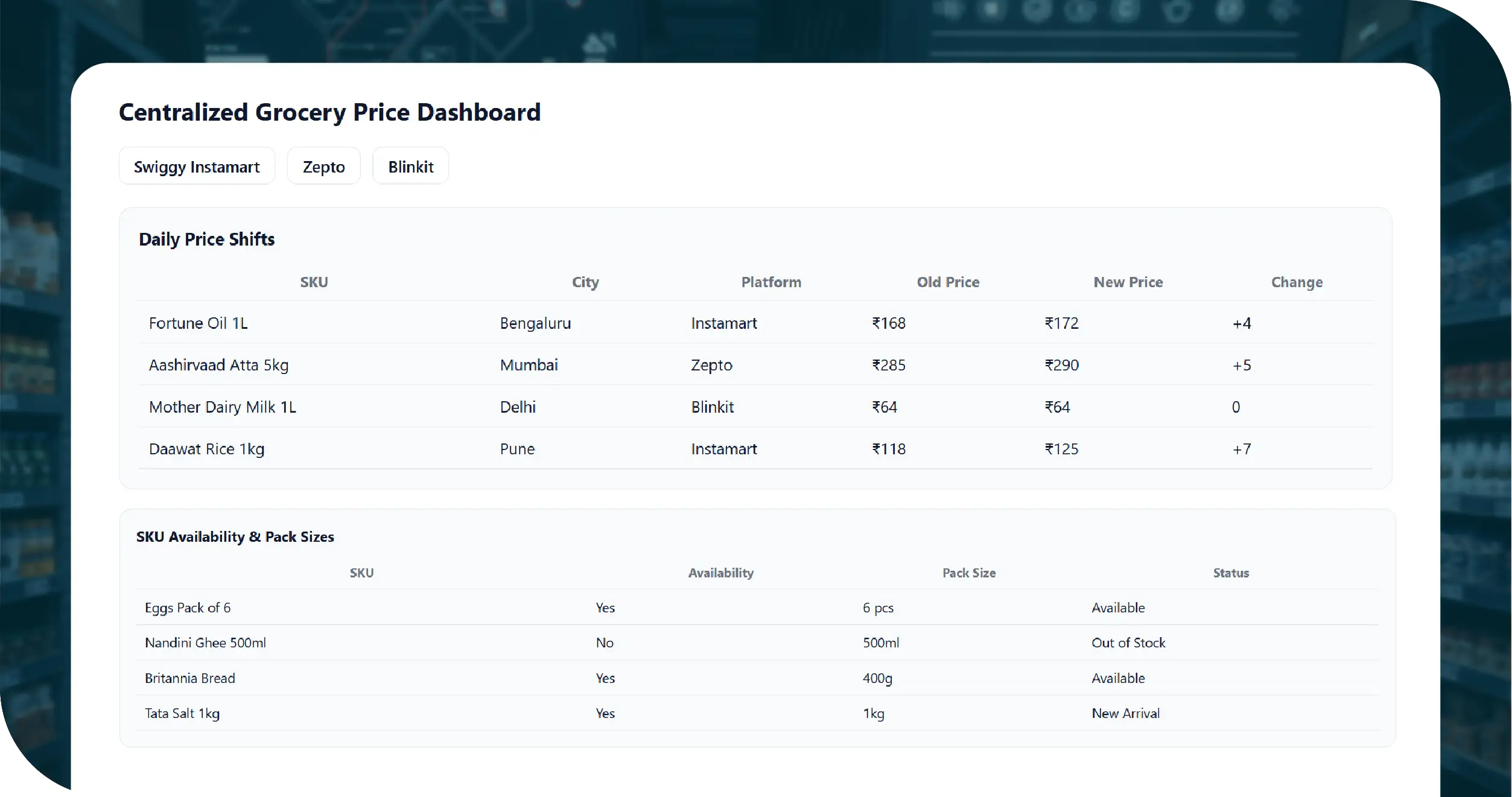

- Centralized Grocery Price Dashboard: We implemented an automated grocery price dashboard for centralized monitoring of daily price shifts, SKU availability, pack-size variations, and product additions or removals. It let the client visualize insights across multiple cities as soon as new data arrived.

- Real-Time Price Tracking Dashboard Integration: The system integrated a live grocery price tracking dashboard that recorded time-stamped changes and continuously tracked stock-outs, promotions, and replenishments. It delivered structured JSON outputs aligned with the client's BI ecosystem.
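The time-stamped change tracking described above can be illustrated with a minimal snapshot-diffing sketch in Python. It assumes that each scrape cycle yields a hypothetical `{sku_id: {"price": ..., "in_stock": ...}}` mapping per darkstore. The function name, field names, and event labels are illustrative assumptions, not the production schema.

```python
import json
from datetime import datetime, timezone

# Hypothetical snapshot shape: {sku_id: {"price": float, "in_stock": bool}}


def diff_snapshots(prev: dict, curr: dict, store_id: str, observed_at: str) -> list:
    """Compare two snapshots of one darkstore and return change events."""
    events = []
    for sku in sorted(prev.keys() | curr.keys()):
        before, after = prev.get(sku), curr.get(sku)
        base = {"store_id": store_id, "sku_id": sku, "observed_at": observed_at}
        if before is None:  # SKU appeared since the last cycle
            events.append({**base, "event": "added", "price": after["price"]})
        elif after is None:  # SKU disappeared from the catalogue
            events.append({**base, "event": "removed"})
        else:
            if before["price"] != after["price"]:
                events.append({**base, "event": "price_change",
                               "old_price": before["price"],
                               "new_price": after["price"]})
            if before["in_stock"] and not after["in_stock"]:
                events.append({**base, "event": "stock_out"})
            elif not before["in_stock"] and after["in_stock"]:
                events.append({**base, "event": "restock"})
    return events


# Example: two consecutive scrape cycles for one hypothetical store
prev = {"milk-500ml": {"price": 30.0, "in_stock": True},
        "bread-400g": {"price": 45.0, "in_stock": True}}
curr = {"milk-500ml": {"price": 32.0, "in_stock": True},
        "bread-400g": {"price": 45.0, "in_stock": False},
        "eggs-6pc": {"price": 60.0, "in_stock": True}}
now = datetime.now(timezone.utc).isoformat()
print(json.dumps(diff_snapshots(prev, curr, "store-001", now), indent=2))
```

Emitting only the deltas between cycles keeps the JSON output compact. Full snapshots can still be archived separately if a complete history is needed.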

Sample Data Table

| Platform | SKUs Tracked | Cities Covered | Daily Update Cycles | Price Change Events |
|---|---|---|---|---|
| Instamart | 4,200 | 18 | 6 | 1,240 |
| Zepto | 3,950 | 15 | 5 | 1,110 |
| BlinkIt | 4,500 | 20 | 7 | 1,380 |

Methodologies Used

- Modular and Resilient Data Pipelines: We implemented structured data pipelines built from modular crawlers that handle dynamic interfaces. This kept extraction consistent across platforms even when UIs or endpoints changed.

- Automated and Timely Extraction Schedules: We designed automated schedulers that run extraction cycles at predefined intervals. These cycles capture relevant real-time changes in darkstore inventories as they happen.

- Standardized Data Normalization Framework: We applied data normalization to unify extracted fields. It standardizes SKU names, prices, pack sizes, and inventory statuses for use in analytics, dashboards, and modeling.

- Multi-Layer Quality and Accuracy Validation: We applied multi-layer validation checks to verify data completeness and detect anomalies early. These checks maintain consistently high dataset accuracy across all marketplaces.

- Seamless BI and API Integration Delivery: We delivered datasets in multiple formats that integrated smoothly with the client's BI tools and supported API ingestion for internal applications.
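The normalization and validation steps above can be illustrated with a short sketch. It parses pack-size labels into base units, computes a unit price, and flags incomplete or anomalous records. The record schema, unit table, and 50% price-jump threshold are assumptions for this example only. Multipack labels such as "2 x 500 g" are not handled and simply return `None`.

```python
import re
from typing import Optional

# Hypothetical mapping from label units to canonical base units.
UNIT_FACTORS = {
    "g": ("g", 1), "gm": ("g", 1), "gms": ("g", 1), "kg": ("g", 1000),
    "ml": ("ml", 1), "l": ("ml", 1000), "ltr": ("ml", 1000),
    "pc": ("pc", 1), "pcs": ("pc", 1),
}
PACK_RE = re.compile(r"(\d+(?:\.\d+)?)\s*([a-z]+)")


def parse_pack_size(label: str) -> Optional[tuple]:
    """Parse labels like '1 Kg' or '500ml' into (quantity, base_unit)."""
    match = PACK_RE.search(label.lower())
    if not match or match.group(2) not in UNIT_FACTORS:
        return None  # unknown or multipack format; left for manual review
    unit, factor = UNIT_FACTORS[match.group(2)]
    return float(match.group(1)) * factor, unit


def normalize(raw: dict) -> dict:
    """Map one raw scraped record onto a unified (hypothetical) schema."""
    pack = parse_pack_size(raw.get("pack_size") or "")
    raw_price = raw.get("price")
    price_text = "" if raw_price is None else str(raw_price).replace("₹", "").replace(",", "").strip()
    price = float(price_text) if price_text else None
    qty, unit = pack if pack else (None, None)
    return {
        "platform": (raw.get("platform") or "").strip().lower(),
        "store_id": str(raw.get("store_id") or ""),
        "sku_id": str(raw.get("sku_id") or ""),
        "name": " ".join((raw.get("name") or "").split()).title(),  # collapse spaces
        "price": price,
        "qty": qty,
        "unit": unit,
        "unit_price": round(price / qty, 4) if price is not None and qty else None,
        "in_stock": bool(raw.get("in_stock")),
    }


def validate(rec: dict, prev_price: Optional[float] = None, max_jump: float = 0.5) -> list:
    """Return a list of problems; an empty list means the record passes."""
    required = ("platform", "store_id", "sku_id", "name", "price", "qty")
    problems = [f"missing {f}" for f in required if rec.get(f) in (None, "")]
    if rec["price"] is not None and rec["price"] <= 0:
        problems.append("non-positive price")
    # Anomaly check: flag relative price moves larger than max_jump (e.g. 50%)
    if prev_price and rec["price"] and abs(rec["price"] - prev_price) / prev_price > max_jump:
        problems.append("price jump above threshold")
    return problems
```

Records that fail `validate` can be quarantined for review rather than dropped. This keeps the published dataset clean without silently losing SKUs.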

Advantages of Collecting Data Using Food Data Scrape

- High-Accuracy, Real-Time Data Delivery: We provide highly accurate, real-time datasets across major quick-commerce platforms, capturing every price change, stock update, SKU variation, and product movement.

- Fully Automated and Reliable Data Updates: Our automated extraction system eliminates manual effort by running continuous update cycles, ensuring uninterrupted data flows and consistent darkstore intelligence.

- Scalable Infrastructure for Large SKU Volumes: We offer highly scalable solutions capable of tracking thousands of SKUs across multiple cities and platforms without performance issues.

- Powerful Dashboards for Clear Insights: Our custom dashboards enhance visibility by presenting real-time price changes, inventory updates, and SKU movements clearly for instant interpretation.

- Robust Monitoring for Maximum Stability: We maintain 24/7 monitoring systems with automated alerts and failover mechanisms to guarantee stable, uninterrupted data extraction.
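The failover behaviour described above, combined with the rotating proxies mentioned earlier, can be sketched as a retry loop with exponential backoff. The `fetch` callable, proxy list, and alert hook are hypothetical placeholders. They are passed in as parameters so the logic can be tested without network access. A real deployment would wrap an HTTP client and a monitoring or paging system.

```python
import random
import time
from typing import Callable, Optional, Sequence


class FetchError(Exception):
    """Raised when every retry across the proxy pool has failed."""


def fetch_with_failover(
    url: str,
    proxies: Sequence[str],
    fetch: Callable[[str, str], str],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
    alert: Callable[[str], None] = print,
) -> str:
    """Call fetch(url, proxy), rotating proxies and backing off between failures."""
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        proxy = proxies[attempt % len(proxies)]  # round-robin proxy rotation
        try:
            return fetch(url, proxy)
        except Exception as exc:  # broad catch is for the sketch only
            last_error = exc
            if attempt < max_attempts - 1:
                # exponential backoff (0.5 s, 1 s, 2 s, ...) plus up to 100 ms jitter
                sleep(base_delay * 2 ** attempt + random.uniform(0, 0.1))
    # All attempts failed: raise an alert, then surface the error to the caller
    alert(f"ALERT: {url} failed after {max_attempts} attempts: {last_error!r}")
    raise FetchError(url) from last_error
```

Injecting `sleep` and `alert` keeps the retry policy separate from I/O. This means it can be unit-tested with a stub `fetch` that fails on one proxy and succeeds on the next.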

Client Testimonial

“As the Head of Analytics, I can confidently say this partnership transformed our entire competitive intelligence workflow. The depth and accuracy of the datasets exceeded expectations. Their automated darkstore scraping system helped us monitor pricing, availability, and inventory shifts across multiple cities with remarkable precision. Our internal reporting improved dramatically, and we gained insights that were previously impossible to capture manually. The dashboards and structured outputs blended seamlessly with our BI systems, reducing hours of manual work. This solution has become a cornerstone of our pricing, merchandising, and procurement operations.”

Director of Data & Analytics

Final Outcome

Our collaboration gave the client unparalleled visibility into quick-commerce product ecosystems, backed by grocery pricing data intelligence that transformed how their teams monitored competitors and managed internal workflows. By delivering standardized, time-stamped grocery store datasets, we enabled them to automate their intelligence operations, eliminate manual errors, improve SKU mapping accuracy, and speed up decision-making considerably. The project delivered real-time insights across thousands of SKUs, multiple cities, and all three leading platforms. Their analytics, pricing, and procurement teams now operate with data-driven clarity, which has improved their market response time and strategic planning.