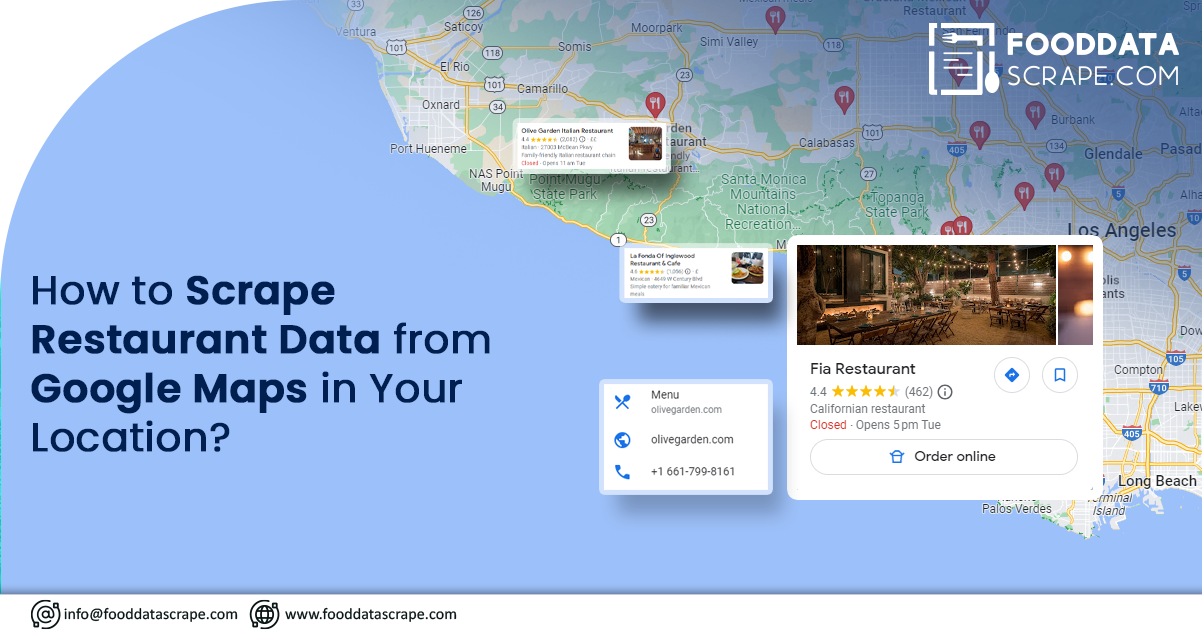

Since its launch in 2005, Google Maps has evolved well beyond point-to-point directions. Its features include satellite imagery, aerial photography, real-time traffic updates, and route planning for cycling, driving, and public transport. It is also a valuable resource for discovering local restaurants and gathering information about them, and scraping that data gives restaurants access to essential food-related information.

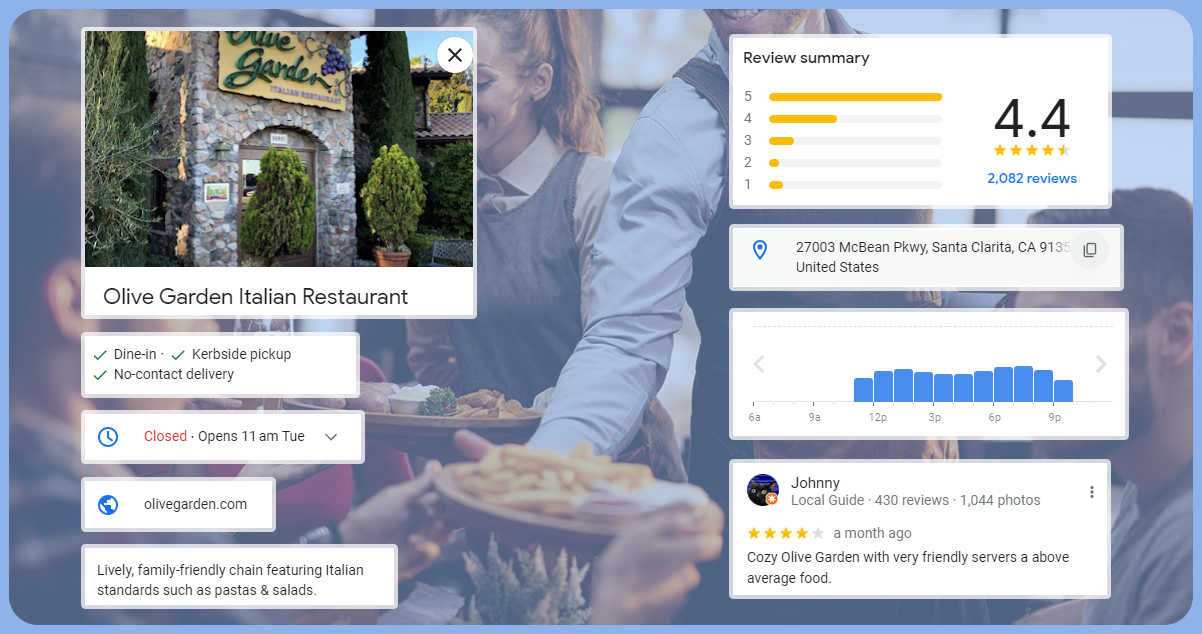

Imagine you've recently relocated to a new city and are looking for a good restaurant nearby. Typically, you would type "restaurants" into Google Maps to find options that fit your budget and have excellent reviews. Google Maps also shows restaurant opening hours and lets users suggest edits so the information stays accurate. Collecting these data points, including customer reviews, can be immensely beneficial when it is done systematically and the results are organized in a usable format.

In this article, we'll build a scraping program that extracts restaurant data from Google Maps for a specific location. When run, the program prompts the user for the city to retrieve restaurant data from and the number of pages to scrape. It gathers restaurant names, ratings, the number of Google reviews, locations, phone numbers, and opening hours, and finally exports the collected data to an Excel file for easier reading.

The Importance of Having a List of Restaurants and Their Contact Information

A restaurant's menu is the initial point of contact with potential customers, allowing them to explore offerings from their homes before deciding to dine in. It's vital to provide a menu that offers perceived value, with a wide range of choices, customization options, and creative offerings to attract patrons. But how can you determine the menu that resonates best with your audience? The answer lies in leveraging restaurant menu scraping and analyzing online menus.

A restaurant scraping tool can provide a competitive edge. Focus on establishments with high foot traffic and positive reviews, and consider extracting data from web pages that consolidate menus. Study dish names and descriptions to judge which dishes are likely top sellers and which appeal to your target audience. You can also analyze how these restaurants balance their selection across different courses.

However, before delving into restaurant data scraping, it's crucial to understand your target audience by creating buyer personas that outline their consumption habits and preferences. Individual restaurants may cater to distinct groups, such as vegetarians, vegans, health-conscious diners, or fine-dining enthusiasts, and age group also plays a significant role.

While you might have intuitive ideas about what to include or exclude, data-supported insights into how people interact with menus at other restaurants are invaluable. A restaurant data scraper can be your crucial advantage in this endeavor.

If this seems overwhelming, remember that restaurant data scraping services are available to assist you in navigating this process efficiently.

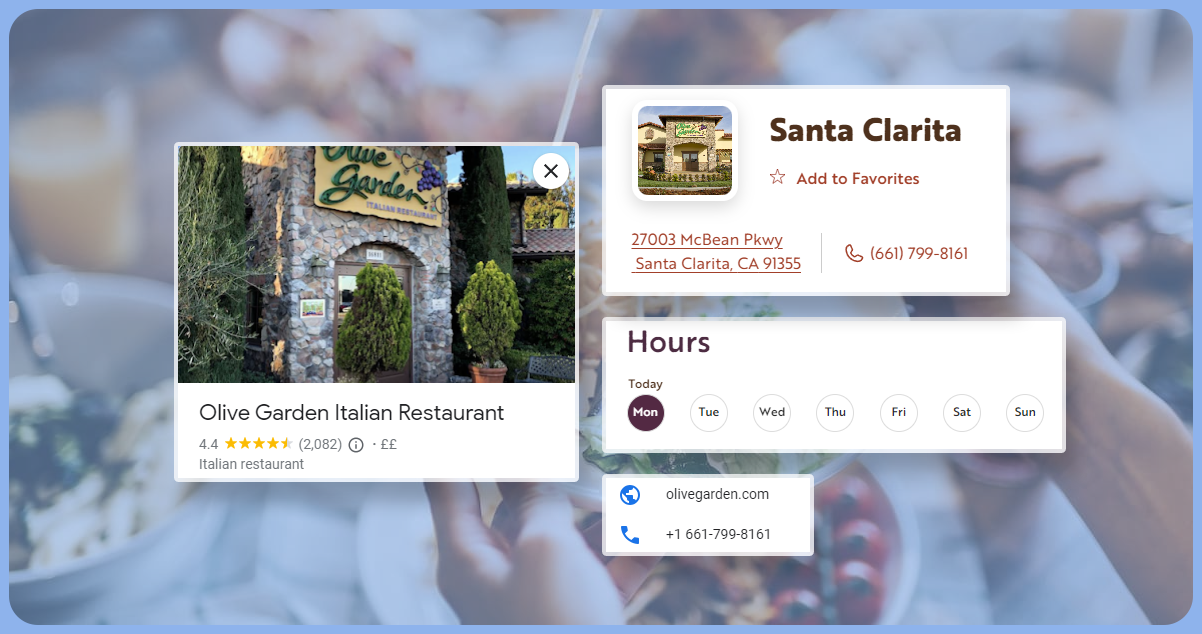

List Of Data Fields

- Restaurant Name

- Food Menu

- Food Range

- Ratings

- Total Reviews

- Price Range

- Establishment Type

- Complete Address

- Restaurant Timing

- Contact Details

- Website, if available

- Photos

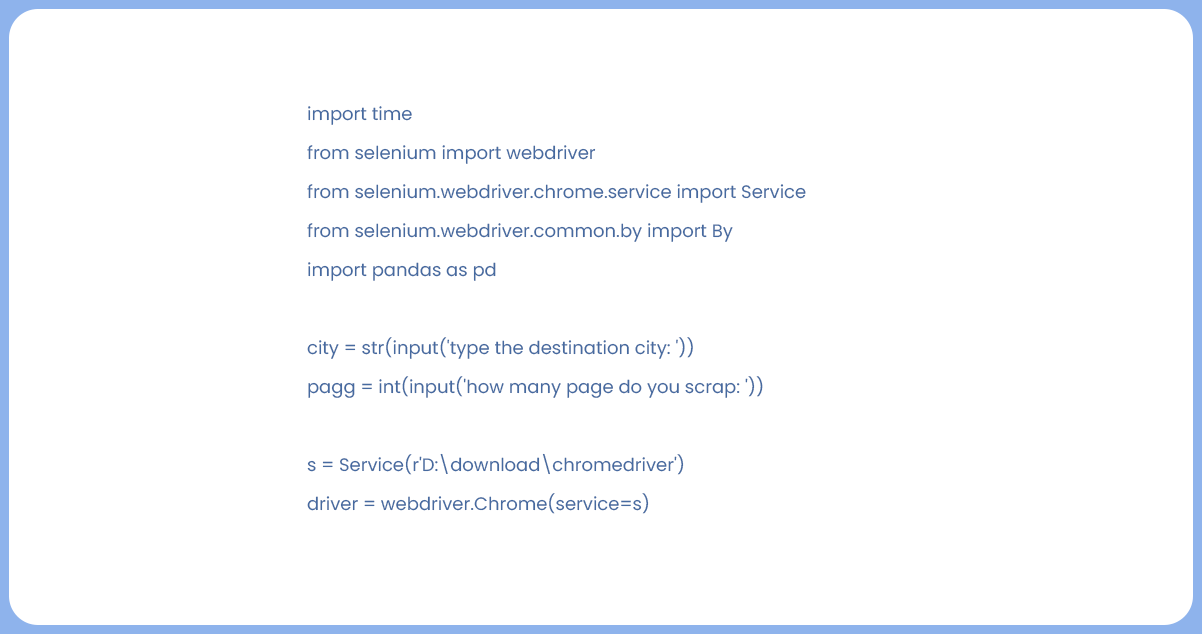

To scrape restaurant data from Google Maps for your location, we first install the necessary packages: selenium for web scraping (it drives Chrome through a separately downloaded webdriver executable, ChromeDriver), and pandas and openpyxl for writing the data to an Excel file. We then import these packages, along with Python's built-in 'time' module, which we use to pause the program while page elements load.

Next, we create two variables: 'city', a string the user enters, and 'page', the number of result pages from which to extract restaurant data. We then create a variable, 's', holding Selenium's Service object, which specifies the path to the downloaded webdriver program. Lastly, we create a variable to hold the webdriver itself: since we're using Chrome as our Selenium browser, we configure Chrome to use 's' as the location of its webdriver file. This is the first stage of our code.
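The first stage might look roughly like the sketch below. It assumes Selenium 4, where the driver path is wrapped in a `Service` object and passed as `service=`. The helper names `parse_inputs` and `make_driver`, and the input validation, are hypothetical additions for illustration rather than the article's exact code.

```python
def parse_inputs(city_text: str, page_text: str) -> tuple[str, int]:
    """Validate the city name and page count typed in by the user."""
    city = city_text.strip()
    if not city:
        raise ValueError("city must not be empty")
    page = int(page_text)  # raises ValueError for non-numeric input
    if page < 1:
        raise ValueError("page must be at least 1")
    return city, page


def make_driver(driver_path: str):
    """Start Chrome through a downloaded ChromeDriver executable (Selenium 4)."""
    # Imported inside the function so parse_inputs() works without Selenium.
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service

    s = Service(driver_path)  # 's' tells Selenium where the driver file lives
    return webdriver.Chrome(service=s)


# Interactive use (the driver path is a placeholder you must replace):
#   city, page = parse_inputs(input("City: "), input("Number of pages: "))
#   driver = make_driver("/path/to/chromedriver")
```

With Selenium 4.6 or later, Selenium Manager can usually locate a matching driver on its own, so `webdriver.Chrome()` without a `Service` is often enough.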

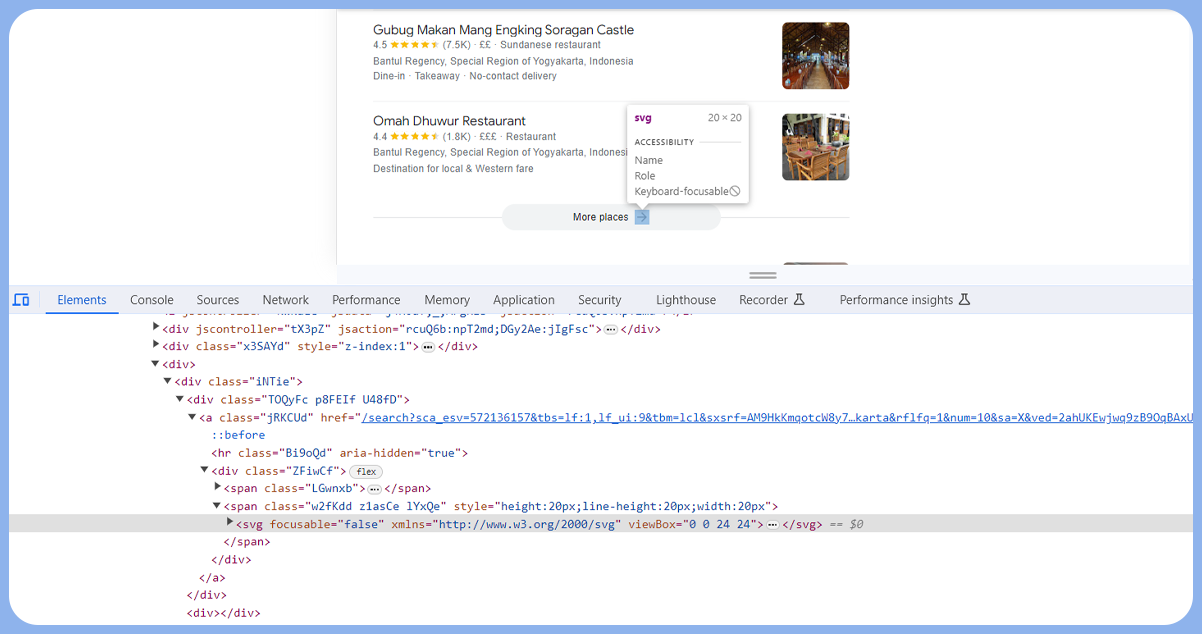

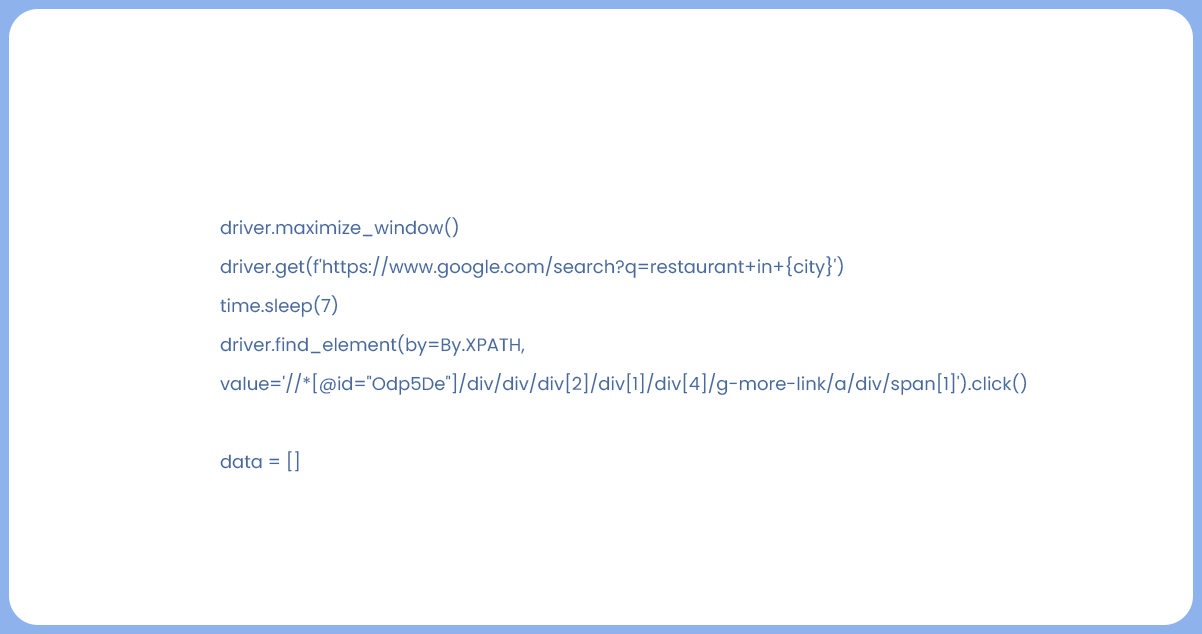

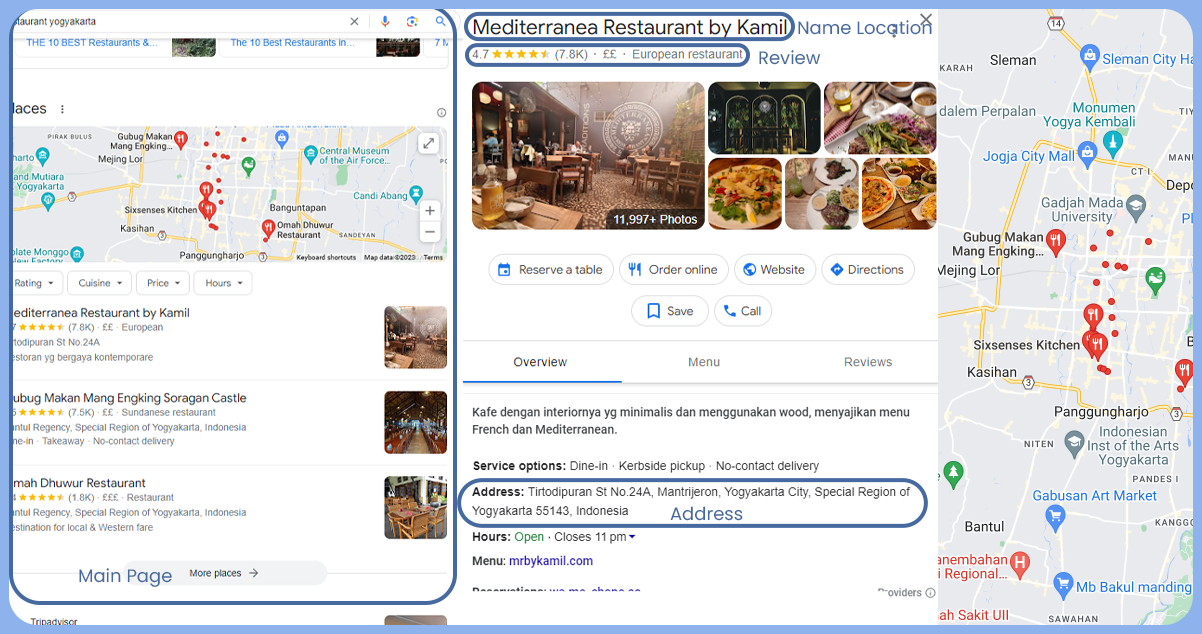

In the second stage of our code, we run our Selenium program. It navigates to the restaurant search URL, which we've customized by inserting the 'city' variable, and then pauses for 7 seconds using the 'sleep' function from the 'time' package so the webpage can load fully.

After this pause, we locate the button shown in the image using an XPath search with the webdriver's 'find element' method; the correct XPath is the one ending at the 'click-to-press' button.

Finally, we create a 'data' variable, initialized as an empty list, to hold the restaurant data scraped from Google Maps until we export it to an Excel file.
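A sketch of the second stage follows. The search-URL pattern (`https://www.google.com/maps/search/` followed by the query) and the function names `build_search_url` and `open_search` are assumptions; the button XPath is left as a parameter because the article takes it from the screenshot, and it changes whenever Google updates the page.

```python
import time
from urllib.parse import quote_plus


def build_search_url(city: str) -> str:
    """Insert the city into a Google Maps search URL (assumed URL pattern)."""
    query = quote_plus(f"restaurants in {city}")  # spaces become '+'
    return f"https://www.google.com/maps/search/{query}"


def open_search(driver, city: str, button_xpath: str, wait: float = 7.0) -> None:
    """Open the search page, wait for it to load, then click the button."""
    from selenium.webdriver.common.by import By  # needs Selenium installed

    driver.get(build_search_url(city))
    time.sleep(wait)  # fixed 7-second pause, as described above
    driver.find_element(By.XPATH, button_xpath).click()


data: list[dict] = []  # one dictionary per restaurant will be appended here
```

A fixed `time.sleep` is simple but brittle. Selenium's explicit waits (`WebDriverWait` combined with `expected_conditions.element_to_be_clickable`) wait only as long as the element actually needs.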

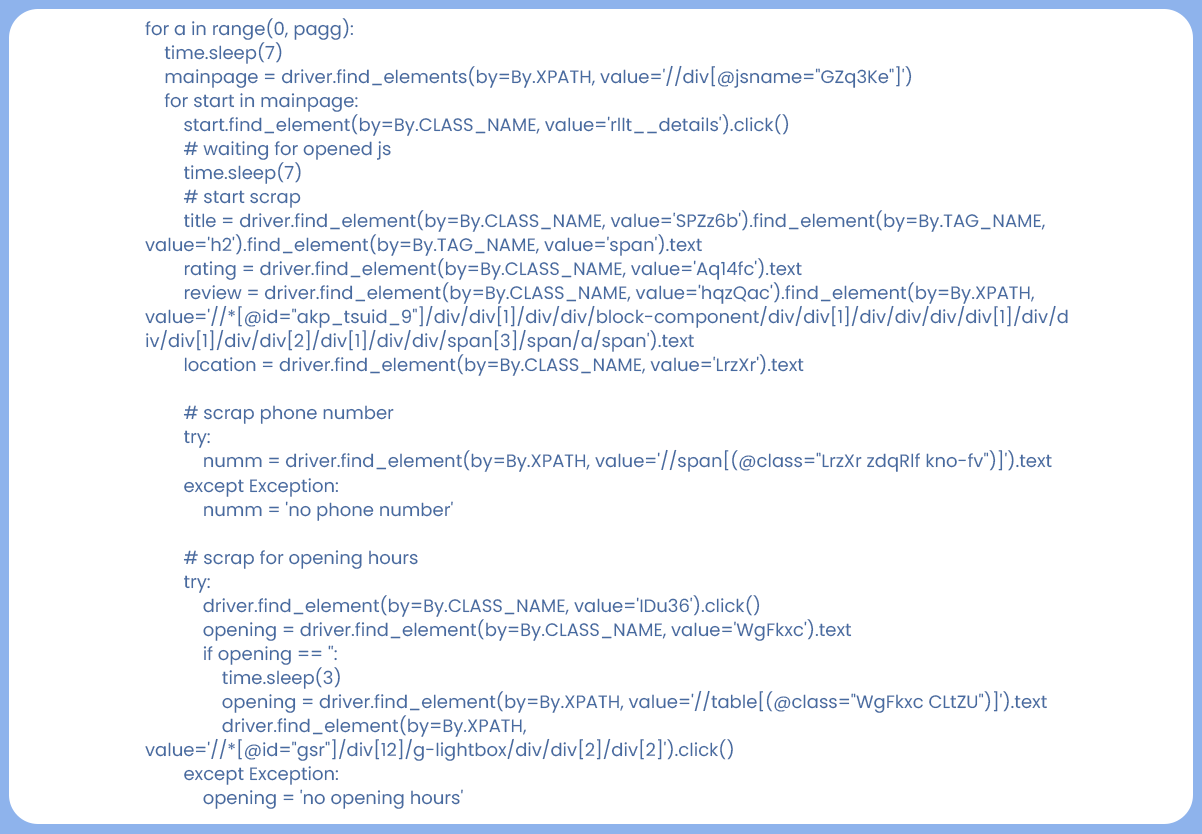

Now that we're on Google Maps, it's time to scrape the essential data. To do this, we create a for loop that runs from zero up to the value of the 'page' variable, which lets us scrape multiple result pages if desired.

Inside the loop, we first pause for 7 seconds with the 'sleep' function so the webpage loads properly. We then scrape the necessary data as follows.

As illustrated in the image above, we begin by clicking the main page element, located with an XPath search. We then set up a second, inner loop to retrieve the required data.

In each iteration of this inner loop, we click the main page element, pause for 7 seconds so the webpage loads fully, and then collect the restaurant's name, rating, number of reviews, and location.
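The inner loop can be sketched as follows. The XPaths for each listing and each field are hypothetical parameters you would copy from Chrome DevTools, and reading every field through a single `field_xpaths` dictionary is a simplification, not necessarily how the original code is organized. The `By` fallback exists only so the sketch can be tried without Selenium installed.

```python
import time

try:
    from selenium.webdriver.common.by import By
except ImportError:  # fallback so the sketch can run without Selenium
    class By:
        XPATH = "xpath"  # the same locator string Selenium 4 uses


def scrape_listings(driver, listing_xpaths, field_xpaths, wait=7.0):
    """Click each listing, wait for its details, and read the text fields.

    listing_xpaths: XPaths of the result entries to click (hypothetical).
    field_xpaths: maps a field name (e.g. "name", "rating") to its XPath.
    """
    rows = []
    for listing_xpath in listing_xpaths:
        driver.find_element(By.XPATH, listing_xpath).click()
        time.sleep(wait)  # let the details panel load
        rows.append({
            field: driver.find_element(By.XPATH, xpath).text
            for field, xpath in field_xpaths.items()
        })
    return rows
```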

For the telephone number, we use a try-except block, because some restaurants don't list one. The try section attempts to extract the number; if it isn't available, the except section handles the error and assigns the value "no telephone number".
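A minimal sketch of the telephone try-except, assuming the phone number's XPath is passed in as a hypothetical `phone_xpath` parameter. Catching Selenium's `NoSuchElementException` specifically, rather than every exception, is a choice made here so that unrelated errors still surface; the fallback classes only let the sketch run without Selenium.

```python
try:
    from selenium.common.exceptions import NoSuchElementException
    from selenium.webdriver.common.by import By
except ImportError:  # fallbacks so the sketch can run without Selenium
    class NoSuchElementException(Exception):
        pass

    class By:
        XPATH = "xpath"


def get_phone(driver, phone_xpath: str) -> str:
    """Return the phone number text, or a placeholder if the element is missing."""
    try:
        return driver.find_element(By.XPATH, phone_xpath).text
    except NoSuchElementException:
        # Some restaurants list no phone number at all.
        return "no telephone number"
```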

Opening hours are collected differently from the previous fields because their format varies. Again we use a try-except block: in the try section, we click the clock icon to reveal the opening hours, and if the icon doesn't exist, we use an alternative method. If no opening hours data is available at all, the except section assigns the value "no opening hours".

This completes the third stage of our code.
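The opening-hours logic can be sketched as a chain of attempts. The article does not say what its "alternative method" is, so reading a separate `fallback_xpath` is purely a hypothetical stand-in, as are the `clock_xpath` and `hours_xpath` parameter names.

```python
try:
    from selenium.common.exceptions import NoSuchElementException
    from selenium.webdriver.common.by import By
except ImportError:  # fallbacks so the sketch can run without Selenium
    class NoSuchElementException(Exception):
        pass

    class By:
        XPATH = "xpath"


def get_opening_hours(driver, clock_xpath: str, hours_xpath: str, fallback_xpath: str) -> str:
    """Try the clock icon first, then an alternative element, then a placeholder."""
    # First attempt: click the clock icon to expand the hours, then read them.
    try:
        driver.find_element(By.XPATH, clock_xpath).click()
        return driver.find_element(By.XPATH, hours_xpath).text
    except NoSuchElementException:
        pass
    # Alternative method (hypothetical): read hours shown without the clock icon.
    try:
        return driver.find_element(By.XPATH, fallback_xpath).text
    except NoSuchElementException:
        return "no opening hours"
```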

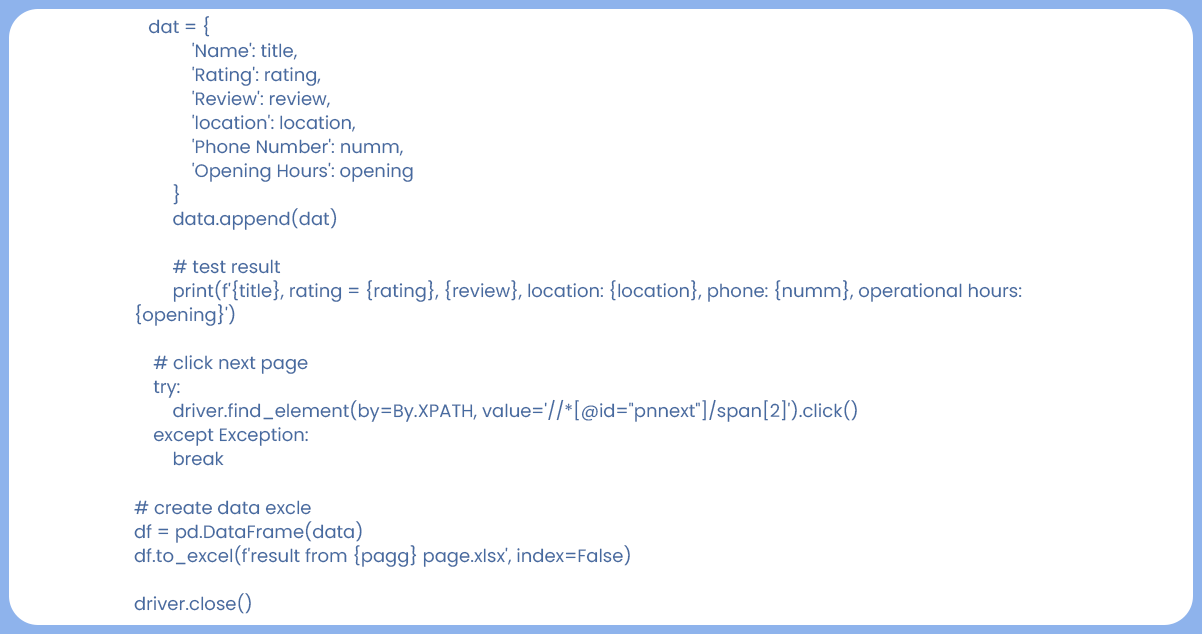

In the final stage, for each restaurant we create a variable of dictionary type that stores the scraped fields, and we append it to the 'data' list created earlier. Optionally, we can print each dictionary to verify that the extracted data is accurate.
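One way to build and store each record is sketched below. The dictionary keys are hypothetical column names (they become the Excel headers later), and the sample values passed to `make_record` are made up purely for illustration.

```python
def make_record(name, rating, reviews, location, phone, hours) -> dict:
    """Bundle one restaurant's scraped fields into a dictionary."""
    return {
        "Name": name,
        "Rating": rating,
        "Reviews": reviews,
        "Location": location,
        "Telephone": phone,
        "Opening hours": hours,
    }


data = []  # the list created in the second stage
record = make_record("Example Bistro", "4.6", "(1,204)", "12 Main St",
                     "no telephone number", "no opening hours")
data.append(record)
print(record)  # optional check of the extracted values
```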

Back in the first (outer) loop, we add a step that clicks the "next page" button, if it exists, wrapped in a try-except block so that a missing button does not stop the program.
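The outer loop and the "next page" step might be combined as below. `click_next_page`, `scrape_pages`, the `next_xpath` parameter, and the `scrape_current_page` callback (which would wrap the per-page scraping shown earlier) are hypothetical names. Stopping early when no "next page" button exists is a design choice made here, not something the article states; it avoids scraping the last page twice.

```python
import time

try:
    from selenium.common.exceptions import NoSuchElementException
    from selenium.webdriver.common.by import By
except ImportError:  # fallbacks so the sketch can run without Selenium
    class NoSuchElementException(Exception):
        pass

    class By:
        XPATH = "xpath"


def click_next_page(driver, next_xpath: str) -> bool:
    """Click the "next page" button if it exists; report whether it did."""
    try:
        driver.find_element(By.XPATH, next_xpath).click()
        return True
    except NoSuchElementException:
        return False


def scrape_pages(driver, page: int, next_xpath: str, scrape_current_page, wait: float = 7.0) -> list:
    """Outer loop: scrape up to `page` result pages, moving forward between them."""
    data = []
    for _ in range(page):  # runs page times: 0, 1, ..., page - 1
        time.sleep(wait)  # let the result list load
        data.extend(scrape_current_page(driver))
        if not click_next_page(driver, next_xpath):
            break  # no further pages to visit
    return data
```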

After scraping, we use the pandas package to export the collected data to an Excel file, named according to the number of pages scraped via the 'page' variable defined earlier.
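A minimal export sketch with pandas. The file-name pattern `restaurants_<page>_pages.xlsx` is an assumption, since the article only says the name is based on the page count. pandas writes `.xlsx` files through openpyxl, which is why that package is installed; pandas is imported inside the function here only so `excel_filename` can be used on its own.

```python
def excel_filename(page: int) -> str:
    """Name the output file after the number of pages scraped (assumed pattern)."""
    return f"restaurants_{page}_pages.xlsx"


def export_to_excel(data: list[dict], page: int) -> str:
    """Write the scraped records to an Excel file and return its name."""
    import pandas as pd  # writing .xlsx also requires openpyxl

    df = pd.DataFrame(data)  # one row per restaurant dictionary
    filename = excel_filename(page)
    df.to_excel(filename, index=False)  # omit the numeric index column
    return filename
```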

This concludes our code, which scrapes restaurant data from Google Maps and stores it in an organized Excel file.

The program executes as intended, efficiently gathering restaurant data, including names, ratings, review counts, telephone numbers, and opening hours for locations of our choice.

For more in-depth information, feel free to contact Food Data Scrape today! We're also here to assist you with any of your needs related to Food Data Aggregator and Mobile Restaurant App Scraping services. We also provide advanced insights and analytics that offer valuable data-driven perspectives to drive informed decision-making and enhance business strategies.
