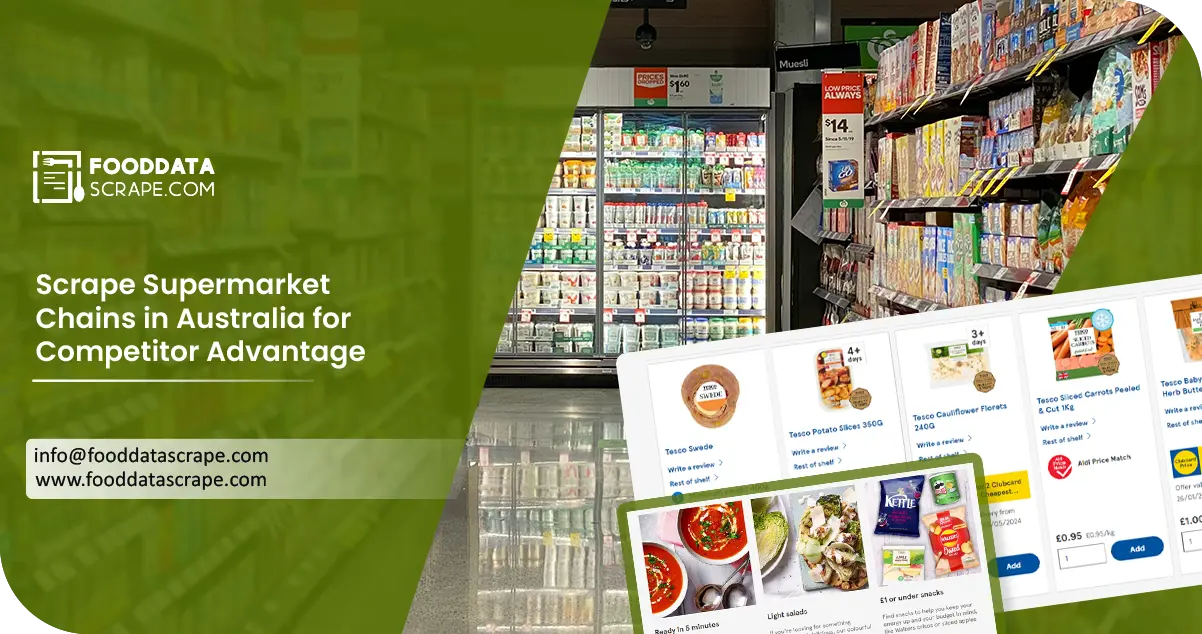

The Client

Our client is a prominent player in the online supermarket business. They used our supermarket data scraping services to collect supermarket data across Australia. The objective was to gather data from almost all store locations and identify where returns were highest. With this data, the client was able to optimize their pricing strategies, track market trends, and make informed decisions about their operations.

Key Challenges

Geographic Differences: Website layouts and data presentation varied by region, which made it significantly harder to collect uniform data across locations.

Privacy Regulations: Personally identifiable information (PII) collected during scraping tasks had to be carefully managed to comply with strict data privacy regulations in Australia.

Data Quality Assurance: Ensuring the accuracy and completeness of the collected data was a challenge that required stringent validation and verification protocols.

Technical Constraints: Bandwidth restrictions and server capacity constraints limited the speed and scalability of the scraping process, necessitating optimization strategies for efficient data collection.

Key Solutions

Collaborative Efforts with Supermarket Chains: Establishing solid partnerships with supermarket chains allowed us to easily access structured data formats, streamlining the scraping process and maintaining data consistency.

Adherence to Compliance Measures: Implementing robust data privacy and security protocols ensured total compliance with Australian regulations. It safeguarded sensitive information during all scraping activities.

Efficient Handling of Dynamic Content: Our team developed specialized algorithms to handle dynamic content, allowing us to capture real-time updates accurately. It ensured the integrity of the scraped data.

Stringent Quality Control Procedures: To ensure the accuracy and reliability of the scraped data, we implemented an advanced quality control framework. It comprised both manual checks and automated validation routines, applied before analysis and interpretation.
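As an illustration of what an automated validation routine of this kind might look like, here is a minimal Python sketch. The record fields (`store_id`, `product_name`, `price_aud`), the three checks, and the sample values are assumptions made for this example, not the framework actually used in the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductRecord:
    # Hypothetical record layout, assumed for illustration only.
    store_id: str
    product_name: str
    price_aud: float


def validate(records):
    """Split records into (valid, rejected); rejected holds (record, reason) pairs."""
    valid, rejected = [], []
    seen = set()
    for rec in records:
        # Normalise the name so case and whitespace differences count as duplicates.
        key = (rec.store_id, rec.product_name.strip().lower())
        if not rec.store_id or not rec.product_name.strip():
            rejected.append((rec, "missing field"))
        elif rec.price_aud <= 0:
            rejected.append((rec, "non-positive price"))
        elif key in seen:
            rejected.append((rec, "duplicate"))
        else:
            seen.add(key)
            valid.append(rec)
    return valid, rejected


# Made-up sample data to exercise each check.
sample = [
    ProductRecord("syd-001", "Full Cream Milk 2L", 3.10),
    ProductRecord("syd-001", "full cream milk 2l", 3.10),  # duplicate after normalisation
    ProductRecord("mel-014", "", 4.50),                    # missing product name
    ProductRecord("bne-007", "Wholemeal Bread", -1.00),    # invalid price
]
valid, rejected = validate(sample)
```

Rejected records keep their reason, so a manual reviewer can inspect them rather than having them silently dropped.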

Methodologies Used

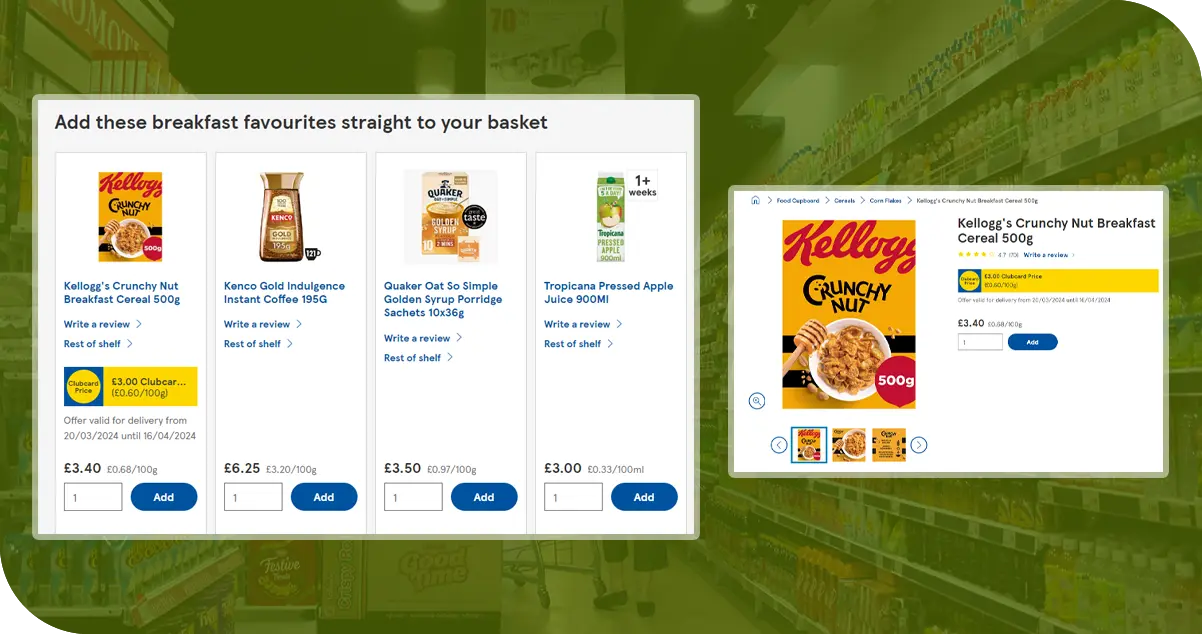

Targeted URL Identification: Our team meticulously identified the URLs of each supermarket chain's website pages, focusing on store locators and product listings. This allowed us to run the scraping process in a targeted and efficient manner.
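A simple way to picture this step is a filter that sorts candidate URLs into store-locator and product-listing pages. The sketch below assumes a hypothetical domain (`supermarket.example`) and hypothetical path prefixes; real supermarket sites will use different URL schemes.

```python
from urllib.parse import urlparse

# Hypothetical path prefixes; each real site would need its own mapping.
PAGE_TYPES = {
    "store_locator": ("/stores", "/store-locator"),
    "product_listing": ("/products", "/browse"),
}


def classify_url(url):
    """Return the page type for a URL, or None if it is not a scraping target."""
    path = urlparse(url).path.lower()
    for page_type, prefixes in PAGE_TYPES.items():
        if any(path.startswith(prefix) for prefix in prefixes):
            return page_type
    return None


candidates = [
    "https://supermarket.example/store-locator/nsw",
    "https://supermarket.example/products/dairy?page=2",
    "https://supermarket.example/about-us",
]

# Keep only the URLs that map to a known page type.
targets = {}
for url in candidates:
    page_type = classify_url(url)
    if page_type is not None:
        targets[url] = page_type
```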

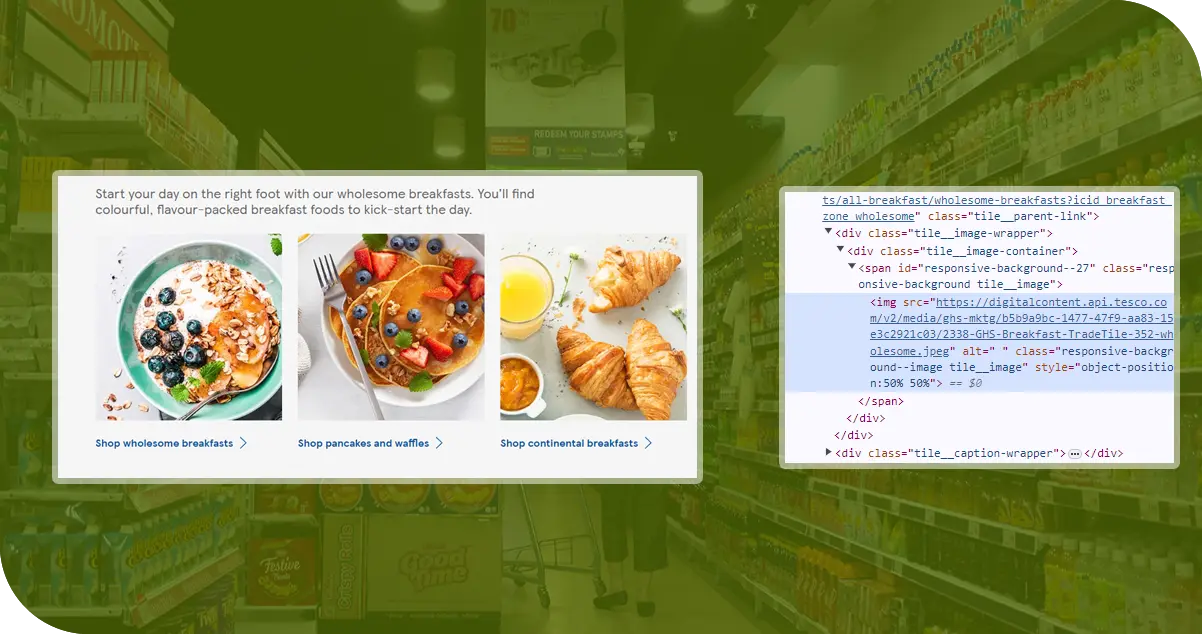

Web Scraping Tools: We used advanced scraping tools like BeautifulSoup and Scrapy to extract data from the HTML structure of supermarket websites. Our team also developed custom scripts to collect the relevant data elements accurately.
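To show the general idea of extracting fields from HTML structure, the sketch below uses Python's standard-library `html.parser` as a dependency-free stand-in for BeautifulSoup or Scrapy. The markup, class names (`product`, `name`, `price`), and product values are invented for this example and do not reflect any real supermarket site.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect name and price from <li class="product"> items (assumed markup)."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class") or ""
        if tag == "li" and css_class == "product":
            self.products.append({})  # start a new product record
        elif tag == "span" and css_class in ("name", "price") and self.products:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


# Invented sample page fragment.
page_html = """
<ul>
  <li class="product"><span class="name">Full Cream Milk 2L</span><span class="price">$3.10</span></li>
  <li class="product"><span class="name">Wholemeal Bread</span><span class="price">$4.50</span></li>
</ul>
"""

parser = ProductParser()
parser.feed(page_html)
products = parser.products
```

With BeautifulSoup, the same extraction would typically be expressed with CSS selectors rather than parser callbacks; the standard-library version is used here only to keep the example self-contained.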

Automated Scraping Processes: Our team efficiently gathered data from multiple supermarket websites by implementing automated scraping processes. This ensured consistency and accuracy across all locations.

Dynamic Content Handling: We used dynamic content handling techniques to collect real-time updates and navigate dynamic elements on supermarket websites. This ensured the accuracy of the scraped data.

Anti-Scraping Measures: Certain supermarket websites have anti-scraping measures in place. We strategically used IP rotation and CAPTCHA-solving services to overcome these challenges and keep data extraction running smoothly.

Advantages of Collecting Data Using Food Data Scrape

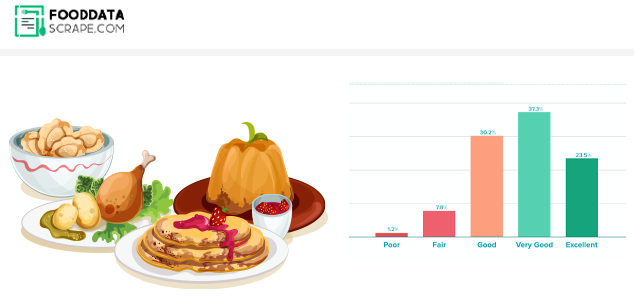

Detailed Analysis: Food Data Scrape offers detailed analysis of the food industry. It provides valuable insights into market trends, competitor strategies, and consumer choices.

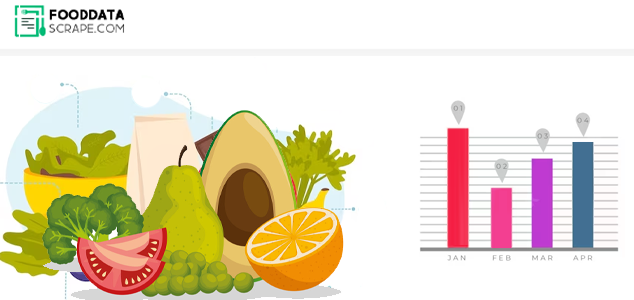

Timely Updates: The company offers timely updates on pricing, promotions, and availability through automated scraping procedures. This helps businesses stay current with the latest market trends and make informed, data-based decisions.

Strategic Advantage: Gathering data from various food-related sources equips businesses with strategic intelligence to comprehend market dynamics and measure their performance against competitors.

Tailored Solutions: The company provides customized data collection solutions for businesses. These solutions can be tailored to meet specific requirements and preferences. The goal is to provide actionable insights that are relevant to their operations.

Scalable Operations: The company's highly scalable infrastructure handles large amounts of data from various sources with ease. This lets businesses of any size benefit.

Efficient Cost Management: Food Data Scrape helps businesses cut costs by automating data collection, making the process more efficient and accurate. It reduces the need for manual collection, saving time and resources in the long run.

Final Outcomes: Our team effectively collected data from multiple supermarket chains across Australia. The scraped data gave our client valuable information for optimizing their operations. Through detailed analysis of pricing strategies, product availability, and competitor activity, our client was able to reinforce their competitive advantage and double their profitability. Our customized data scraping solutions enabled them to make data-driven decisions and effectively achieve their business objectives.