Data is central to decision-making in the fast-moving consumer goods (FMCG) industry. It provides information about marketing effectiveness, pricing policies, and consumer trends that helps businesses make informed decisions. Because supermarkets are the main distribution channel for FMCG, scraping and analyzing their data is a valuable source of insight. Through web scraping techniques, businesses can examine supermarket data and break down trends by chain, region, and individual store.

Using supermarket data scraping in Australia to understand FMCG across regions, businesses can collect data on product lines, price variation, marketing activities, and consumer feedback. Companies can then build a fuller picture of market conditions and, most importantly, pick out key trends and compare ratings against competitors.

Supermarket data scraping equips businesses to make data-driven decisions, which help them calibrate pricing strategies and target specific consumer groups. Streamlining the collection of these insights helps companies optimize their strategies and stay ahead of fierce competition in the FMCG industry.

Reasons to Scrape Supermarket Data and Compare FMCG across Regions and Stores

Scraping supermarket data to compare fast-moving consumer goods (FMCG) across regions and stores is strategically important for businesses for several reasons:

- Market Insights: Supermarket data scraping services provide detailed information on product availability, price trends, and consumer preferences across geographic regions and between supermarkets. This view of the market lets decision-makers see what is happening and choose an appropriate market strategy.

- Competitive Intelligence: By analyzing competing FMCG products, companies can gather solid market intelligence. They can identify market gaps, track competitors' pricing strategies, and independently evaluate product performance, which helps them keep a competitive edge and adjust their offerings over time.

- Optimized Pricing Strategies: Pricing information collected from supermarkets allows organizations to fine-tune their pricing approach. By comparing prices for the same products across regions and stores, a company can adjust its pricing to match market demand while remaining competitive and profitable.

- Supply Chain Optimization: A supermarket data scraper supports supply chain management by giving visibility into product availability and stock levels across supermarket branches. This helps firms plan their supply chain processes, avoid out-of-stock problems, and improve efficiency.

- Consumer Behavior Analysis: By studying the scraped data, businesses can learn about consumers' behavior and preferences. They can refine marketing campaigns and product offerings based on buying habits, the most popular products, and consumer feedback, aligning their efforts with actual consumer demand.

- Identification of Growth Opportunities: Comparing FMCG products across regions and individual stores using supermarket data extraction helps businesses identify new trends and untapped market opportunities. With this knowledge, companies can find previously overlooked market segments, develop new products, and renew business growth.

- Strategic Decision-Making: Supermarket data extraction supports data-driven choices at every level, from executives to frontline staff. Whether the decision is entering new markets, optimizing product assortments, or negotiating supplier contracts, the relevant data provides actionable intelligence. Up-to-date analytics adds a competitive edge.

In short, comparing supermarket data for FMCG across regions and stores gives business professionals actionable insights that build competitive advantage, inform strategic planning, and fuel growth.

How to Initiate Supermarket Chain Data Scraping?

Australia's supermarket sector is shaped by chains with different backgrounds and ownership, including Woolworths, Coles, Aldi, and smaller regional retailers. Before scraping, determine which chains and which locations you need to cover.

Successful web scraping requires careful planning and execution. Here's a systematic approach:

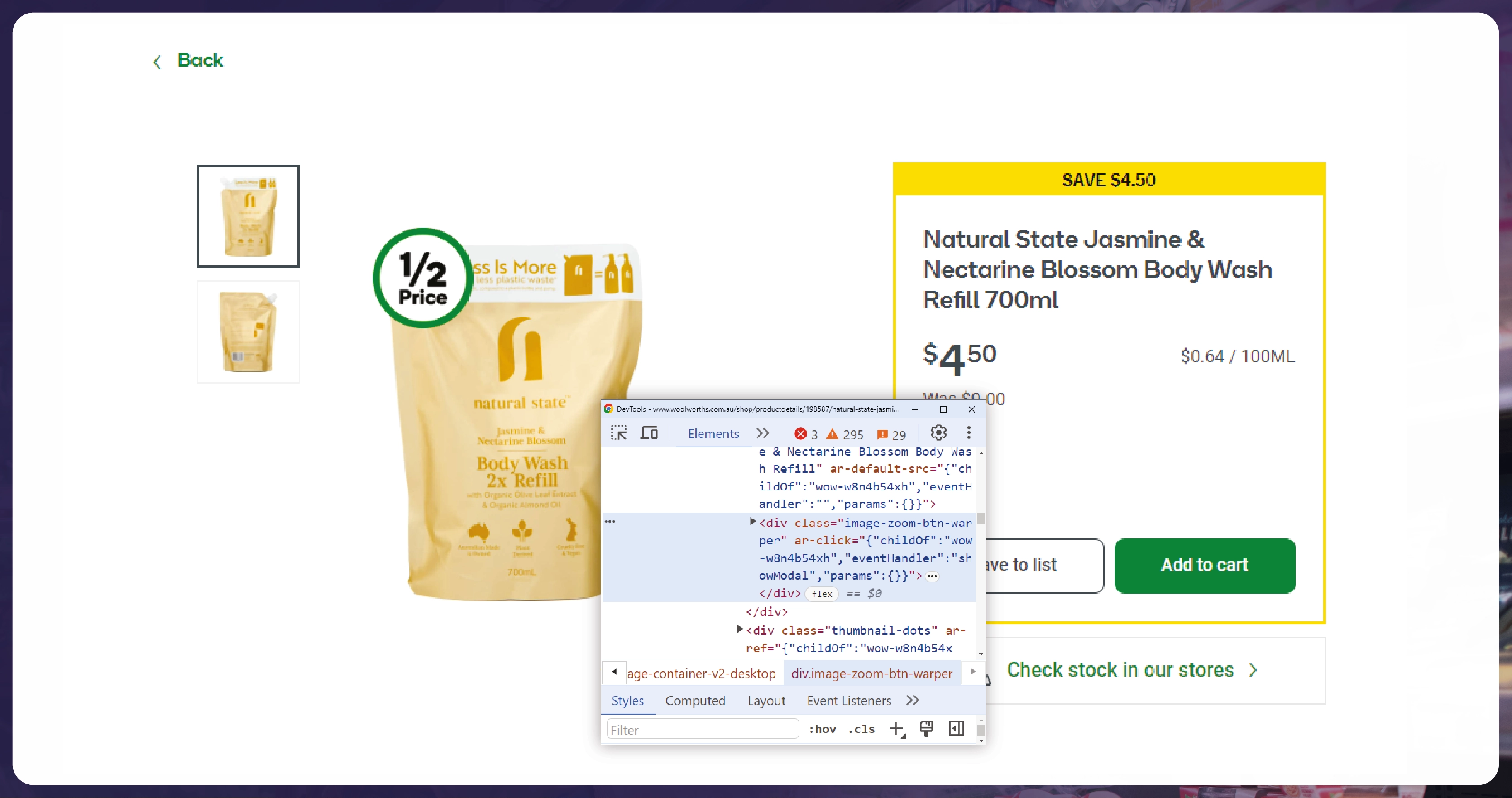

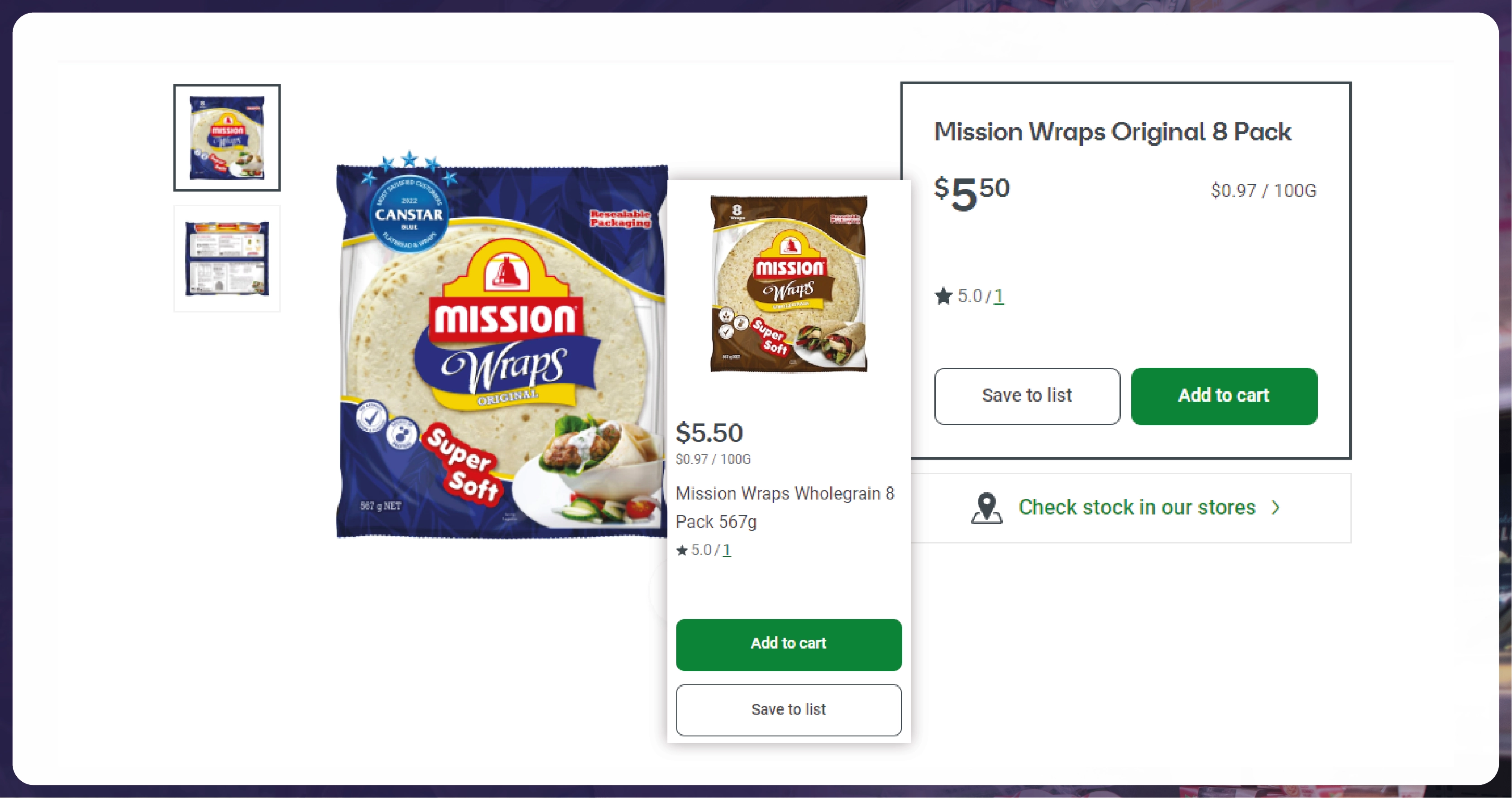

- Defining Data Points: Decide which data you will extract. This typically includes product titles, prices, descriptions, availability, promotional offers, discounts, and customer reviews.

- Identifying Data Sources: Review each supermarket's website for accessible pages that contain the relevant information, such as product pages, category pages, or sales sections.

- Building Scraping Scripts: Develop custom scraping scripts tailored to how each supermarket structures its information. Python libraries such as Beautiful Soup or Scrapy are commonly used for this. Such scripts often mimic human browsing behavior, requesting pages at a moderate pace so that data is collected respectfully and unobtrusively.

- Handling Dynamic Content: Supermarket websites often load content asynchronously, so scripts may need to handle AJAX requests or drive an automated browser with a tool such as Selenium.

- Implementing Robust Error Handling: Handle errors that may occur during scraping, for example from a slow internet connection, changes in page structure, or CAPTCHA verification.

- Respecting Website Policies: Follow good scraping practice and do not violate the website's terms of service. Prioritize data privacy and security, and limit the number of requests you send so that servers are not overloaded.
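The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a production scraper: it uses only the standard library (`html.parser` instead of Beautiful Soup or Scrapy), and the HTML markup, class names, `demo-bot` user agent, and `example.com` URLs are all hypothetical. Real supermarket pages will be structured differently, so the selectors would need to be adapted per site.

```python
import time
import urllib.robotparser
from html.parser import HTMLParser

# Hypothetical product-card markup; every real site uses its own structure.
SAMPLE_HTML = """
<div class="product" data-sku="1001">
  <span class="title">Full Cream Milk 2L</span>
  <span class="price">$3.10</span>
</div>
<div class="product" data-sku="1002">
  <span class="title">White Bread 700g</span>
  <span class="price">$2.70</span>
</div>
"""


class ProductParser(HTMLParser):
    """Collects the SKU, title, and price text of each product card."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field that the next text node belongs to

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        css_class = attrs.get("class", "")
        if tag == "div" and css_class == "product":
            self.products.append({"sku": attrs.get("data-sku")})
        elif tag == "span" and css_class in ("title", "price") and self.products:
            self._field = css_class

    def handle_data(self, data):
        if self._field and data.strip():
            self.products[-1][self._field] = data.strip()
            self._field = None


def parse_products(html):
    """Return a list of product dicts extracted from an HTML string."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products


def is_allowed(robots_txt, user_agent, url):
    """Check a URL against the text of a robots.txt file."""
    rules = urllib.robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules.can_fetch(user_agent, url)


def fetch_with_retry(fetch, url, retries=3, delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying on network errors with exponential backoff."""
    for attempt in range(retries):
        try:
            return fetch(url)
        except OSError:  # urllib's URLError and socket timeouts derive from OSError
            if attempt == retries - 1:
                raise
            sleep(delay * 2 ** attempt)


if __name__ == "__main__":
    for product in parse_products(SAMPLE_HTML):
        print(product)
```

Here `fetch` is any callable that downloads a page, so the retry logic can be exercised without touching the network. Pages that load content through JavaScript would still need an automated browser, which this sketch does not cover.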

Analyzing Scraped Data

Analyzing the scraped data requires the following:

Data Cleansing and Normalization: Pre-process the data before analyzing it. This includes removing inconsistencies, handling missing values, and standardizing formats, among other things. Doing so removes ambiguity and possible bias when data from different sources is combined.

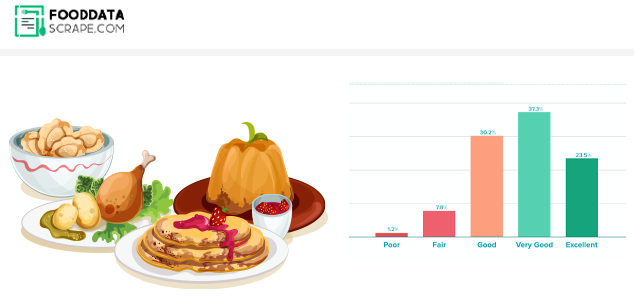
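As a rough illustration of the cleansing step, the sketch below standardizes chain names, tidies product titles, converts price strings to numbers, and drops rows with no usable price. The sample rows and field names are invented for the example, and dropping incomplete rows is only one possible policy; imputing or flagging missing values may suit some analyses better.

```python
import re

# Hypothetical raw rows as they might come out of a scraper.
RAW_ROWS = [
    {"chain": "Woolworths", "title": "  Full Cream Milk 2L ", "price": "$3.10"},
    {"chain": "coles", "title": "Full Cream Milk 2 L", "price": "3.10 AUD"},
    {"chain": "ALDI", "title": "Full Cream Milk 2L", "price": ""},
]


def parse_price(text):
    """Return the first number in the text as a float, or None if absent."""
    match = re.search(r"\d+(?:\.\d+)?", text.replace(",", ""))
    return float(match.group()) if match else None


def normalize_title(title):
    """Collapse whitespace and join a quantity to its unit ("2 L" -> "2L")."""
    title = re.sub(r"\s+", " ", title).strip()
    return re.sub(r"(\d)\s+(ml|l|g|kg)\b", r"\1\2", title, flags=re.IGNORECASE)


def clean_rows(rows):
    """Standardize formats and skip rows that have no usable price."""
    cleaned = []
    for row in rows:
        price = parse_price(row.get("price", ""))
        if price is None:
            continue  # missing value: dropped here, could be imputed instead
        cleaned.append({
            "chain": row["chain"].strip().title(),
            "title": normalize_title(row["title"]),
            "price": price,
        })
    return cleaned


if __name__ == "__main__":
    for row in clean_rows(RAW_ROWS):
        print(row)
```

After this step the two surviving rows share the same title and numeric price format, which is what makes them comparable across chains.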

Comparative Analysis: Compare widely sold FMCG products across supermarket chains, regions, and individual stores on parameters such as pricing, assortment, brand presence, and customer feedback. Identify trends, risks, and areas where an offering is or is not competitive.

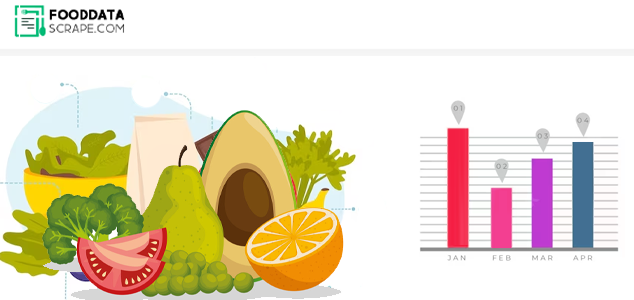
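One simple form of comparative analysis is to group cleaned price observations by chain or by region and compare the averages. The sketch below assumes the data has already been cleaned into rows with `chain`, `region`, `product`, and `price` fields; the observations are made-up values for illustration, not real supermarket prices.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical cleaned observations (prices in AUD, invented for the example).
OBSERVATIONS = [
    {"chain": "Woolworths", "region": "NSW", "product": "Milk 2L", "price": 3.10},
    {"chain": "Woolworths", "region": "VIC", "product": "Milk 2L", "price": 3.30},
    {"chain": "Coles", "region": "NSW", "product": "Milk 2L", "price": 3.00},
    {"chain": "Coles", "region": "VIC", "product": "Milk 2L", "price": 3.20},
    {"chain": "Aldi", "region": "NSW", "product": "Milk 2L", "price": 2.90},
]


def average_price_by(rows, key):
    """Average price per distinct value of `key` (e.g. "chain" or "region")."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row["price"])
    return {name: round(mean(prices), 2) for name, prices in groups.items()}


def price_spread(rows):
    """Gap between the highest and lowest observed price for each product."""
    by_product = defaultdict(list)
    for row in rows:
        by_product[row["product"]].append(row["price"])
    return {name: round(max(p) - min(p), 2) for name, p in by_product.items()}


if __name__ == "__main__":
    print("By chain: ", average_price_by(OBSERVATIONS, "chain"))
    print("By region:", average_price_by(OBSERVATIONS, "region"))
    print("Spread:   ", price_spread(OBSERVATIONS))
```

The same grouping function works for any categorical field, so store-level comparisons only require adding a `store` key to the rows.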

Identifying Market Trends: Monitor emerging trends in customer preferences, product demand, and retail pricing strategies. Run seasonal comparisons, interpret regional disparities, and assess the effect of external factors (such as economic conditions and public health crises) on the industry's sales and distribution.
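Trend monitoring can start with two basic calculations on a price series collected over time: the period-over-period percentage change and a moving average that smooths short-term noise. The sketch below assumes one price observation per week for a single product; the weekly values are invented for illustration.

```python
# Hypothetical weekly shelf prices (AUD) for one product at one chain.
WEEKLY_PRICES = [3.00, 3.00, 3.10, 3.20, 3.20, 3.40]


def pct_change(values):
    """Percentage change between each pair of consecutive observations."""
    return [(curr - prev) / prev * 100 for prev, curr in zip(values, values[1:])]


def moving_average(values, window):
    """Simple moving average; the result has len(values) - window + 1 items."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


if __name__ == "__main__":
    print([round(change, 1) for change in pct_change(WEEKLY_PRICES)])
    print([round(avg, 2) for avg in moving_average(WEEKLY_PRICES, window=3)])
```

Seasonal comparisons follow the same pattern: compare each period with the same period a year earlier instead of with the previous week.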

Visualizing Insights

Visual representations of scraped data make findings easier to read and understand. Use data visualization tools and techniques to create compelling visuals, such as:

Interactive Dashboards: Use platforms like Tableau and Power BI to create interactive dashboards that visualize key indicators, trends, and comparisons.

Charts and Graphs: Use bar charts, line graphs, pie charts, and heatmaps to show product distribution, pricing behavior, changes in market share, and geographical differences.

Geospatial Analysis: Map the data by region to illustrate local variations in indicators such as product distribution, pricing structures, and consumer patterns. Combine this information with demographic or economic indicators to sharpen the analysis and gain precise insights into market dynamics.

Competitor Benchmarking: Benchmark each supermarket against its competitors on brand position, pricing levels, promotional effectiveness, and customer satisfaction. Act on the shortcomings you find and take advantage of strategic openings.
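For the pricing dimension of benchmarking, one common approach is a basket index: total the price of an identical basket of products at each chain and express every total relative to the cheapest chain (index 100). The sketch below is one way to do this under the assumption that products have already been matched across chains; the basket contents and prices are hypothetical.

```python
# Hypothetical shelf prices (AUD) for a small matched basket at each chain.
BASKET_PRICES = {
    "Woolworths": {"Milk 2L": 3.10, "Bread 700g": 2.70, "Eggs 12pk": 5.50},
    "Coles": {"Milk 2L": 3.00, "Bread 700g": 2.80, "Eggs 12pk": 5.40},
    "Aldi": {"Milk 2L": 2.90, "Bread 700g": 2.50, "Eggs 12pk": 4.99},
}


def basket_index(prices_by_chain):
    """Index each chain's basket total against the cheapest chain (= 100)."""
    # Only products stocked by every chain give a like-for-like comparison.
    common = set.intersection(*(set(p) for p in prices_by_chain.values()))
    if not common:
        raise ValueError("no products are shared by all chains")
    totals = {
        chain: sum(prices[item] for item in common)
        for chain, prices in prices_by_chain.items()
    }
    cheapest = min(totals.values())
    return {chain: round(total / cheapest * 100, 1) for chain, total in totals.items()}


if __name__ == "__main__":
    ranked = sorted(basket_index(BASKET_PRICES).items(), key=lambda kv: kv[1])
    for chain, index in ranked:
        print(f"{chain}: {index}")
```

An index like this feeds naturally into a bar chart or dashboard tile. Non-price dimensions such as customer satisfaction would need their own measures and are not covered by this sketch.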

Conclusion: Supermarket data scraping, together with market research and price monitoring, offers a way to uncover hidden opportunities in FMCG markets across Australia. By combining data mining and data visualization to track the market closely, organizations can capture business opportunities and respond quickly to changing market circumstances. Even when web scraping is carried out ethically, it remains vital to respect data privacy regulations and website policies and to use the technology responsibly when extracting information from online data.