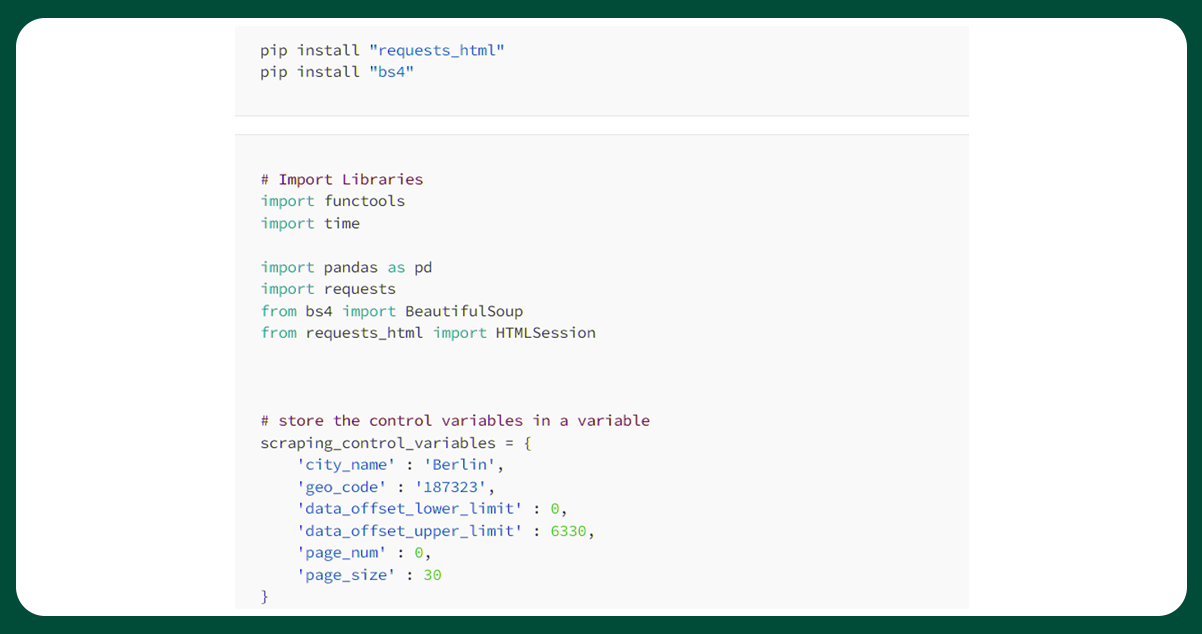

We will use Beautiful Soup, Requests, Seaborn, and Pandas to scrape and analyze TripAdvisor restaurant listing data.

Web Scraping Using Python: Extracting data such as product prices, ratings, and other information is straightforward with our restaurant data scraping services. The scraped data is useful for research, data analysis, data science, and business intelligence. In Python, libraries such as Scrapy, Beautiful Soup, and Requests make it easy to retrieve and parse data from web pages.

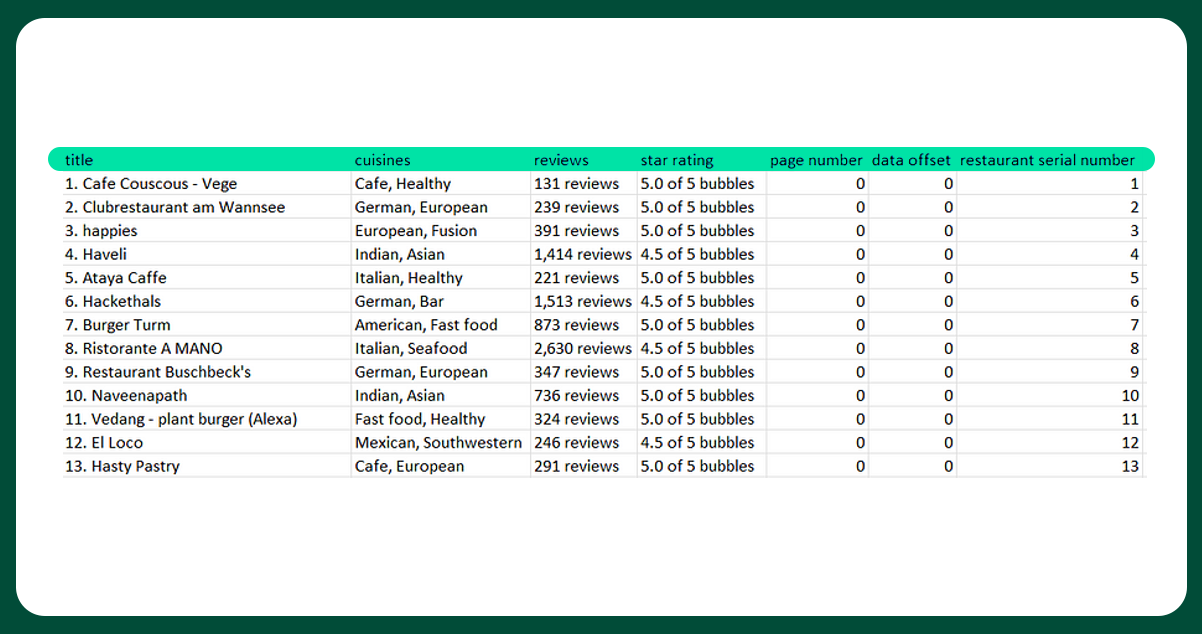

This blog shows how to use Python to scrape restaurant data from TripAdvisor for any city in any country. It then performs exploratory data analysis (EDA) on the scraped data. The scraped restaurant data will contain the following:

- Restaurant Name

- Total Number of Reviews

- Star Rating

- Cuisines

- Restaurant Types

- Photos

- Contact Number

The output also records each restaurant's serial number, page number, and data offset.

Input Variables

In this blog, we have selected Berlin, Germany. To see how the listing URL is structured, take the example of all restaurants in Bengaluru (Bangalore), Karnataka. The link looks like this:

In this link, 297628 is the geo-code and Bengaluru_Bangalore_District_Karnataka is the city name. TripAdvisor lists 11,127 restaurants in Bengaluru, and this count sets the upper limit for the data offset. The input parameters are:

- City Name

- Geo Code

- Upper Data Offset

After choosing a city and finding its geo-code, we can move on to the script. The first step is to import the libraries, and the next is to define the control variables. Since we are scraping restaurant data for Berlin, we set these variables accordingly.
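Here is a sketch of this step. The control variables can live in one dictionary whose keys match the names used later by parse_tripadvisor. The values below are illustrative assumptions. They reuse the Bengaluru geo-code, city name, and restaurant count from the example above, so swap in Berlin's values from its own listing URL. They also treat data_offset_upper_limit as the offset of the last page, computed from the restaurant count and a page size of 30.

```python
import math

PAGE_SIZE = 30              # restaurants per listing page (offsets are multiples of 30)
TOTAL_RESTAURANTS = 11_127  # example count from the Bengaluru listing page


def last_page_offset(total: int, page_size: int = PAGE_SIZE) -> int:
    """Offset of the last listing page for `total` restaurants."""
    pages = math.ceil(total / page_size)
    return max(pages - 1, 0) * page_size


# Illustrative values only: replace city_name, geo_code, and the restaurant
# count with the ones from Berlin's TripAdvisor listing URL.
scraping_control_variables = {
    "city_name": "Bengaluru_Bangalore_District_Karnataka",
    "geo_code": "297628",
    "page_size": PAGE_SIZE,
    "page_num": 1,
    "data_offset_lower_limit": 0,
    "data_offset_upper_limit": last_page_offset(TOTAL_RESTAURANTS),
}

print(scraping_control_variables["data_offset_upper_limit"])  # 11100
```

With 11,127 restaurants and 30 per page there are 371 pages, so the last page starts at offset 370 × 30 = 11,100.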

This script uses a total of 10 functions.

- Get_card

- Get_url

- Parse_tripadvisor

- Get_soup_content

- Scrape_star_ratings

- Get_restaurant_data_from_card

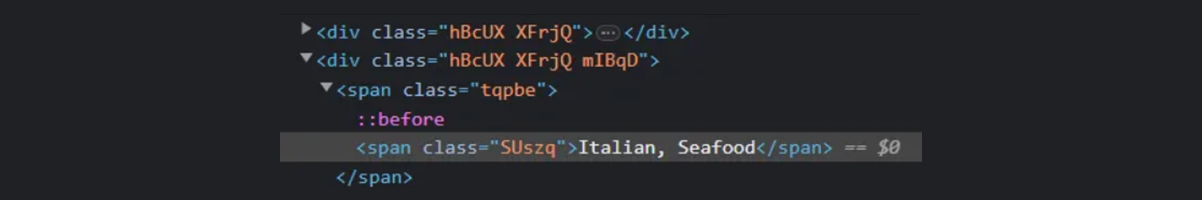

- Scrape_cuisines

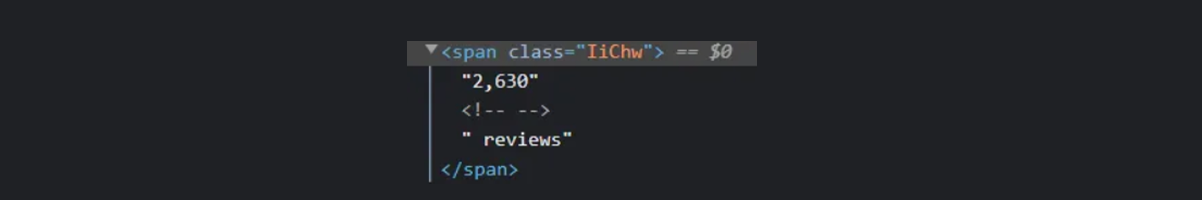

- Scrape_reviews

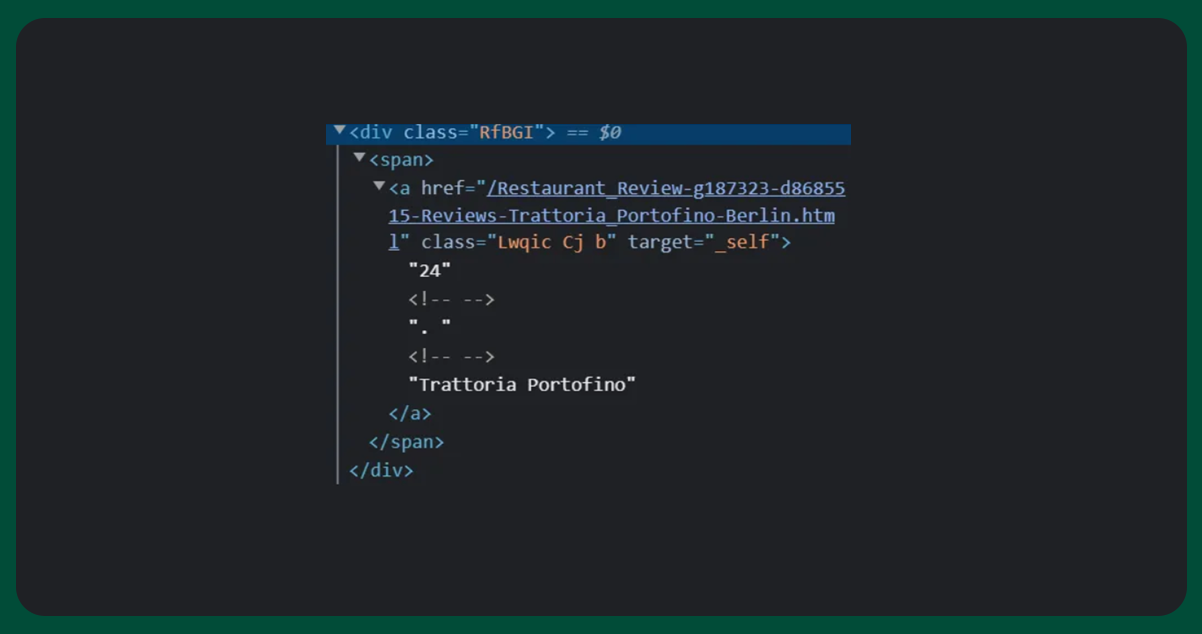

- Scrape_title

- Save_to_csv

We will now go through each function one by one.

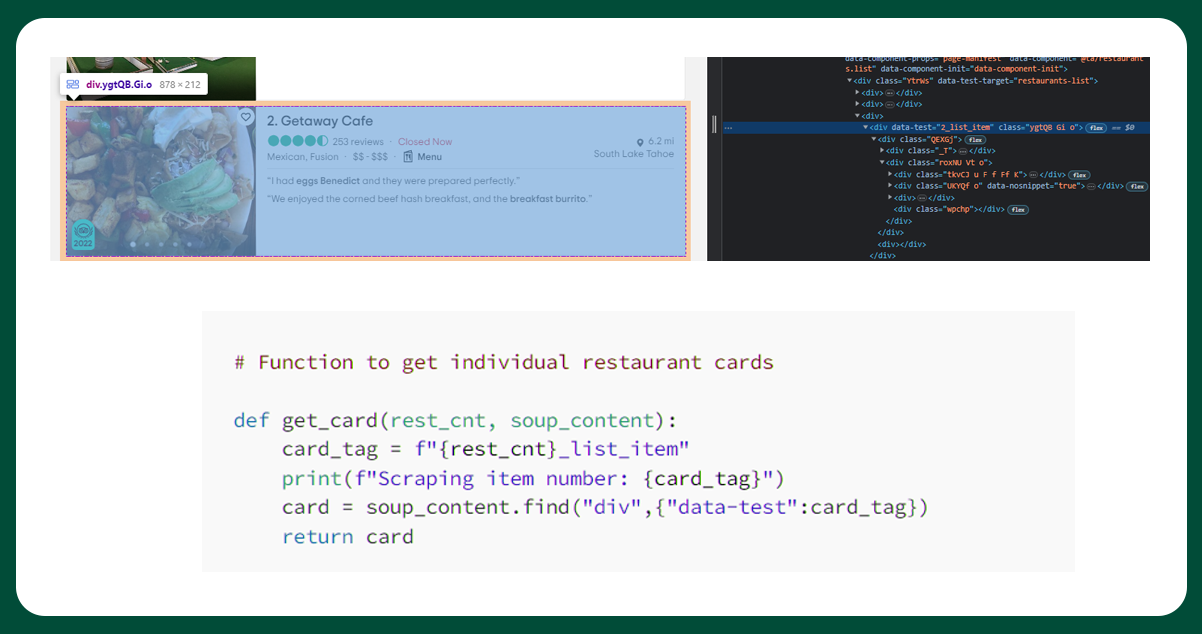

Get_card

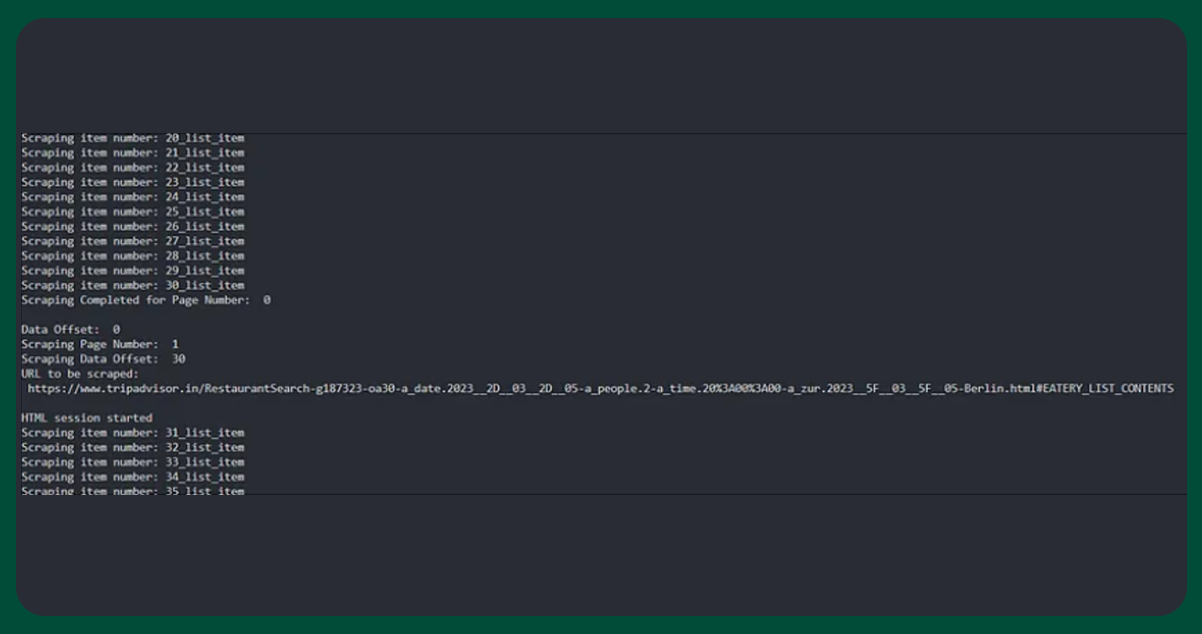

This function retrieves each restaurant card based on the restaurant's serial number (count). The card tags follow the pattern 1_list_item, 2_list_item, 3_list_item, and so on.
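Here is a minimal sketch of get_card with Beautiful Soup. It assumes the serial-number pattern is stored in a `data-test` attribute. That attribute name is a guess, so check it against the live page. The function returns None when a card number is not on the page.

```python
from typing import Optional

from bs4 import BeautifulSoup
from bs4.element import Tag

# Assumption: cards look like <div data-test="1_list_item">...</div>.
# Change CARD_ATTRIBUTE if TripAdvisor uses a different attribute.
CARD_ATTRIBUTE = "data-test"


def get_card(soup: BeautifulSoup, restaurant_count: int) -> Optional[Tag]:
    """Return the card for the given serial number, or None if it is absent."""
    return soup.find(attrs={CARD_ATTRIBUTE: f"{restaurant_count}_list_item"})


html = '<div data-test="1_list_item">Cafe A</div><div data-test="2_list_item">Cafe B</div>'
soup = BeautifulSoup(html, "html.parser")
print(get_card(soup, 2).get_text())  # Cafe B
print(get_card(soup, 3))             # None
```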

Get_url

It takes the geo-code, city name, and data offset as inputs and generates a separate URL for each page to be scraped. The data offset is a multiple of 30, and the URLs follow the pattern below:

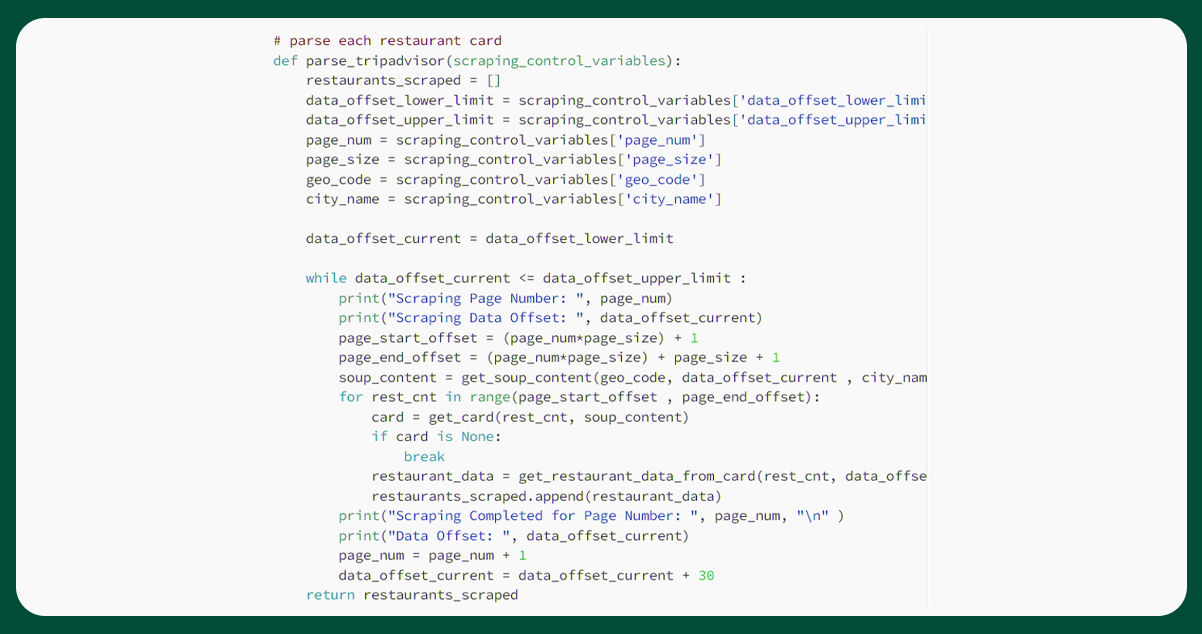
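The URL construction can be sketched as follows. Two details are assumptions to verify against the pattern above: the exact pattern (Restaurants-g<geo_code>-oa<offset>-<city_name>.html, with no oa segment on the first page) and the base domain.

```python
# Assumptions: the base domain (regional sites such as tripadvisor.in also exist)
# and the "-oa<offset>" segment used for every page after the first.
BASE_URL = "https://www.tripadvisor.com"
PAGE_SIZE = 30


def get_url(geo_code: str, city_name: str, data_offset: int) -> str:
    """Build the listing URL for the page that starts at data_offset."""
    if data_offset % PAGE_SIZE != 0:
        raise ValueError(f"data_offset must be a multiple of {PAGE_SIZE}")
    offset_part = f"-oa{data_offset}" if data_offset > 0 else ""
    return f"{BASE_URL}/Restaurants-g{geo_code}{offset_part}-{city_name}.html"


print(get_url("297628", "Bengaluru_Bangalore_District_Karnataka", 30))
# https://www.tripadvisor.com/Restaurants-g297628-oa30-Bengaluru_Bangalore_District_Karnataka.html
```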

Parse_tripadvisor

This function takes the control variables as input and is the most important function in the script. The variables data_offset_lower_limit, page_num, page_size, city_name, geo_code, and data_offset_upper_limit get their values from the scraping_control_variables dictionary. The current offset, data_offset_current, starts at data_offset_lower_limit and moves forward one page at a time in the loop that follows.

The while loop runs until the last page has been scraped. The page_start_offset and page_end_offset variables take values such as (0, 31), (31, 61), (61, 91), and so on. The function get_restaurant_data_from_card scrapes each restaurant's details and appends them to a list that starts out empty. Because a page (usually the last one) may contain fewer than 30 restaurants, we include an if condition inside the loop.
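The page loop can be sketched like this. To keep the sketch runnable on its own, the helpers are passed in as hypothetical callables rather than imported. In the real script they would be get_soup_content, get_card, and get_restaurant_data_from_card. Card numbers here run from offset + 1 to offset + 30, so the first range is (1, 31) rather than (0, 31), because card numbering starts at 1_list_item.

```python
from typing import Any, Callable, Optional

# Hypothetical helper signatures (stand-ins for the script's own functions):
#   fetch_page(offset)                     -> parsed page for that offset
#   get_card(page, count)                  -> card for serial number `count`, or None
#   extract(count, page_num, offset, card) -> dict with one restaurant's details
def parse_tripadvisor(
    control: dict,
    fetch_page: Callable[[int], Any],
    get_card: Callable[[Any, int], Optional[Any]],
    extract: Callable[[int, int, int, Any], dict],
) -> list:
    page_size = control["page_size"]
    page_num = control["page_num"]
    data_offset_current = control["data_offset_lower_limit"]
    restaurants = []  # starts empty and collects one dict per restaurant

    # Keep requesting pages until the upper data offset has been scraped.
    while data_offset_current <= control["data_offset_upper_limit"]:
        page = fetch_page(data_offset_current)
        # Serial numbers continue across pages: (1, 31), (31, 61), (61, 91), ...
        page_start_offset = data_offset_current + 1
        page_end_offset = data_offset_current + page_size + 1
        for count in range(page_start_offset, page_end_offset):
            card = get_card(page, count)
            if card is None:
                # Fewer than page_size restaurants on this page (usually the last one).
                continue
            restaurants.append(extract(count, page_num, data_offset_current, card))
        data_offset_current += page_size
        page_num += 1
    return restaurants
```

In practice, fetch_page would wrap the city name and geo-code, for example `lambda offset: get_soup_content(city_name, offset, geo_code)`.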

Get_soup_content

The soup object is what gives us access to key information such as cuisines, titles, and ratings. This function takes the city name, data offset, and geo-code as inputs and passes them to get_url. It then requests the resulting URL to obtain a response object, parses the returned HTML, and loads it into a Beautiful Soup (BS4) object.

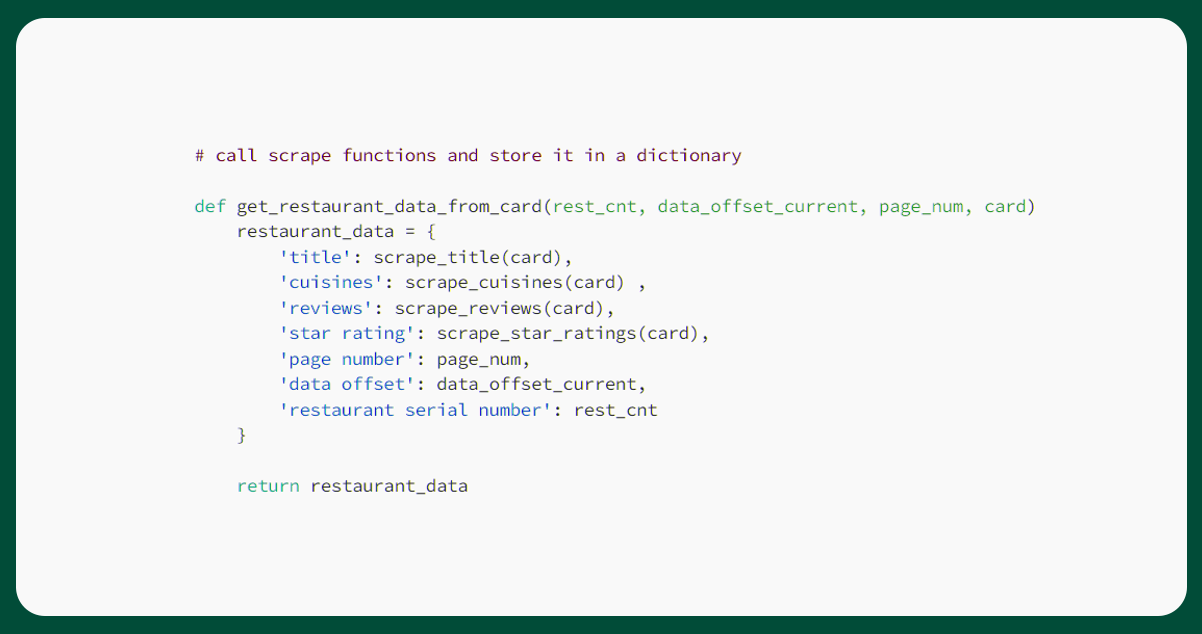
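Here is a minimal sketch of get_soup_content with Requests and Beautiful Soup. The base URL, URL pattern, and User-Agent string are assumptions. A compact get_url is repeated so the block runs on its own. Parsing is split into a small parse_html helper so it can be tried without a network request.

```python
import requests
from bs4 import BeautifulSoup

# Assumptions: the URL pattern below and a browser-like User-Agent header
# (TripAdvisor may refuse requests sent with the default python-requests agent).
BASE_URL = "https://www.tripadvisor.com"
HEADERS = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64)"}


def get_url(geo_code: str, city_name: str, data_offset: int) -> str:
    offset_part = f"-oa{data_offset}" if data_offset > 0 else ""
    return f"{BASE_URL}/Restaurants-g{geo_code}{offset_part}-{city_name}.html"


def parse_html(html: str) -> BeautifulSoup:
    """Load raw HTML into a Beautiful Soup object using the stdlib parser."""
    return BeautifulSoup(html, "html.parser")


def get_soup_content(city_name: str, data_offset: int, geo_code: str,
                     timeout: float = 30.0) -> BeautifulSoup:
    """Fetch one listing page and return it as a Beautiful Soup object."""
    url = get_url(geo_code, city_name, data_offset)
    response = requests.get(url, headers=HEADERS, timeout=timeout)
    response.raise_for_status()  # stop on 403/404 instead of parsing an error page
    return parse_html(response.text)
```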

Get_restaurant_data_from_card

This function takes the restaurant count, page number, current data offset, and card as inputs and calls the individual scraping functions to get the restaurant's details.

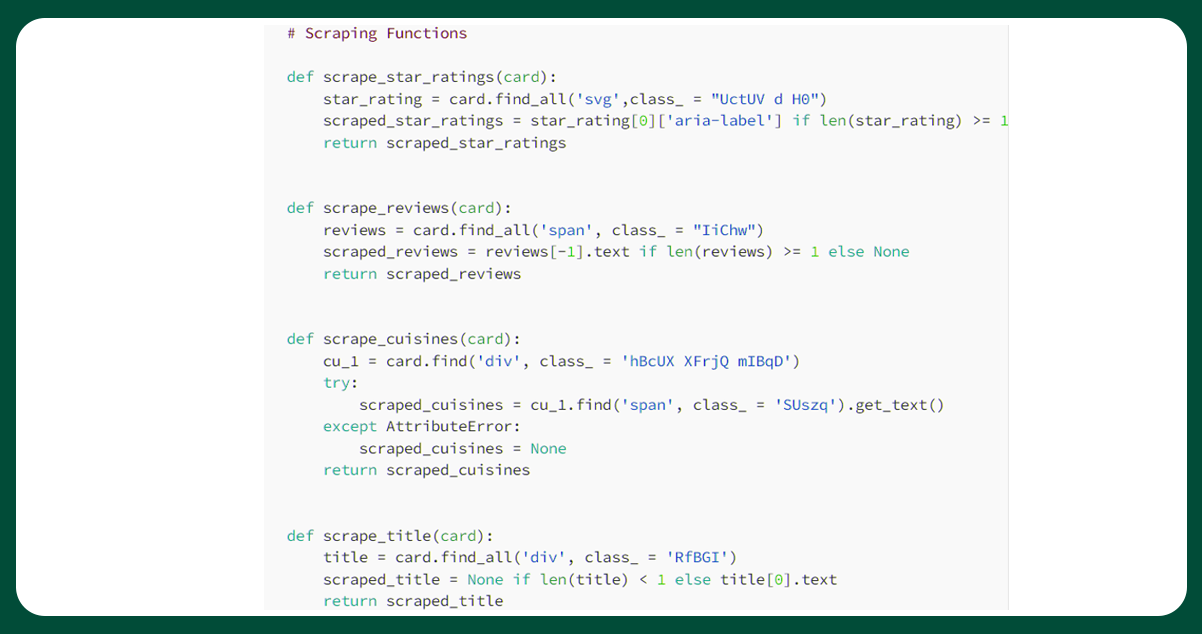
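One way to sketch get_restaurant_data_from_card: it records the bookkeeping fields and then calls each scraping function in turn. Passing the scrapers as a dictionary that maps column names to functions is a design choice of this sketch. The original script may wire them together differently.

```python
from typing import Any, Callable, Dict


def get_restaurant_data_from_card(
    restaurant_count: int,
    page_num: int,
    data_offset_current: int,
    card: Any,
    scrapers: Dict[str, Callable[[Any], Any]],
) -> dict:
    """Combine the bookkeeping fields with the output of each scraping function."""
    row = {
        "restaurant_count": restaurant_count,
        "page_num": page_num,
        "data_offset": data_offset_current,
    }
    # Hypothetical wiring, e.g. {"title": scrape_title, "reviews": scrape_reviews, ...}
    for column, scrape in scrapers.items():
        row[column] = scrape(card)
    return row
```

Adding a new column then only means adding one entry to the dictionary.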

Scraping Functions to Get Restaurant Details

Each of the functions below takes as input the card that contains all the information about a specific restaurant.

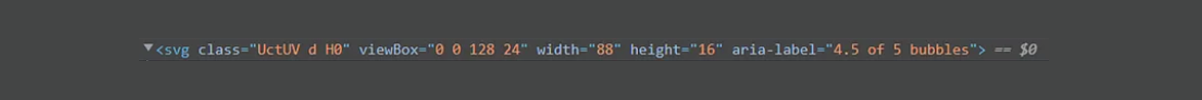

- Scrape_star_ratings: It gets the restaurant's star (customer) rating.

- Scrape_cuisines: It gets the types of cuisine the restaurant offers.

- Scrape_reviews: It gets the total number of reviews for the restaurant.

- Scrape_title: It provides the name of the restaurant.

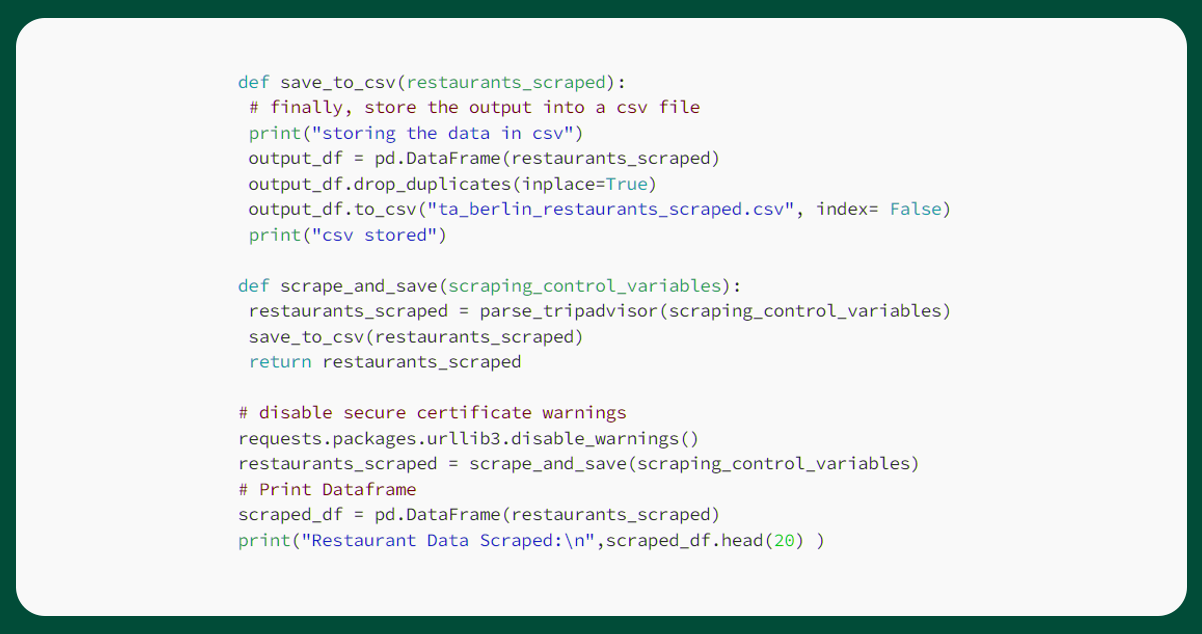
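The four scraping functions might look like this. Every CSS selector here is hypothetical, because TripAdvisor's markup changes often. The parsing also assumes two formats: the rating appears in an aria-label such as "4.5 of 5 bubbles", and the review count appears as text such as "1,234 reviews".

```python
import re
from typing import Optional

from bs4 import BeautifulSoup
from bs4.element import Tag

# Hypothetical CSS selectors: inspect a live card and replace these before use.
SELECTORS = {
    "title": "a.restaurant-title",
    "rating": "svg[aria-label]",
    "reviews": "span.review-count",
    "cuisines": "span.cuisines",
}


def _text(card: Tag, key: str) -> Optional[str]:
    node = card.select_one(SELECTORS[key])
    return node.get_text(strip=True) if node else None


def scrape_title(card: Tag) -> Optional[str]:
    return _text(card, "title")


def scrape_star_ratings(card: Tag) -> Optional[float]:
    # Assumes a label such as "4.5 of 5 bubbles".
    node = card.select_one(SELECTORS["rating"])
    if node is None:
        return None
    match = re.search(r"(\d+(?:\.\d+)?) of 5", node.get("aria-label", ""))
    return float(match.group(1)) if match else None


def scrape_reviews(card: Tag) -> Optional[int]:
    # Keeps only the digits: "1,234 reviews" -> 1234.
    digits = re.sub(r"\D", "", _text(card, "reviews") or "")
    return int(digits) if digits else None


def scrape_cuisines(card: Tag) -> Optional[str]:
    return _text(card, "cuisines")


card = BeautifulSoup(
    '<div><a class="restaurant-title">1. Cafe Example</a>'
    '<svg aria-label="4.5 of 5 bubbles"></svg>'
    '<span class="review-count">1,234 reviews</span>'
    '<span class="cuisines">Italian, Pizza</span></div>',
    "html.parser",
).div
print(scrape_title(card), scrape_star_ratings(card), scrape_reviews(card), scrape_cuisines(card))
# 1. Cafe Example 4.5 1234 Italian, Pizza
```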

Saving the scraped data as a CSV

In this step, we save the data frame as a CSV file, which makes it easy to reuse in any data analysis or data science project.

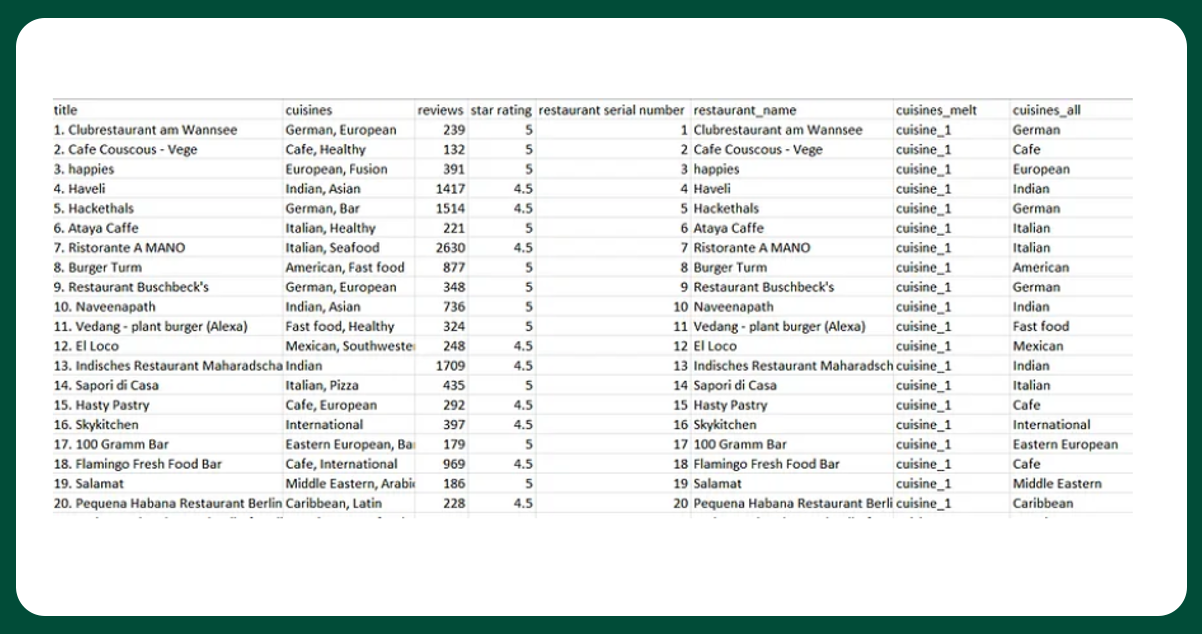

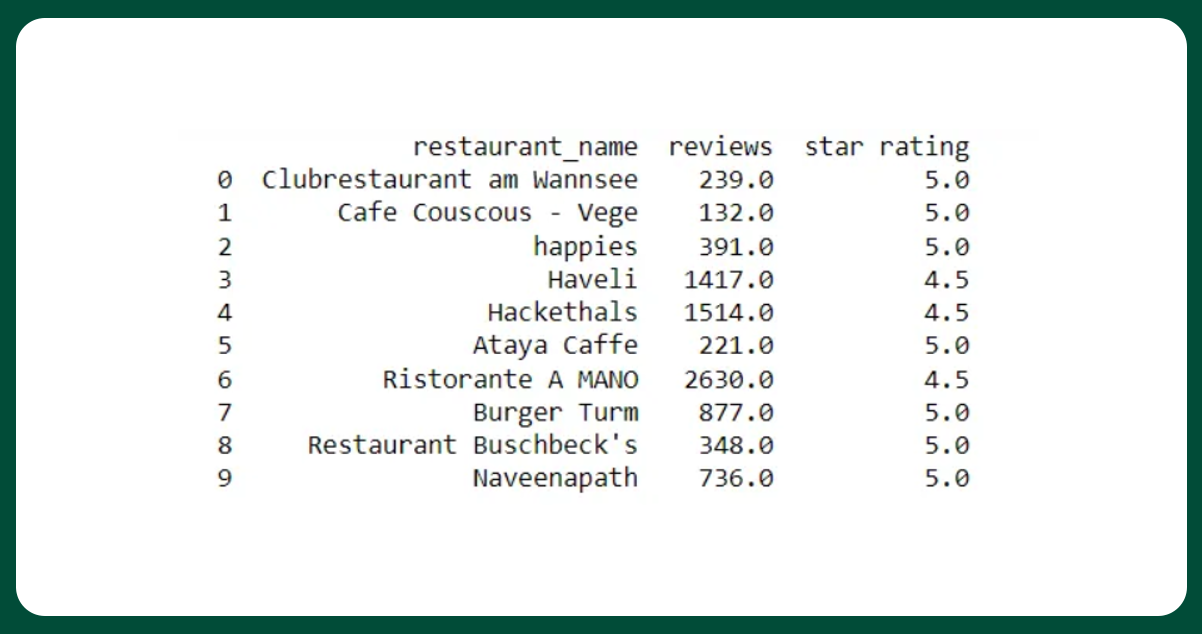
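Saving the rows takes only a few lines with pandas. In this minimal sketch, the file name in the usage comment is a placeholder.

```python
import pandas as pd


def save_to_csv(restaurants: list, path: str) -> pd.DataFrame:
    """Convert the scraped rows (one dict per restaurant) to a data frame and save it."""
    df = pd.DataFrame(restaurants)
    # index=False keeps pandas' row index out of the file, so reloading
    # does not produce an extra "Unnamed: 0" column.
    df.to_csv(path, index=False)
    return df


# Usage (file name is a placeholder):
# df = save_to_csv(restaurants, "berlin_restaurants.csv")
```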

Exploratory Data Analysis

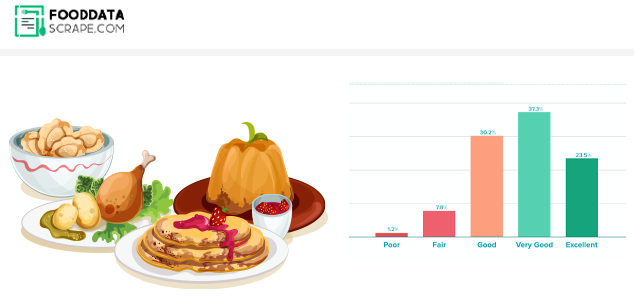

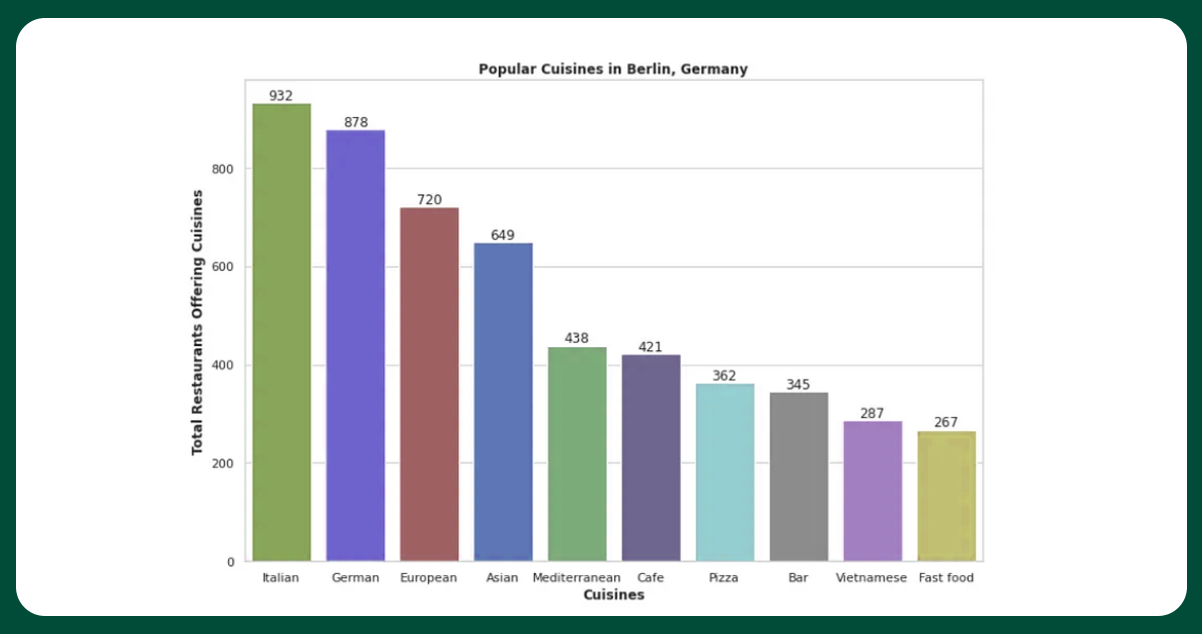

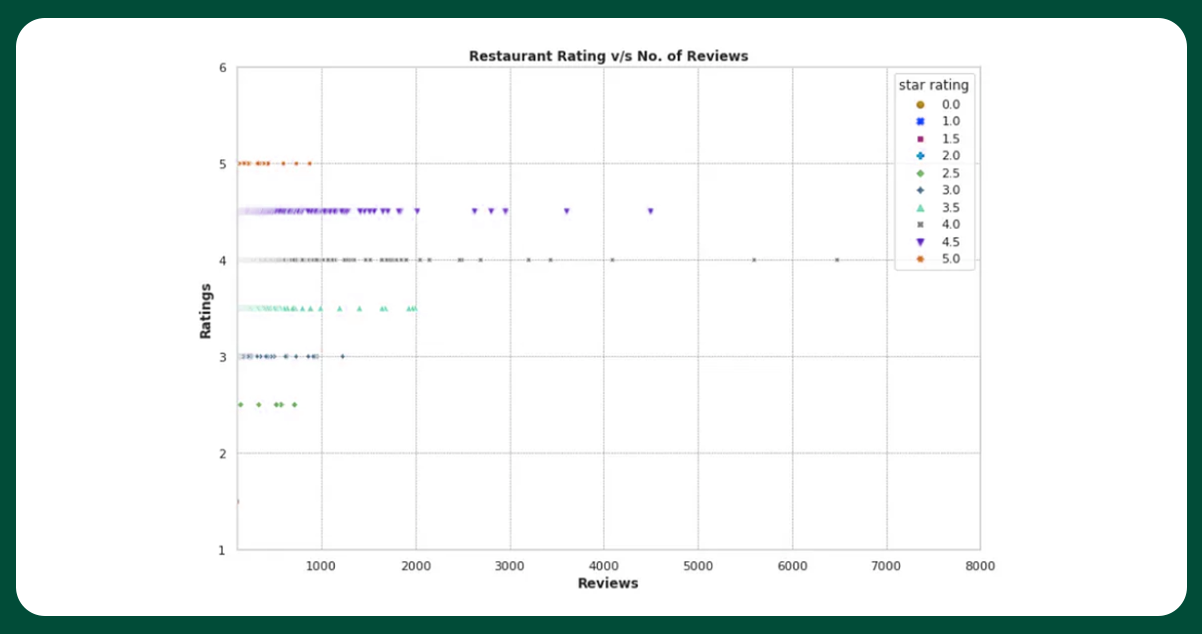

We will now perform some exploratory data analysis on the scraped data, using Seaborn to plot the analyses listed below.

- Top 10 most popular cuisines in Berlin, Germany

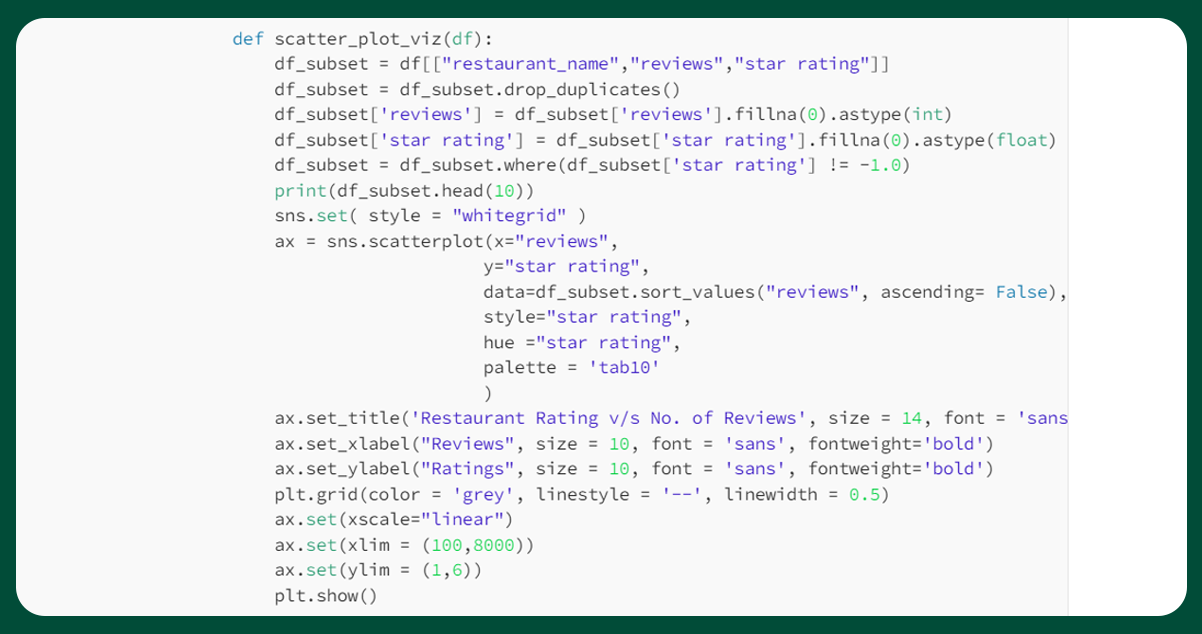

- Number of reviews versus star ratings of restaurants in Berlin, Germany

The function clean_dataframe cleans the scraped data frame. This involves separating the serial number from the restaurant name, dropping unnecessary columns, splitting out the cuisines, and removing noise from specific columns.
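Here is a sketch of clean_dataframe in pandas. It assumes the column names title, reviews, star_rating, cuisines, page_num, and data_offset, and a title format of "12. Restaurant Name". It covers splitting out the serial number, dropping columns, tidying the cuisines, and removing noise from the reviews and rating columns. The original's exact cleaning rules may differ.

```python
import pandas as pd

# Assumed raw columns: title ("12. Cafe Example"), reviews ("1,234 reviews"),
# star_rating, cuisines ("Italian ,Pizza"), plus bookkeeping columns.
UNNEEDED_COLUMNS = ["page_num", "data_offset"]  # hypothetical choice


def clean_dataframe(df: pd.DataFrame) -> pd.DataFrame:
    df = df.copy()

    # Split "12. Cafe Example" into serial_number=12 and title="Cafe Example".
    parts = df["title"].str.extract(r"^\s*(\d+)\.\s*(.+)$")
    df["serial_number"] = pd.to_numeric(parts[0], errors="coerce").astype("Int64")
    df["title"] = parts[1].fillna(df["title"]).str.strip()

    # Strip noise such as "reviews" and thousands separators: "1,234 reviews" -> 1234.
    if not pd.api.types.is_numeric_dtype(df["reviews"]):
        df["reviews"] = pd.to_numeric(
            df["reviews"].str.replace(r"\D", "", regex=True), errors="coerce"
        )
    df["star_rating"] = pd.to_numeric(df["star_rating"], errors="coerce")

    # Normalise the comma-separated cuisines: "Italian ,Pizza" -> "Italian, Pizza".
    df["cuisines"] = (
        df["cuisines"]
        .fillna("")
        .str.split(",")
        .apply(lambda items: ", ".join(i.strip() for i in items if i.strip()))
    )

    return df.drop(columns=UNNEEDED_COLUMNS, errors="ignore")
```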

The function scatter_plot_viz uses Seaborn to create a scatter plot of the number of reviews against the star ratings of restaurants in Berlin. Here, we look for restaurants with both high ratings and many reviews. Examining the relationship between ratings and reviews helps identify the best places to eat in Berlin.

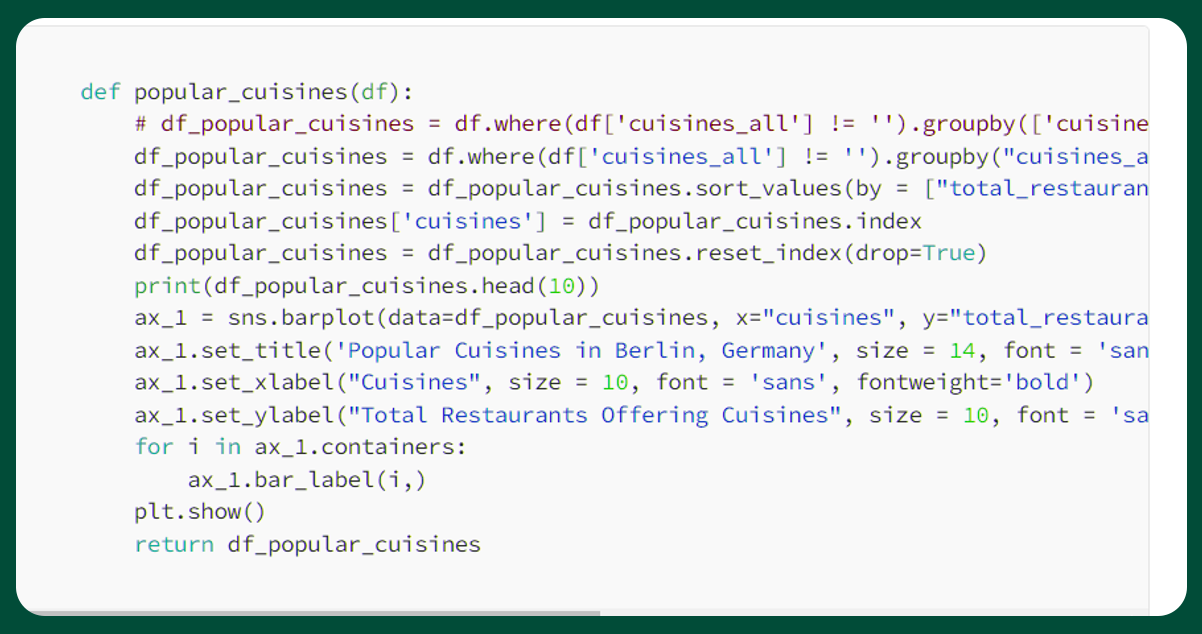
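Here is a minimal sketch of scatter_plot_viz with Seaborn. It adds a hypothetical best_places helper that keeps restaurants rated at least min_rating and sorts them by review count. The column names and the 4.5 threshold are assumptions.

```python
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns


def scatter_plot_viz(df: pd.DataFrame, city: str = "Berlin"):
    """Scatter plot of the number of reviews against star rating."""
    _, ax = plt.subplots(figsize=(10, 6))
    sns.scatterplot(data=df, x="star_rating", y="reviews", alpha=0.6, ax=ax)
    ax.set(
        title=f"Number of reviews vs. star rating, {city}",
        xlabel="Star rating",
        ylabel="Number of reviews",
    )
    return ax


def best_places(df: pd.DataFrame, min_rating: float = 4.5, top_n: int = 10) -> pd.DataFrame:
    """Hypothetical shortlist: restaurants rated >= min_rating, most-reviewed first."""
    shortlist = df[df["star_rating"] >= min_rating]
    return shortlist.sort_values("reviews", ascending=False).head(top_n)
```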

The function popular_cuisines creates a bar plot of the most popular cuisines by counting how many restaurants offer each cuisine. To get this count, we split each restaurant's cuisines on the comma separator and place each cuisine in its own row.
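The split-and-count step maps directly onto pandas' str.split and explode. This sketch assumes a comma-separated cuisines column and draws the plot with Seaborn.

```python
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns


def cuisine_counts(df: pd.DataFrame, top_n: int = 10) -> pd.DataFrame:
    """Count restaurants per cuisine after putting each cuisine in its own row."""
    cuisines = df["cuisines"].dropna().str.split(",").explode().str.strip()
    cuisines = cuisines[cuisines != ""]
    counts = cuisines.value_counts().head(top_n)
    return counts.rename_axis("cuisine").reset_index(name="count")


def popular_cuisines(df: pd.DataFrame, city: str = "Berlin", top_n: int = 10):
    """Horizontal bar plot of the top_n most common cuisines."""
    counts = cuisine_counts(df, top_n)
    _, ax = plt.subplots(figsize=(10, 6))
    sns.barplot(data=counts, x="count", y="cuisine", ax=ax)
    ax.set(
        title=f"Top {top_n} most popular cuisines in {city}",
        xlabel="Number of restaurants",
        ylabel="Cuisine",
    )
    return ax
```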

For more information, contact Food Data Scrape now! You can also reach us for all your Food Data Scraping services and Food Mobile App Data Scraping requirements.
