Yelp is a specialized online platform for finding businesses in your area. Its reviews are a helpful source of data because people describe their experiences with each business there. Customer feedback can help a company identify its strengths and problems and guide its future growth.

Thanks to the internet, we can access a broad range of sites where people share their experiences with services and businesses. We can take advantage of this to collect essential data and produce valuable insights.

By collecting all of these reviews, we can gather a large amount of data from primary and secondary sources, analyze it, and identify areas of concern. Python has libraries that make these tasks easy. We will use the requests module for data extraction because it works well and is simple to use.

Read on to learn more about scraping Yelp data and other restaurant reviews.

We can quickly learn the layout of a site by inspecting it with the browser's developer tools. After examining the structure of the Yelp page, here is a checklist of the data fields we need to collect:

- Name of the Reviewer

- Comment

- Star Rating

- Name Of the Restaurant

- Date

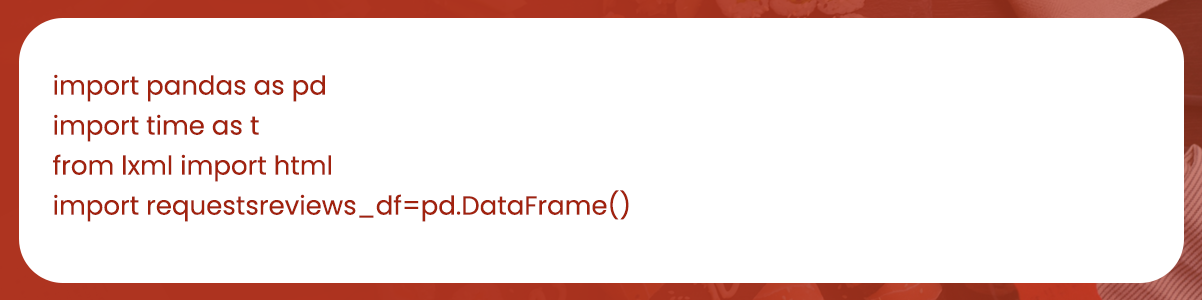

The requests module makes it easy to download documents from the internet. You can install it with pip:


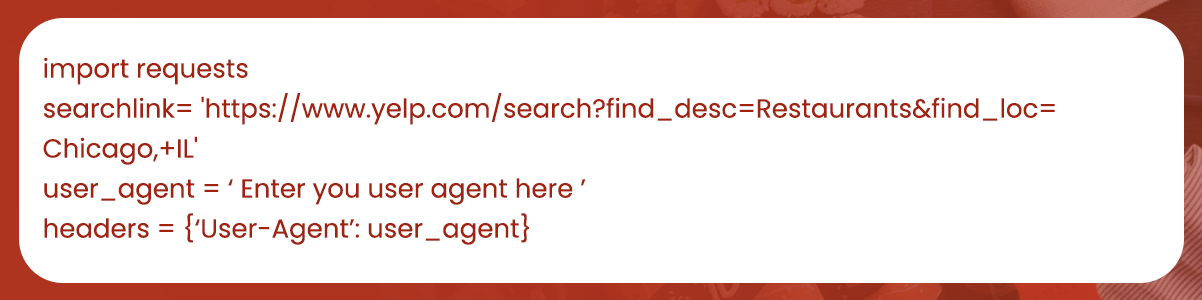

```shell
pip install requests
```

To get started, go to the Yelp site and search for "restaurants near me" in Chicago, IL. Next, after importing all the required libraries, we'll create a pandas DataFrame.


You can download the HTML document with `requests.get()`.


We also send a User-Agent header with the request.

To collect restaurant reviews from any other Yelp search, copy and paste its URL instead; providing the link is all that is required.
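A minimal sketch of the request step, assuming Yelp's `find_desc` and `find_loc` search parameters and a placeholder User-Agent string (the article's actual header value is not reproduced here). `requests.Request(...).prepare()` builds the final URL without sending anything, which is handy for checking it; `fetch_html` is a hypothetical helper that performs the real download.

```python
import requests

# Assumed Yelp search endpoint and query for "restaurants" in Chicago, IL
SEARCH_URL = "https://www.yelp.com/search"
PARAMS = {"find_desc": "Restaurants", "find_loc": "Chicago, IL"}

# Placeholder User-Agent; copy the value your own browser sends instead
HEADERS = {"User-Agent": "Mozilla/5.0 (placeholder) ExampleReviewScraper/0.1"}


def build_search_request(url=SEARCH_URL, params=PARAMS, headers=HEADERS):
    """Prepare, but do not send, the GET request so its final URL can be checked."""
    return requests.Request("GET", url, params=params, headers=headers).prepare()


def fetch_html(url, params=None, headers=HEADERS, timeout=10):
    """Download a page and return its HTML; raises requests.HTTPError on 4xx/5xx."""
    response = requests.get(url, params=params, headers=headers, timeout=timeout)
    response.raise_for_status()
    return response.text


prepared = build_search_request()
print(prepared.url)
# html = fetch_html(SEARCH_URL, params=PARAMS)  # sends the request over the network
```

Passing the query as `params` lets requests handle URL encoding (the comma and space in "Chicago, IL"), and the `timeout` keeps a stalled connection from hanging the scraper.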

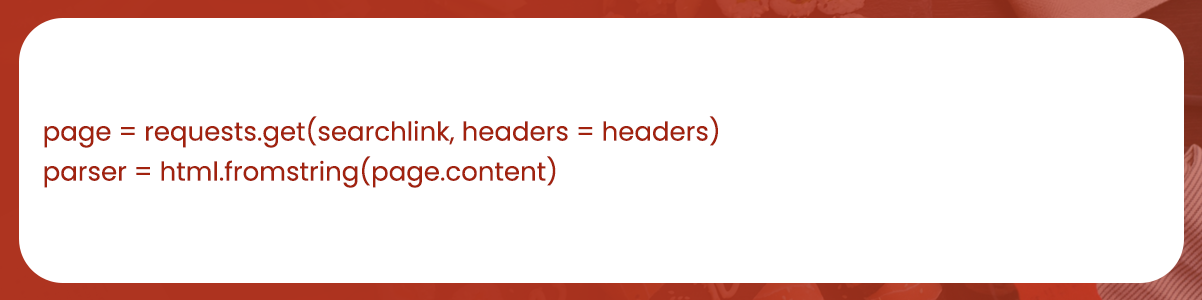

After downloading the HTML document with `requests.get()`, we search the page for links to the individual restaurants.


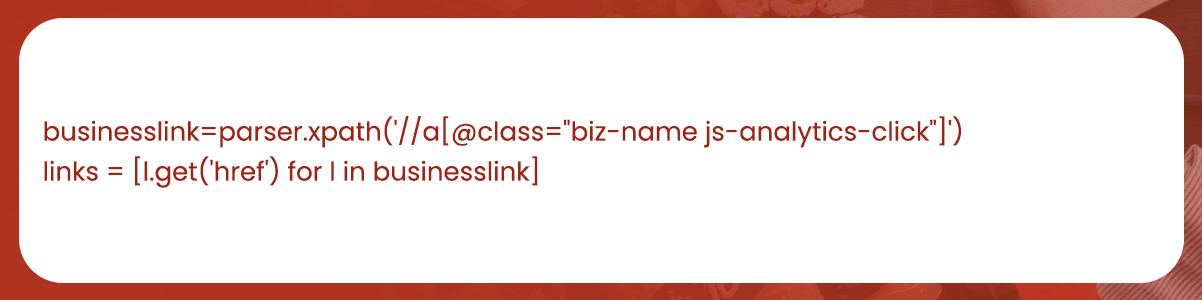

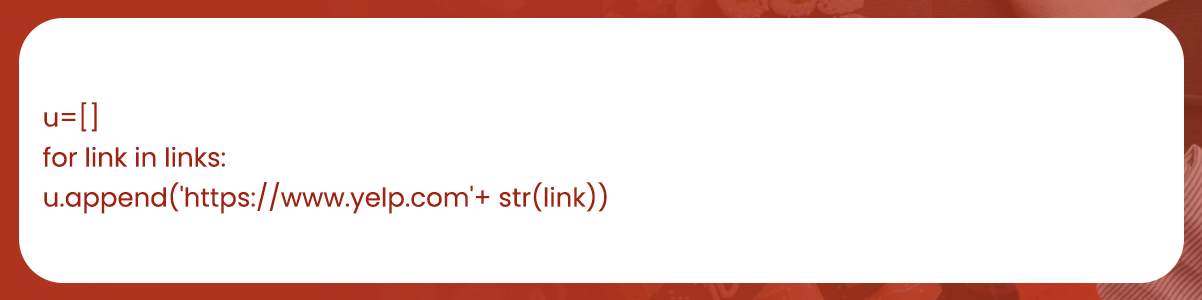
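One way to collect the restaurant links, sketched with Python's built-in `html.parser` so it needs no extra dependencies (BeautifulSoup would work just as well). It assumes that business pages live under `/biz/<slug>`; the sample HTML and restaurant slugs are hypothetical.

```python
from html.parser import HTMLParser


class BizLinkParser(HTMLParser):
    """Collect the unique hrefs of <a> tags that point to /biz/ pages."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        # Assumption: business pages live under /biz/<slug>
        if href.startswith("/biz/"):
            href = href.split("?", 1)[0]  # drop tracking query strings
            if href not in self.links:    # keep first-seen order, no duplicates
                self.links.append(href)


def extract_restaurant_links(html):
    parser = BizLinkParser()
    parser.feed(html)
    return parser.links


# Hypothetical snippet standing in for a downloaded search page
sample = """
<a href="/biz/example-diner-chicago?osq=Restaurants">Example Diner</a>
<a href="/biz/example-diner-chicago">Example Diner</a>
<a href="/search?find_desc=Pizza">Pizza</a>
<a href="/biz/sample-bistro-chicago">Sample Bistro</a>
"""
links = extract_restaurant_links(sample)
print(links)
```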

The extracted links are relative, so we must prepend the hostname to them.


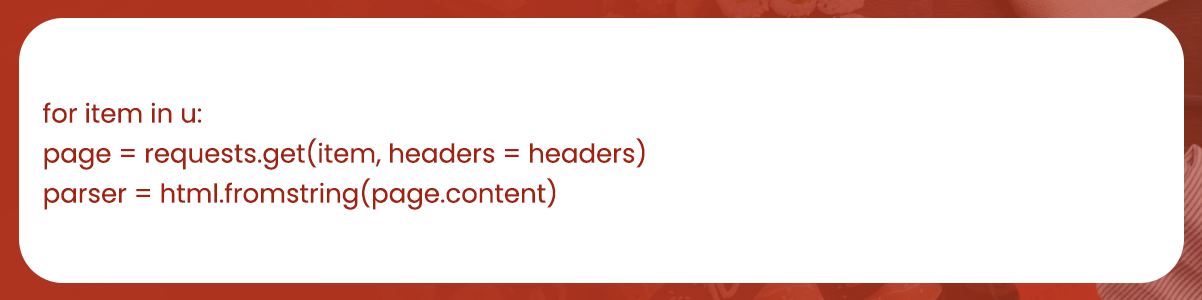
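Prepending the hostname can be done with the standard library's `urljoin`, which also leaves already-absolute URLs unchanged. A small sketch; `BASE_URL` and `to_absolute` are illustrative names.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.yelp.com"  # hostname to prepend to relative links


def to_absolute(links, base=BASE_URL):
    """Turn relative paths such as /biz/... into full URLs; absolute URLs pass through."""
    return [urljoin(base, link) for link in links]


urls = to_absolute(["/biz/example-diner-chicago", "https://www.yelp.com/biz/sample-bistro-chicago"])
print(urls)
```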

The first results page lists 30 restaurants. Now let's visit each restaurant page one at a time and collect its reviews.


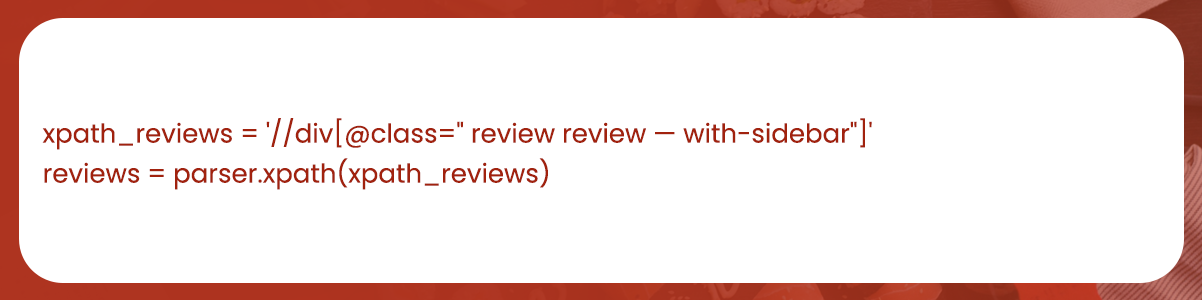
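A sketch of the page-by-page loop. The download function is passed in as a parameter (a design choice for this example, not the article's code), so the loop can be tried without network access; in practice `fetch` would wrap `requests.get`. The two-second delay is an arbitrary, polite default.

```python
import time


def fetch_all(urls, fetch, delay=2.0):
    """Fetch each URL in turn, pausing between requests; failed URLs are skipped.

    `fetch` is any callable that takes a URL and returns its HTML, for example
    lambda u: requests.get(u, headers=HEADERS, timeout=10).text
    """
    pages = {}
    for i, url in enumerate(urls):
        if i > 0 and delay:
            time.sleep(delay)  # space out consecutive requests
        try:
            pages[url] = fetch(url)
        except OSError as exc:  # requests' exceptions subclass OSError (IOError)
            print(f"Skipping {url}: {exc}")
    return pages


# Usage with a stand-in fetcher (no network): the failing URL is skipped
def fake_fetch(url):
    if url.endswith("bad"):
        raise OSError("connection failed")
    return f"<html>{url}</html>"


pages = fetch_all(["/biz/a", "/biz/bad", "/biz/c"], fake_fetch, delay=0)
print(pages)
```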

The reviews are contained in `div` elements with the class `review review--with-sidebar`. Let's extract each of these divs.


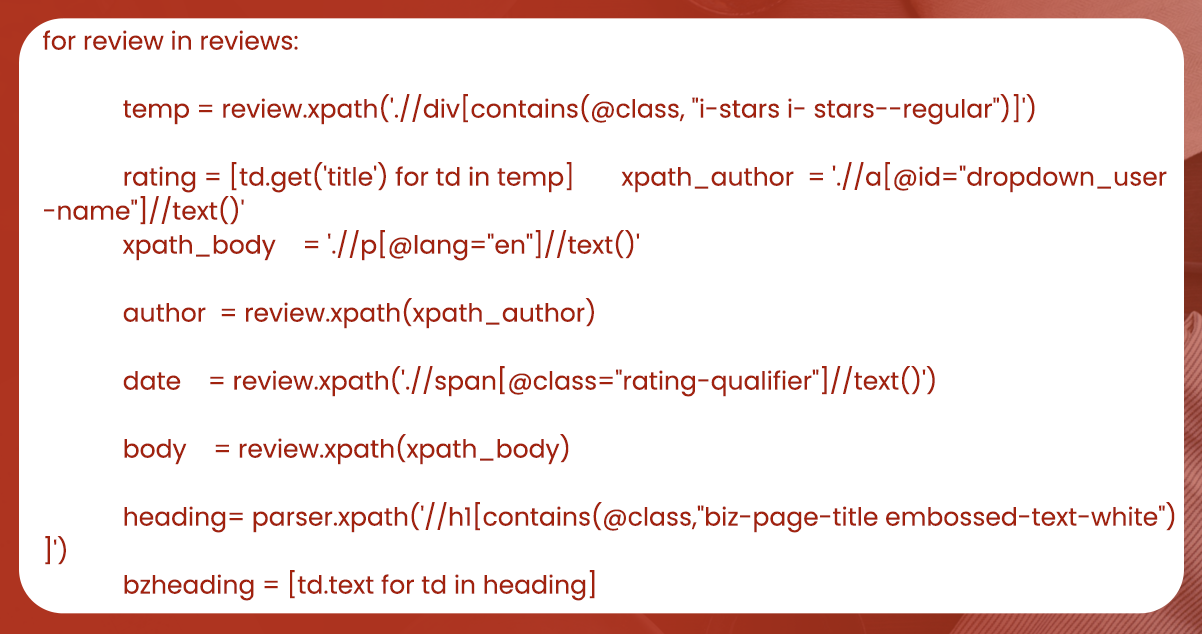
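A stdlib-only sketch that returns the text of every `div` carrying the review classes. It assumes the class string is `review review--with-sidebar` (double hyphen) and tracks nesting depth so inner `div`s do not end a review early; the sample markup is hypothetical.

```python
from html.parser import HTMLParser

TARGET_CLASS = "review review--with-sidebar"  # assumed class string


class ReviewDivParser(HTMLParser):
    """Collect the text of each <div> whose class list contains all target classes."""

    def __init__(self, target_class=TARGET_CLASS):
        super().__init__()
        self.target = set(target_class.split())
        self.depth = 0       # > 0 while inside a matching div (counts nested divs)
        self.current = []
        self.reviews = []

    def handle_starttag(self, tag, attrs):
        if tag != "div":
            return
        if self.depth:
            self.depth += 1  # nested div inside a review
            return
        classes = set((dict(attrs).get("class") or "").split())
        if self.target <= classes:
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if tag == "div" and self.depth:
            self.depth -= 1
            if self.depth == 0:  # closed the review div itself
                self.reviews.append(" ".join(self.current))

    def handle_data(self, data):
        if self.depth and data.strip():
            self.current.append(data.strip())


sample = ('<div class="review review--with-sidebar"><div class="user">Reviewer A</div>'
          '<p>Great pizza!</p></div><div class="ad">Ad</div>')
parser = ReviewDivParser()
parser.feed(sample)
print(parser.reviews)
```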

The elements we want to collect for every review are the reviewer's name, the date, the restaurant name, the review text, and the star rating.


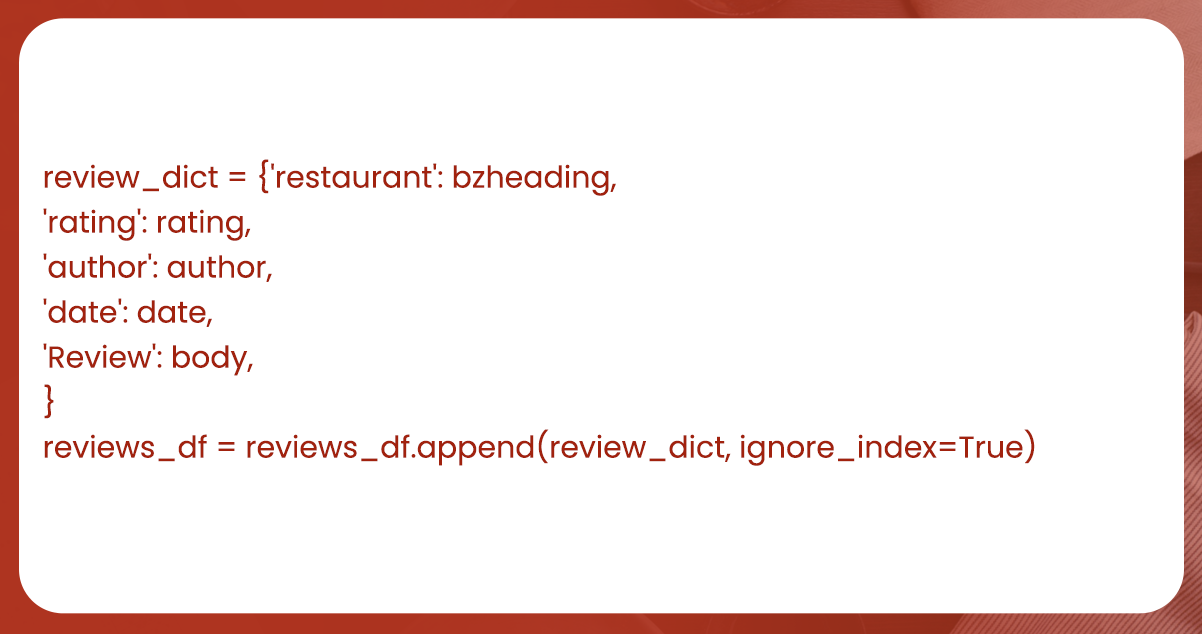

We'll store these fields in a dictionary for each review and save the results in a pandas DataFrame.


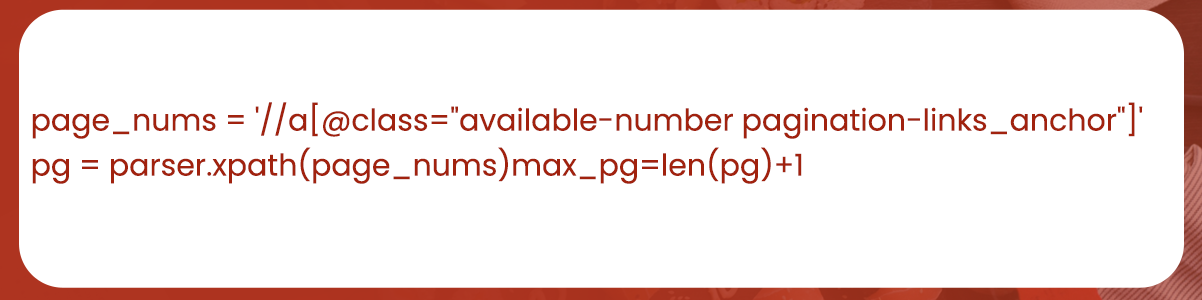
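A sketch of the dictionary-per-review approach. The column names are hypothetical, except `rating` and `Review`, which match the names used later in the article; the field values are placeholders.

```python
import pandas as pd

# Hypothetical column names; "rating" and "Review" match the names used later on
COLUMNS = ["reviewer", "date", "restaurant", "Review", "rating"]


def make_record(reviewer, date, restaurant, review, rating):
    """Bundle the fields of one review into a dictionary keyed by column name."""
    return dict(zip(COLUMNS, (reviewer, date, restaurant, review, rating)))


# Placeholder values standing in for fields parsed from the review divs
records = [
    make_record("Reviewer A", "1/2/2023", "Example Diner", "Great pizza!", "5"),
    make_record("Reviewer B", "1/3/2023", "Example Diner", "Cold food.", "1"),
]

# Build the DataFrame once from the list of dicts; DataFrame.append was removed in pandas 2.0
df = pd.DataFrame(records, columns=COLUMNS)
print(df)
```

Collecting plain dictionaries in a list and converting once at the end is also much faster than growing a DataFrame row by row.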

We now have all the reviews from a single page. To loop through all the review pages, we need the highest page number, which is stored in an `<a>` element with the class "available-number pagination-links anchor".

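A sketch of the pagination step under two assumptions: the page-number links carry every class in the string quoted above (confirm this in the developer tools), and later review pages are addressed with a `?start=<offset>` query parameter in steps of 20 reviews. Both the offset parameter and the page size are assumptions, not confirmed by the article.

```python
from html.parser import HTMLParser

# Class string as quoted in the text; confirm it in your browser's developer tools
PAGE_LINK_CLASS = "available-number pagination-links anchor"


class PageNumberParser(HTMLParser):
    """Collect the integer labels of <a> elements carrying all of the target classes."""

    def __init__(self, target_class=PAGE_LINK_CLASS):
        super().__init__()
        self.target = set(target_class.split())
        self.inside = False
        self.numbers = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            classes = set((dict(attrs).get("class") or "").split())
            self.inside = self.target <= classes

    def handle_endtag(self, tag):
        if tag == "a":
            self.inside = False

    def handle_data(self, data):
        if self.inside and data.strip().isdigit():
            self.numbers.append(int(data.strip()))


def review_page_urls(restaurant_url, html, per_page=20):
    """Return one URL per review page, from page 1 up to the highest page number found."""
    parser = PageNumberParser()
    parser.feed(html)
    last_page = max(parser.numbers, default=1)
    # Assumption: later pages use a ?start=<offset> query parameter
    return [restaurant_url] + [f"{restaurant_url}?start={n * per_page}" for n in range(1, last_page)]


sample = ('<span>1</span>'
          '<a class="available-number pagination-links anchor" href="#">2</a>'
          '<a class="available-number pagination-links anchor" href="#">3</a>')
pages = review_page_urls("https://www.yelp.com/biz/example-diner-chicago", sample)
print(pages)
```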

Using the script above, we extracted a total of 23,869 reviews for about 450 restaurants, with 20 to 60 reviews per restaurant.

Next, let's open a Jupyter notebook and perform text processing and customer sentiment analysis.

First, import the necessary libraries.


The scraped data is saved in a file called all.csv, which we load with pandas.


```python
import pandas as pd
data = pd.read_csv('all.csv')
```

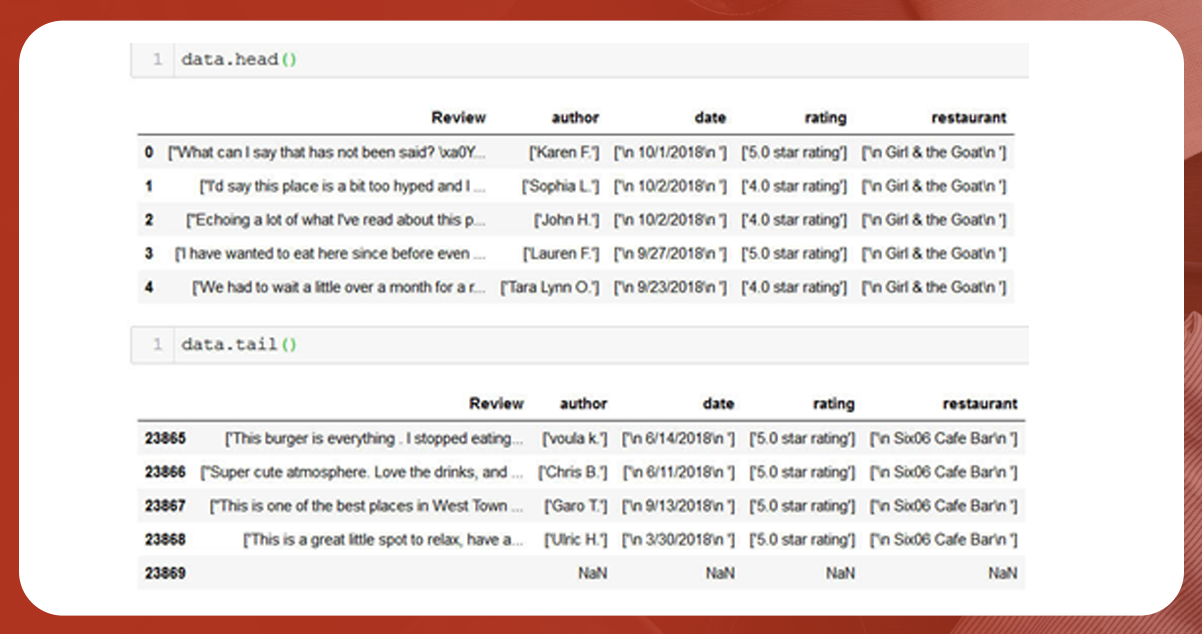

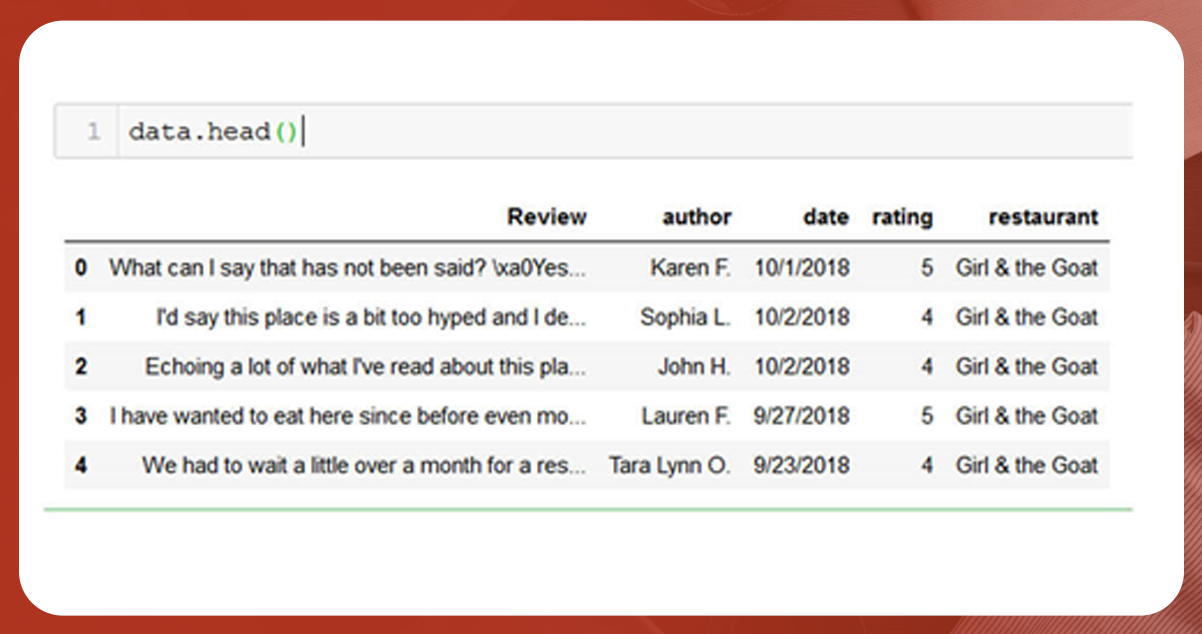
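A small round-trip sketch of saving and reloading the reviews, using a stand-in DataFrame and a temporary directory in place of the real scraped data.

```python
import os
import tempfile

import pandas as pd

# Stand-in for the scraped reviews DataFrame
df = pd.DataFrame({"rating": ["5", "1"], "Review": ["Great pizza!", "Cold food."]})

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "all.csv")
    # index=False keeps the row index out of the file, so read_csv does not
    # bring it back as an extra "Unnamed: 0" column
    df.to_csv(path, index=False)
    data = pd.read_csv(path)

print(data.shape)
print(data.dtypes)
```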

Let's examine the dataset.

The dataset contains 23,869 records in 5 columns. As the output shows, the data needs cleaning: unnecessary symbols, tags, and whitespace should be removed. There are also a few Null/NaN values.

Drop all rows that contain Null/NaN values from the DataFrame.

```python
data = data.dropna()  # dropna() returns a new DataFrame; assign it back
```

Next, use string slicing to remove the unnecessary characters and spaces.
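A minimal cleaning sketch on hypothetical raw values: whitespace is collapsed with a regular expression, and string slicing keeps only the first character of a rating stored as text such as "5 star rating" (that raw format is an assumption).

```python
import pandas as pd

# Hypothetical raw values: stray whitespace, a missing field, and a
# rating stored as text such as "5 star rating"
raw = pd.DataFrame({
    "reviewer": ["  Reviewer A\n", "Reviewer B", None],
    "rating": ["5 star rating", "1 star rating", "4 star rating"],
    "Review": ["Great\n\n pizza! ", "Cold   food.", "Fine."],
})

data = raw.dropna().copy()  # copy() avoids pandas' SettingWithCopyWarning below

# Collapse runs of whitespace (spaces, newlines, tabs) and trim the ends
for col in ["reviewer", "Review"]:
    data[col] = data[col].str.replace(r"\s+", " ", regex=True).str.strip()

# String slicing: keep only the first character of the rating text
data["rating"] = data["rating"].str[0]
print(data)
```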


Getting more information about the data


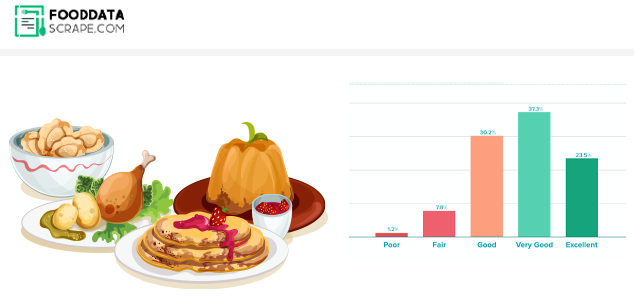

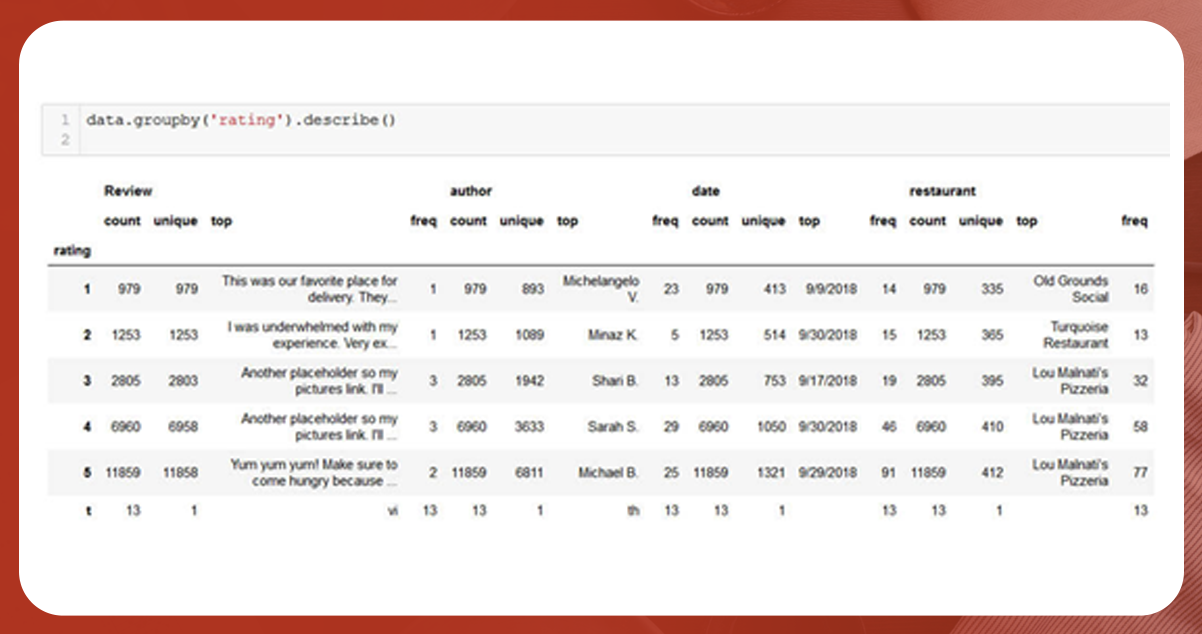

11,859 reviewers gave a 5-star rating, while 979 gave a 1-star rating. However, a few records have an invalid rating of 't'. These rows should be removed.


```python
data.drop(data[data.rating == 't'].index, inplace=True)  # remove rows whose rating is 't'
```

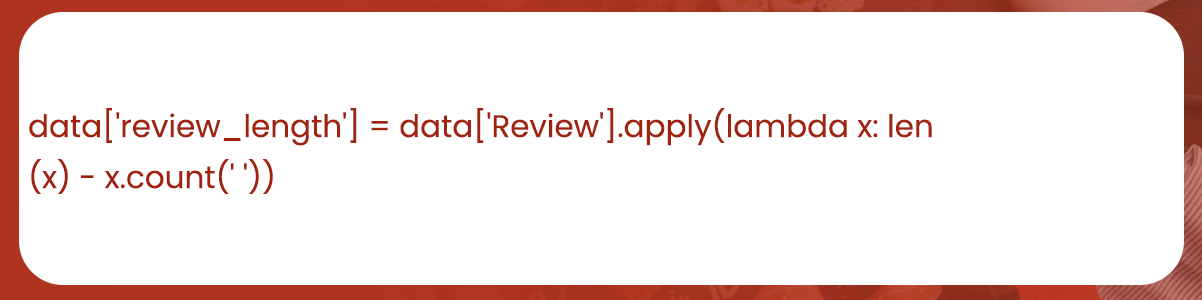
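A more general alternative to dropping only the 't' rows, sketched on a stand-in DataFrame: coercing the column to numbers turns any non-numeric rating into NaN, so a single `dropna` removes them all and the remaining ratings can be stored as integers.

```python
import pandas as pd

# Stand-in for the cleaned data, including one bad 't' rating
data = pd.DataFrame({
    "rating": ["5", "1", "t", "5", "4"],
    "Review": ["Great pizza!", "Cold food.", "??", "Loved it.", "Good."],
})

# Coerce ratings to numbers: 't' (or any other non-numeric value) becomes NaN ...
data["rating"] = pd.to_numeric(data["rating"], errors="coerce")
# ... so dropping NaN ratings removes it, and the rest can become integers
data = data.dropna(subset=["rating"]).astype({"rating": int})

print(data["rating"].value_counts().sort_index())
```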

To understand the data better, we can create a new column called review_length. It holds the number of words in each review, counted by splitting the text on whitespace.


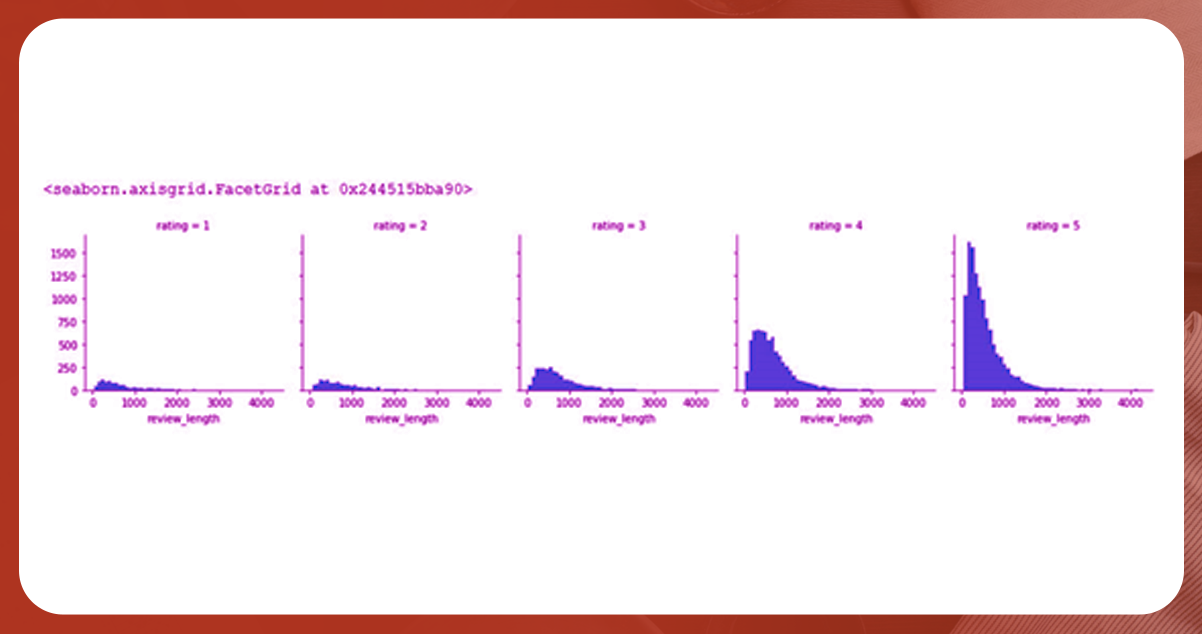

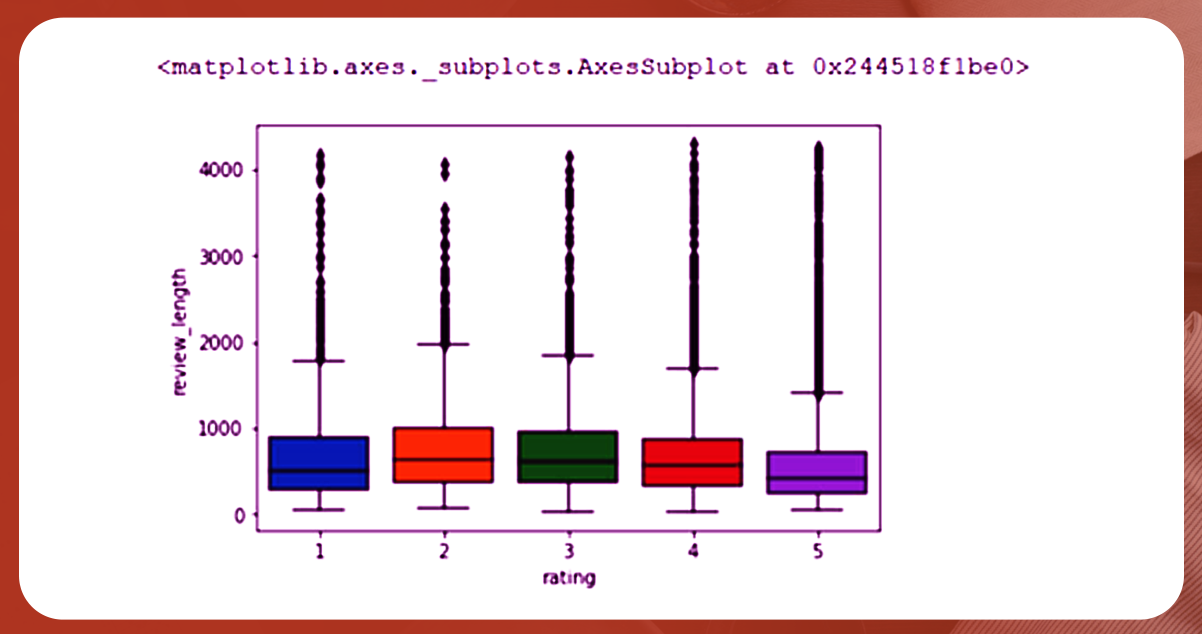
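A one-line way to compute the word count with pandas string methods, shown on stand-in reviews.

```python
import pandas as pd

data = pd.DataFrame({"Review": ["Great pizza!", "Cold food, slow service.", ""]})

# Split each review on whitespace and count the pieces; "" gives 0 words
data["review_length"] = data["Review"].str.split().str.len()
print(data)
```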

Now let's create a few plots to explore the data.


The box plot suggests that two- and three-star reviews tend to be longer than five-star reviews. However, the many dots above the boxes show numerous outliers for every star rating, so review length is unlikely to be a useful feature for sentiment analysis.
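The numbers behind a box plot can be checked directly. This sketch computes the quartiles per rating and counts outliers with the 1.5 × IQR rule that box plots use for their whiskers; the word counts are hypothetical.

```python
import pandas as pd


def box_stats(df, value="review_length", by="rating"):
    """Quartiles and outlier counts per group, using the 1.5 x IQR rule of a box plot."""
    rows = {}
    for key, s in df.groupby(by)[value]:
        q1, median, q3 = s.quantile([0.25, 0.5, 0.75])
        iqr = q3 - q1
        lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        rows[key] = {
            "q1": q1,
            "median": median,
            "q3": q3,
            "outliers": int(((s < lower) | (s > upper)).sum()),
        }
    return pd.DataFrame.from_dict(rows, orient="index")


# Hypothetical word counts: one very long five-star review
data = pd.DataFrame({
    "rating": [5, 5, 5, 5, 5, 1, 1, 1, 1],
    "review_length": [10, 12, 11, 13, 200, 20, 22, 24, 26],
})
stats = box_stats(data)
print(stats)
```

The chart itself can be drawn with `data.boxplot(column="review_length", by="rating")` in pandas (requires matplotlib); the points it draws beyond the whiskers correspond to the outliers counted here, since both use the 1.5 × IQR rule by default.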


Sentiment Analysis

To classify reviews as positive or negative, we will consider only ratings of 1 and 5. Let's create a new DataFrame that contains only the one- and five-star reviews.


Out of the 23,869 records, 12,838 have scores of 1 or 5.
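Filtering to the two extreme ratings is a single boolean mask with `isin`, shown here on stand-in data.

```python
import pandas as pd

data = pd.DataFrame({
    "rating": [5, 1, 4, 3, 5, 2],
    "Review": ["Loved it.", "Awful.", "Good.", "Okay.", "Great pizza!", "Meh."],
})

# Keep only the clearly negative (1-star) and clearly positive (5-star) reviews
df = data[data["rating"].isin([1, 5])].reset_index(drop=True)
print(df["rating"].value_counts())
```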

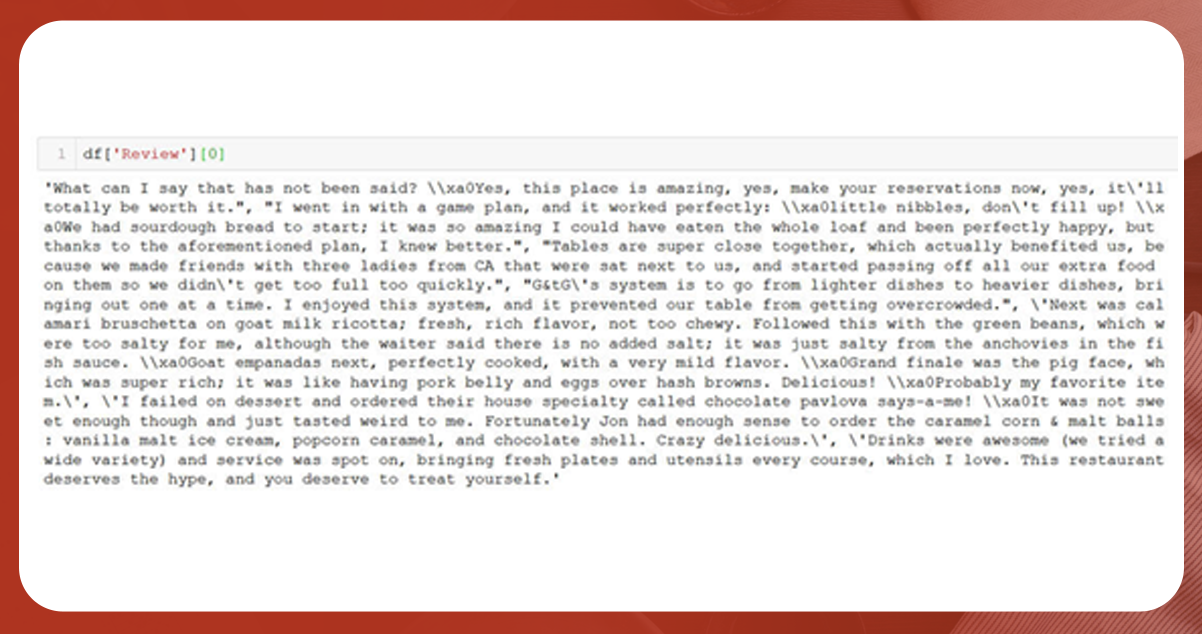

Before further analysis, the review text must be cleaned. Let's look at an example to see what we are dealing with.


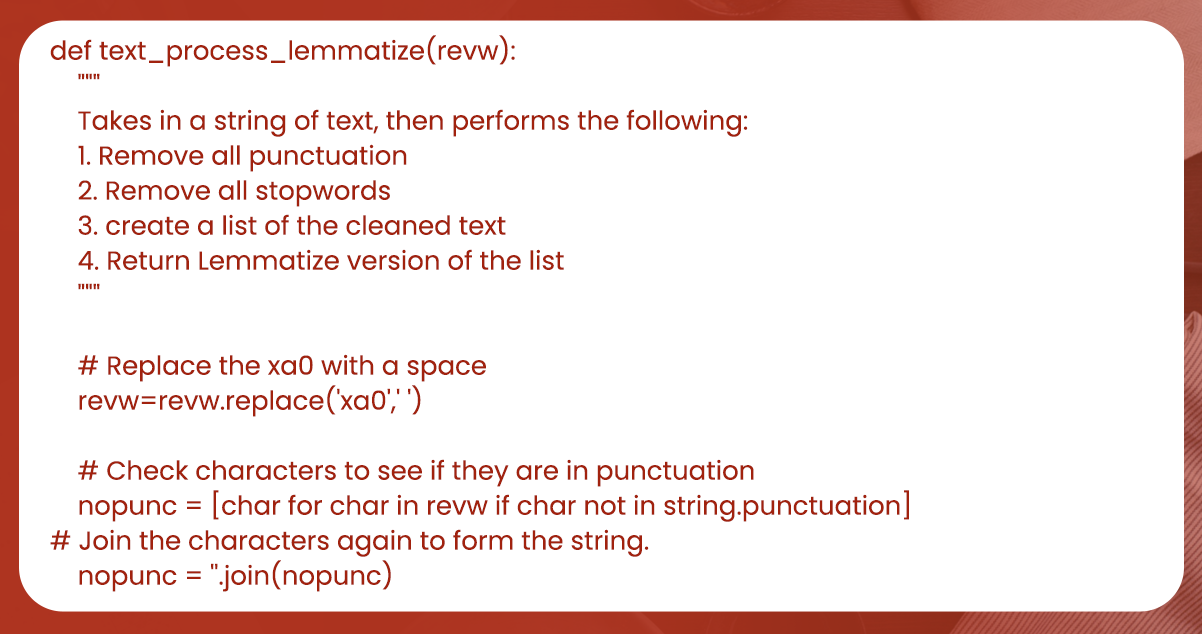

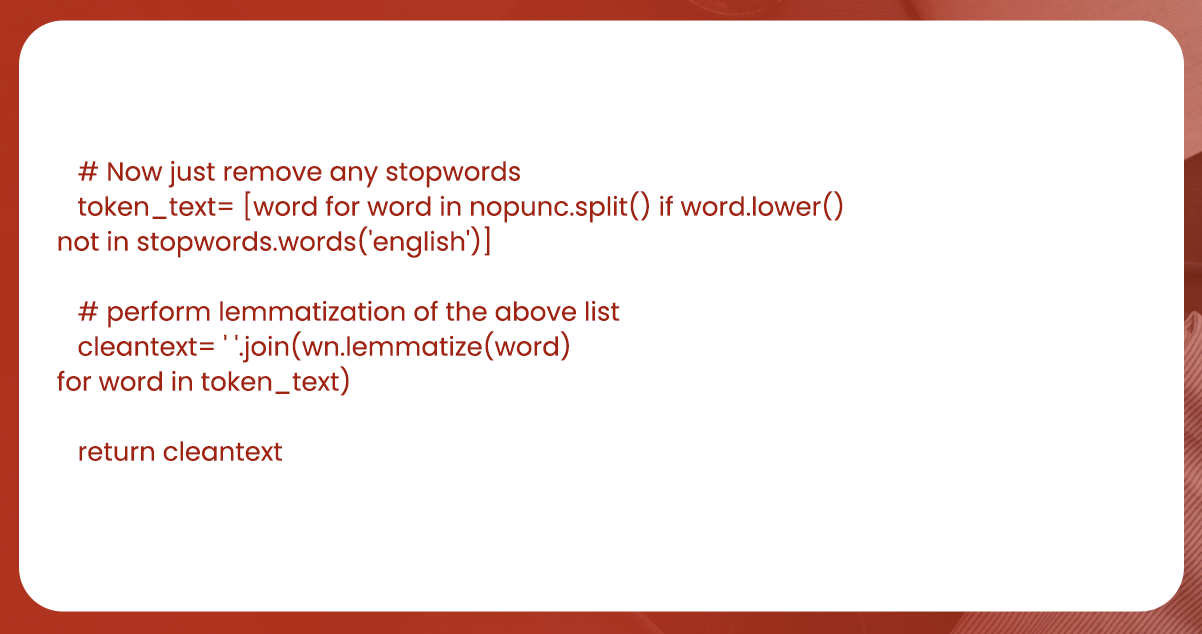

The text also contains many punctuation marks and special codes such as '\xa0'. '\xa0' is the non-breaking space in Latin-1 (ISO 8859-1), i.e. chr(160), and should be replaced with a regular space. Next, we write a function that removes punctuation, splits the text into words, and lemmatizes them in one step.
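A minimal sketch of the first cleaning steps using only the standard library: replace the non-breaking space, delete ASCII punctuation with a translation table, and lowercase. `clean_text` is an illustrative name.

```python
import string

# Translation table that deletes every ASCII punctuation character
PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def clean_text(text):
    """Replace non-breaking spaces, strip punctuation, lowercase, and normalise spacing."""
    text = text.replace("\xa0", " ")  # chr(160), the Latin-1 non-breaking space
    text = text.translate(PUNCT_TABLE)
    return " ".join(text.lower().split())


print(clean_text("Great\xa0pizza!!! Won't return."))
```

Deleting apostrophes turns "won't" into "wont"; that is usually acceptable for a bag-of-words model as long as it is done consistently.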

A bag of words represents a text as the collection of its words, disregarding grammar and word order. Natural language processing frequently uses bags of words as a preprocessing step for text. A common practice is to use the bag-of-words model to train a classifier on the frequency of each word.
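The idea can be shown with `collections.Counter`: each review becomes a word-count mapping, and a shared sorted vocabulary turns those counts into equal-length vectors. The token lists are hypothetical.

```python
from collections import Counter

reviews = [
    ["great", "pizza", "great", "service"],
    ["cold", "pizza"],
]

# A bag of words keeps only counts, so word order does not matter
bags = [Counter(tokens) for tokens in reviews]

# Fixed vocabulary -> one count vector per review (the idea behind CountVectorizer)
vocab = sorted(set().union(*bags))
vectors = [[bag[word] for word in vocab] for bag in bags]
print(vocab)
print(vectors)
```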

Lemmatization

Lemmatization groups the inflected forms of a word into a single base form, or lemma, for analysis. It always returns a word in its dictionary form. For instance, "types", "typed", and "typing" are all reduced to one word, "type". This will be useful for our analysis.

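Real lemmatizers such as NLTK's `WordNetLemmatizer` look words up in the WordNet lexicon (after `nltk.download("wordnet")`) and need a part-of-speech hint, e.g. `lemmatize("typing", pos="v")`, to treat a word as a verb. The sketch below shows only the core idea with a tiny, hypothetical lookup table.

```python
# Hypothetical, hand-written lemma table; a real lemmatizer uses a full lexicon
LEMMAS = {
    "types": "type", "typed": "type", "typing": "type",
    "ate": "eat", "eaten": "eat", "eating": "eat",
    "dishes": "dish", "better": "good",
}


def lemmatize(word):
    """Map an inflected form to its dictionary form; unknown words pass through."""
    return LEMMAS.get(word.lower(), word.lower())


print([lemmatize(w) for w in ["Typing", "typed", "dishes", "pizza"]])
```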

People frequently use neutral words (stop words) such as "the", "is", and "and". These can be ignored because they carry neither a positive nor a negative connotation.

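The cleaning steps can be combined into one function. This is a sketch under assumptions, not the author's exact code: it keeps the name `text_process_lemmatize` used in the next step, uses scikit-learn's built-in `ENGLISH_STOP_WORDS` as the stop-word list (the original list is not shown), and takes the lemmatizer as a parameter (identity by default) so it runs without downloading NLTK data.

```python
import string

from sklearn.feature_extraction.text import ENGLISH_STOP_WORDS

PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def text_process_lemmatize(text, lemmatize=lambda word: word, stop_words=ENGLISH_STOP_WORDS):
    """Clean a review and return its list of lemmas.

    1. replace non-breaking spaces with regular spaces
    2. remove punctuation and lowercase
    3. drop stop words
    4. lemmatize each remaining word
    """
    text = text.replace("\xa0", " ").translate(PUNCT_TABLE).lower()
    return [lemmatize(word) for word in text.split() if word not in stop_words]


print(text_process_lemmatize("The pizza was delicious!\xa0We loved it."))
```

With NLTK and its WordNet data installed, `WordNetLemmatizer().lemmatize` (from `nltk.stem`) can be passed as `lemmatize`. Note that scikit-learn's stop-word list contains negations such as "not", which can flip a review's sentiment, so you may want to remove them from the set.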

Let's apply the function we just created to the review column.


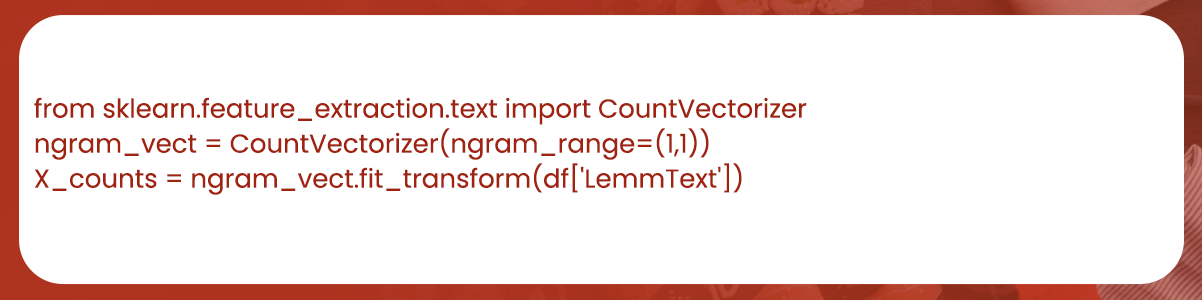

```python
df['LemmText'] = df['Review'].apply(text_process_lemmatize)
```

Vectorization

The lists of lemmas in df must be converted into numeric vectors before a machine learning model can use them. This process is called vectorization. The result is a matrix in which each review is a row, each distinct lemma is a column, and each cell holds the number of times that lemma occurs in the review. We use scikit-learn's CountVectorizer, which can also count n-grams; here we use unigrams only.


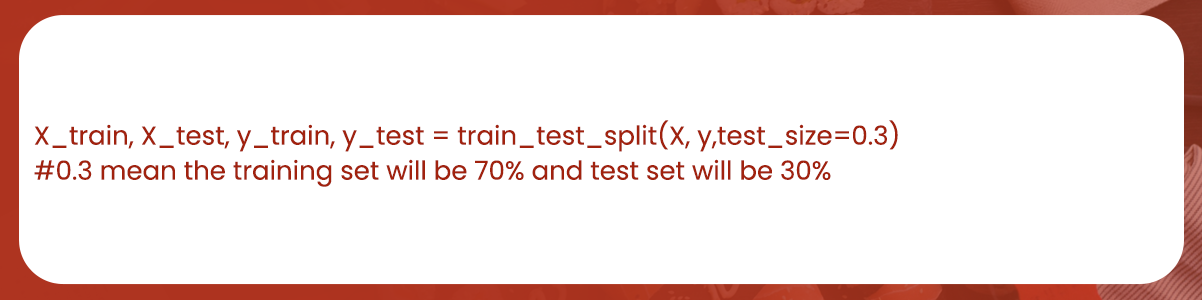
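A minimal unigram vectorization sketch. The lemma lists are stand-ins for `df['LemmText']`; joining them back into strings lets `CountVectorizer` apply its default tokenizer, which ignores one-character tokens.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Stand-in for df['LemmText']: one list of lemmas per review
lemm_text = [["good", "food"], ["bad", "food"], ["good", "service"]]
docs = [" ".join(lemmas) for lemmas in lemm_text]

# ngram_range=(1, 1) -> unigrams only; (1, 2) would add two-word phrases
vectorizer = CountVectorizer(ngram_range=(1, 1))
X = vectorizer.fit_transform(docs)  # sparse matrix: rows = reviews, columns = lemmas

print(sorted(vectorizer.vocabulary_))
print(X.toarray())
```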

Train Test Split

Use scikit-learn's train_test_split function to create the training and test sets.

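A sketch of the split on stand-in data. The `test_size` and `random_state` values are illustrative, not the article's. `stratify=y` keeps the ratio of 1- to 5-star labels the same in both splits, which matters here because five-star reviews far outnumber one-star ones.

```python
from sklearn.model_selection import train_test_split

# Stand-in features and labels: 1 = negative, 5 = positive
X = ["great pizza", "loved it", "amazing food", "friendly staff", "tasty dishes",
     "cold food", "rude staff", "awful service", "slow kitchen", "bad pizza"]
y = [5, 5, 5, 5, 5, 1, 1, 1, 1, 1]

# test_size and random_state are illustrative; stratify keeps the 1/5 ratio in both splits
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)
print(len(X_train), len(X_test))
```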

Let's fit a MultinomialNB model to the X_train and y_train data. We will use Multinomial Naive Bayes to classify sentiment: one-star ratings label negative reviews, and five-star ratings label positive reviews.

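A self-contained sketch of fitting and predicting with `MultinomialNB` on a few hypothetical reviews. The key detail is that the test text is transformed with the vectorizer fitted on the training text, so both share the same columns.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Hypothetical training reviews: 5 = positive, 1 = negative
train_docs = [
    "great food great service",
    "delicious amazing pizza",
    "terrible awful food",
    "rude slow service",
]
y_train = [5, 5, 1, 1]

vectorizer = CountVectorizer()
X_train = vectorizer.fit_transform(train_docs)

# alpha=1.0 (the default) is Laplace smoothing, so unseen word/class pairs are not zero
nb = MultinomialNB()
nb.fit(X_train, y_train)

# Reuse the fitted vocabulary: call transform, not fit_transform, on test data
X_test = vectorizer.transform(["amazing great pizza", "awful rude food"])
NBpredictions = nb.predict(X_test)
print(NBpredictions)
```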

Now let's predict the ratings for the X_test set.


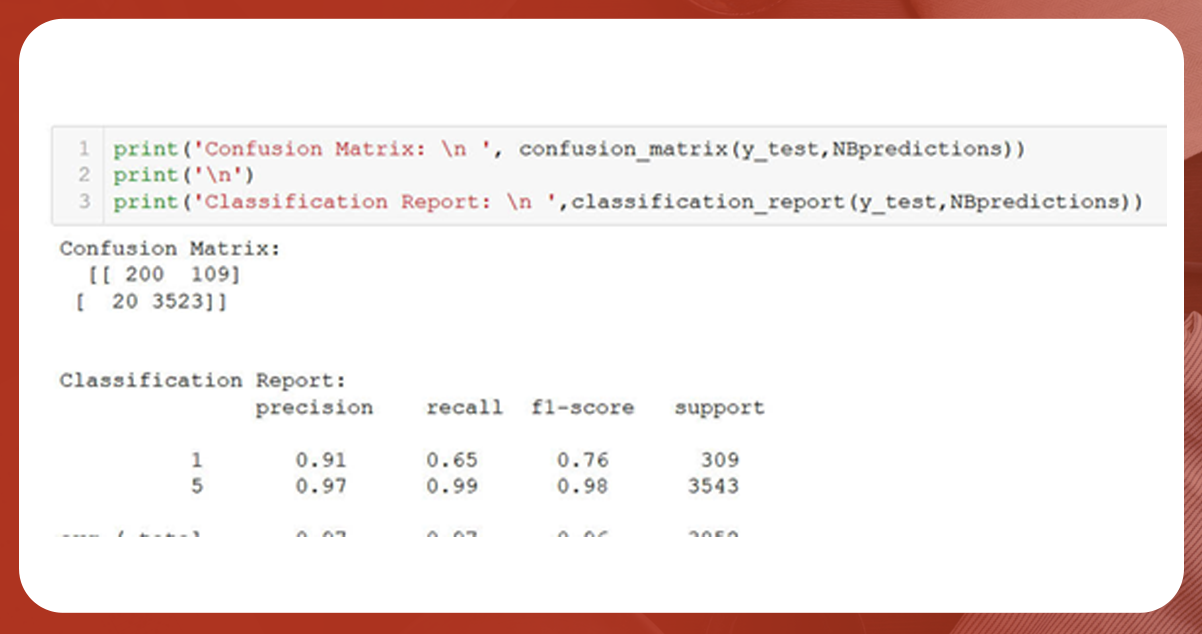

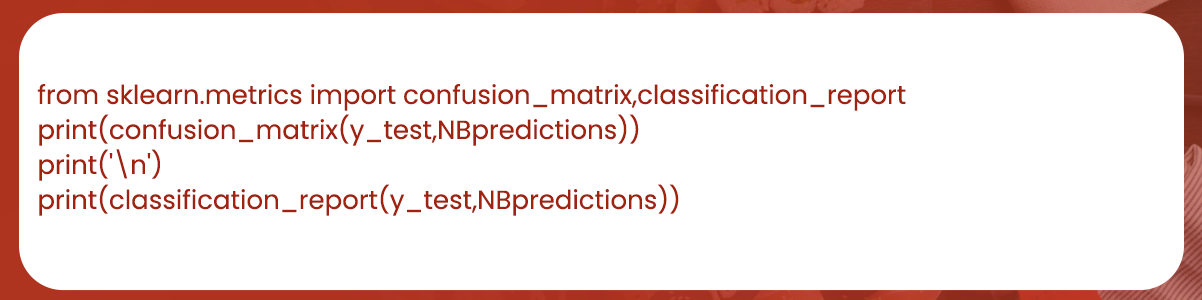

```python
NBpredictions = nb.predict(X_test)
```

Evaluation

Let's compare our model's predictions with the actual star ratings in y_test.

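The comparison can be done with scikit-learn's metrics. A sketch with stand-in labels and predictions:

```python
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# Stand-in true labels and predictions (1 = negative, 5 = positive)
y_test = [1, 5, 5, 1, 5]
NBpredictions = [1, 5, 1, 1, 5]

# Rows = true rating, columns = predicted rating, in the order given by labels
cm = confusion_matrix(y_test, NBpredictions, labels=[1, 5])
acc = accuracy_score(y_test, NBpredictions)

print(cm)
print(classification_report(y_test, NBpredictions))
print(f"accuracy = {acc:.2f}")
```

Accuracy should be read against the class balance: 11,859 of the 12,838 one- and five-star reviews are five-star, so a model that always predicts 5 would already score about 92.4% (if the test split has the same mix). The per-class recall for the 1-star class in the classification report shows more clearly how well negative reviews are detected.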

The model achieves an accuracy of 97%. Based on the text of a review, it can predict whether the customer liked or disliked the restaurant.

Want to scrape Yelp review data and other restaurant reviews? Contact Food Data Scrape today or get a free quote!

If you are looking for Food Data Scraping and Mobile Grocery App Scraping, email us right now!